The Logic and Limits of Event Studies in Securities Fraud Litigation

Event studies have become increasingly important in securities fraud litigation, and the Supreme Court’s 2014 decision in Halliburton Co. v. Erica P. John Fund, Inc. heightened their importance by holding that the results of event studies could be used to obtain or rebut the presumption of reliance at the class certification stage. As a result, getting event studies right has become critical. Unfortunately, courts and litigants widely misunderstand the event study methodology, leading, as in Halliburton, to conclusions that differ from the stated standard.

This Article provides a primer explaining the event study methodology and identifying the limitations on its use in securities fraud litigation. It begins by describing the basic function of the event study and its foundations in financial economics. The Article goes on to identify special features of securities fraud litigation that cause the statistical properties of event studies to differ from those in the scholarly context in which event studies were developed. Failure to adjust the standard approach to reflect these special features can lead an event study to produce conclusions inconsistent with the standards courts intend to apply. Using the example of the Halliburton litigation, we illustrate the use of these adjustments and demonstrate how they affect the results in that case.

The Article goes on to highlight the limitations of event studies and explains how those limitations relate to the legal issues for which they are introduced. These limitations bear upon important normative questions about the role event studies should play in securities fraud litigation.

Introduction

In June 2014, on its second trip to the U.S. Supreme Court, Halliburton scored a partial victory.[1] Halliburton failed to persuade the Supreme Court to overrule its landmark decision in Basic Inc. v. Levinson,[2] which had approved the fraud-on-the-market (FOTM) presumption of reliance in private securities fraud litigation.[3] It did, however, persuade the Court to allow defendants to introduce evidence of lack of price impact at class certification.[4] As the Court explained, Basic “does not require courts to ignore a defendant’s direct, . . . salient evidence showing that the alleged misrepresentation did not actually affect the stock’s market price and, consequently, that the Basic presumption does not apply.”[5]

The concept of price impact[6] is a critical component of securities fraud litigation. Although Halliburton II considered price impact only in the context of determining plaintiffs’ reliance on fraudulent statements, price impact is critical to other elements of securities fraud, including loss causation, materiality, and damages. The challenge is how to determine whether fraudulent statements have affected stock price. This task is not trivial—stock prices fluctuate continuously in response to a variety of issuer and market developments as well as “noise” trading. To address the question, litigants use event studies.[7]

Event studies have their origins in the academic literature.[8] Financial economists use event studies to measure the relationship between stock prices and various types of events.[9] The core contribution of the event study is its ability to differentiate between price fluctuations that fall within the range of typical variation for a security and highly unusual price movements. When a highly unusual price movement occurs immediately after an event and has no other potential causes, one may often reasonably infer that the event had a price impact.[10]

Use of the event study methodology has become ubiquitous in securities fraud litigation.[11] Indeed, many courts have concluded that the use of an event study is preferred or even required to establish one or more of the necessary elements of the plaintiffs’ case.[12] But event studies present challenges in securities fraud litigation. First, it is unclear that courts fully understand event study methodology. For example, Justice Alito asked counsel for the petitioner at oral argument in Halliburton II:

Can I ask you a question about these event studies to which you referred? How accurately can they distinguish between . . . the effect on price of the facts contained in a disclosure and an irrational reaction by the market, at least temporarily, to the facts contained in the disclosure?[13]

Counsel responded to Justice Alito’s question by stating: “Event studies are very effective at making that sort of determination.”[14] In reality, however, event studies can do no more than demonstrate highly unusual price changes. Event studies do not speak to the rationality of those price changes.

Second, event studies only measure the movement of a stock price in response to the release of unanticipated, material information. In circumstances in which fraudulent statements falsely confirm prior statements, the stock price would not be expected to move.[15] Event studies are not capable of measuring the effect of these so-called confirmatory disclosures on stock price.[16] Similarly, in cases involving multiple “bundled” disclosures, event studies have limited capacity to identify the particular contribution of each piece of information or the degree to which the effects of multiple disclosures may offset each other.[17]

Third, there are important differences between the scholarly contexts for which event studies were originally designed and the use of event studies in securities fraud litigation. For example, academics originally designed the event study methodology to measure the effect of a single event across multiple firms, the effects of multiple events at a single firm, or the effects of multiple events at multiple firms.[18] By contrast, an event study used in securities fraud litigation typically requires evaluating the impact of individual events on a single firm’s stock price.[19] These differences have important methodological implications. In addition, determining whether to characterize a price movement as highly unusual is the product of methodological choices, including choices about the level of statistical significance and thus statistical power. In the securities litigation context, those choices have normative implications that courts have not considered.[20] They also may have implications that are inconsistent with governing legal standards.[21]

In this Article, we examine the use of the event study methodology in securities fraud litigation. Part I demonstrates why the concept of a highly unusual price movement is central to a variety of legal issues in securities fraud litigation. Part II explains how event studies work. Part III conducts a stylized event study using data from the Halliburton litigation.[22] Part IV identifies the special features of securities fraud litigation that require adjustments to the standard event study approach and demonstrates how a failure to incorporate these features can lead to conclusions inconsistent with the standards intended by courts. Part V highlights methodological limitations of event studies—i.e., what they can and cannot prove. It also raises questions about whether the 5% significance level typically used in securities litigation is appropriate in light of legal standards of proof. Finally, this Part touches on normative implications that flow from the use of this demanding significance level.

A review of judicial use of event studies raises troubling questions about the capacity of the legal system to incorporate social science methodology, as well as whether there is a mismatch between this methodology and governing legal standards. Our analysis demonstrates that the proper use of event studies in securities fraud litigation requires care, both in a better understanding of the event study methodology and in an appreciation of its limits.

I. The Role of Event Studies in Securities Litigation

In this Part, we take a systematic look at the different questions that event studies might answer in a securities fraud case.[23] As noted above, the use of event studies in securities fraud litigation is widespread. As litigants and courts have become familiar with the methodology, they have used event studies to address a variety of legal issues.

The Supreme Court’s decision in Basic Inc. v. Levinson marked the starting point. In Basic, the Court accepted the FOTM presumption, which holds that “the market price of shares traded on well-developed markets reflects all publicly available information, and, hence, any material misrepresentations.”[24] The Court observed that the typical investor, in “buy[ing] or sell[ing] stock at the price set by the market[,] does so in reliance on the integrity of that price.”[25] As a result, the Court concluded that an investor’s reliance could be presumed for purposes of a 10b-5 claim if the following requirements were met: (i) the misrepresentations were publicly known; (ii) “the misrepresentations were material”; (iii) the stock was “traded [i]n an efficient market”; and (iv) “the plaintiff traded . . . between the time the misrepresentations were made and . . . [when] the truth was revealed.”[26]

The Court’s decision in Basic was influenced by a law review article by Professor Daniel Fischel of the University of Chicago Law School.[27] Fischel argued that FOTM offered a more coherent approach to securities fraud than then-existing practice because it recognized the market model of the investment decision.[28] Although Basic focused on the reliance requirement, Fischel argued that the only relevant inquiry in a securities fraud case was the extent to which market prices were distorted by fraudulent information—it was unnecessary for the court to make separate inquiries into materiality, reliance, causation, and damages.[29] Moreover, Fischel stated that the effect of fraudulent conduct on market price could be determined through a blend of financial economics and applied statistics. Although Fischel did not use the term “event study” in this article, he described the event study methodology.[30]

The lower courts initially responded to the Basic decision by focusing extensively on the efficiency of the market in which the securities traded.[31] The leading case on market efficiency, Cammer v. Bloom,[32] involved a five-factor test:

(1) the stock’s average weekly trading volume; (2) the number of securities analysts that followed and reported on the stock; (3) the presence of market makers and arbitrageurs; (4) the company’s eligibility to file a Form S-3 Registration Statement; and (5) a cause-and-effect relationship, over time, between unexpected corporate events or financial releases and an immediate response in stock price.[33]

Economists serving as expert witnesses generally use event studies to address the fifth Cammer factor.[34] In this context, the event study is used to determine the extent to which the market for a particular stock responds to new information. Experts generally look at multiple information or news events—some relevant to the litigation in question and some not—and evaluate the extent to which these events are associated with price changes in the expected directions.[35]

A number of commentators have questioned the centrality of market efficiency to the Basic presumption, disputing either the extent to which the market is as efficient as presumed by the Basic court[36] or the relevance of market efficiency altogether.[37] Financial economists do not consider the Cammer factors to be reliable for purposes of establishing market efficiency in academic research.[38] Nonetheless, it has become common practice for both plaintiffs and defendants to submit event studies that address the extent to which the market price of the securities in question responds to publicly reported events for the purpose of addressing Basic’s requirement that the securities were traded in an efficient market.[39]

Basic signaled a broader potential role for event studies, however. By focusing on the harm resulting from a misrepresentation’s effect on stock price rather than on the autonomy of investors’ trading decisions, Basic distanced federal securities litigation from the individualized tort of common law fraud.[40] In this sense, Basic was transformative—it introduced a market-based approach to federal securities fraud litigation.[41] Price impact is a critical component of this approach because absent an impact on stock price, plaintiffs who trade in reliance on the market price are not defrauded. As the Supreme Court subsequently noted in Halliburton II, “[i]n the absence of price impact, Basic’s fraud-on-the-market theory and presumption of reliance collapse.”[42]

The importance of price impact extends beyond the reliance requirement. In Dura Pharmaceuticals,[43] the plaintiffs, relying on Basic, filed a complaint in which they alleged that at the time they purchased Dura stock, its price had been artificially inflated due to Dura’s alleged misstatements.[44] The Supreme Court reasoned that while artificial price inflation at the time of the plaintiffs’ purchase might address the reliance requirement, plaintiffs were also required to plead and prove the separate element of loss causation.[45] Key to the Court’s reasoning was that purchasing at an artificially inflated price did not automatically cause economic harm because an investor might purchase at an artificially inflated price and subsequently sell while the price was still inflated.[46]

Following Dura, courts allowed plaintiffs to establish loss causation in various ways, but the standard approach involved the use of an event study “to demonstrate both that the economic loss occurred and that this loss was proximately caused by the defendant’s misrepresentation.”[47] Practically speaking, plaintiffs in the post-Dura era need to plead price impact both at the time of the misrepresentation[48] and on the alleged corrective disclosure date. However, in Halliburton I,[49] the Supreme Court explained that plaintiffs do not need to prove loss causation to avail themselves of the Basic presumption since this presumption has to do with “transaction causation”—the decision to buy the stock in the first place, which occurs before any evidence of loss causation could exist.[50]

Plaintiffs responded to Dura’s loss causation requirement by presenting event studies showing that the stock price declined in response to an issuer’s corrective disclosure. As the First Circuit recently explained: “The usual—it is fair to say ‘preferred’—method of proving loss causation in a securities fraud case is through an event study . . . .”[51]

Proof of price impact for purposes of analyzing reliance and causation also overlaps with the materiality requirement.[52] The Court has defined material information as information that has a substantial likelihood to be “viewed by the reasonable investor as having significantly altered the ‘total mix’ of information made available.”[53] Because market prices are a reflection of investors’ trading decisions, information that is relevant to those trading decisions has the capacity to impact stock prices, and similarly, information that does not affect stock prices is arguably immaterial.[54] As the Third Circuit explained in Burlington Coat Factory:[55] “In the context of an ‘efficient’ market, the concept of materiality translates into information that alters the price of the firm’s stock.”[56] Event studies can be used to demonstrate the impact of fraudulent statements on stock price, providing evidence that the statements are material.[57] The lower courts have, on occasion, accepted the argument that the absence of price impact demonstrates the immateriality of alleged misrepresentations.[58]

A statement can be immaterial because it is unimportant or because it conveys information that is already known to the market.[59] The latter argument is known as the “truth on the market” defense since the argument is that the market already knew the truth. According to the truth-on-the-market defense, an alleged misrepresentation that occurs after the market already knows the truth cannot change market perceptions of firm value because any effect of the truth will already have been incorporated into the market price.[60]

In Amgen,[61] the parties agreed that the market for Amgen’s stock was efficient and that the statements in question were public, but they disputed the reasons why Amgen’s stock price had dropped on the alleged corrective disclosure dates.[62] Specifically, the defendants argued that because the truth regarding the alleged misrepresentations was publicly known before plaintiffs purchased their shares, plaintiffs did not trade at a price that was impacted by the fraud.[63] Although the majority in Amgen concluded that proof of materiality was not required at the class certification stage, it acknowledged that the defendant’s proffered truth-on-the-market evidence could potentially refute materiality.[64]

Proof of economic loss and damages also overlaps with proof of loss causation. For plaintiffs to recover damages, they must show that they suffered an economic loss that was caused by the alleged fraud.[65] The 1934 Act provides that plaintiffs may recover actual damages, which must be proved.[66] A plaintiff who can prove damages has obviously proved she sustained an economic loss. At the same time, a plaintiff who cannot prove damages cannot prove she suffered an economic loss. Thus the economic loss and damages elements merge into one. A number of courts have rejected testimony or reports by damages experts that failed to include an event study.[67]

Notably, while the price impact at the time of the fraud (required in order to obtain the Basic presumption of reliance) is not the same as price impact at the time of the corrective disclosures (loss causation under Dura),[68] in many cases, the parties may seek to address both elements with a single event study. This is most common in cases that involve alleged fraudulent confirmatory statements. Misrepresentations that falsely confirm market expectations will not lead to an observable change in price.[69] But this does not mean they have no price impact. As the Second Circuit explained in Vivendi,[70] “a statement may cause inflation not simply by adding it to a stock, but by maintaining it.”[71] The relevant price impact is simply counterfactual: the price would have fallen had there not been fraud.[72]

In cases where plaintiffs allege confirmatory misrepresentations, event study evidence has no probative value related to the alleged misrepresentation dates since the plaintiffs’ own allegations predict no change in price. Thus there will be no observed price impact on alleged misrepresentation dates. However, a change in observed price will ultimately occur when the fraud is revealed via corrective disclosures. That is why it is appropriate to allow plaintiffs to use event studies concerning dates of alleged corrective disclosures to establish price impact for cases involving confirmatory alleged misrepresentations. A showing that the stock price responded to a subsequent corrective disclosure can provide indirect evidence of the counterfactual price impact of the alleged misrepresentation.[73] Such a conclusion opens the door to consideration of the type of event study conducted for purposes of loss causation, as we discuss below.[74]

Halliburton II presented this scenario. Plaintiffs alleged that Halliburton made a variety of fraudulent confirmatory disclosures that artificially maintained the company’s stock price.[75] Initially, defendants had argued that the plaintiff could not establish loss causation because Halliburton’s subsequent corrective disclosures did not impact the stock price.[76] When the Supreme Court held in Halliburton I that the plaintiffs were not required to prove loss causation on a motion for class certification,[77] “Halliburton argued on remand that the evidence it had presented to disprove loss causation also demonstrated that none of the alleged misrepresentations actually impacted Halliburton’s stock price, i.e., there was a lack of ‘price impact,’ and, therefore, Halliburton had rebutted the Basic presumption.”[78] Halliburton attempted to present “extensive evidence of no price impact,” evidence that the lower courts ruled was “not appropriately considered at class certification.”[79]

The Supreme Court disagreed. In Halliburton II, Chief Justice Roberts explained that the Court’s decision was not a bright-line choice between allowing district courts to consider price impact evidence at class certification or requiring them to consider the issue at a later point in trial; price impact evidence from event studies was often already before the court at the class certification stage because plaintiffs were using event studies to demonstrate market efficiency, and defendants were using event studies to counter this evidence.[80] Under these circumstances, the Chief Justice concluded that prohibiting a court from relying on this same evidence to evaluate whether the fraud affected stock price “makes no sense.”[81]

Because the question of price impact itself is unavoidably before the Court upon a motion for class certification, the Chief Justice explained that the Court’s actual choice concerned merely the type of evidence it would allow parties to use in demonstrating price impact on the dates of alleged misrepresentations or alleged corrective disclosures. “The choice . . . is between limiting the price impact inquiry before class certification to indirect evidence”—evidence directed at establishing market efficiency in general—“or allowing consideration of direct evidence as well.”[82] The direct evidence the Court’s majority determined to allow—concerning price impact on dates of alleged misrepresentations and alleged corrective disclosures—will typically be provided in the form of event studies.

On remand, the trial court considered the event study submitted by Halliburton’s expert, which purported to find that neither the alleged misrepresentations nor the corrective disclosures[83] identified by the plaintiff impacted Halliburton’s stock price.[84] After carefully considering the event studies submitted by both parties, which addressed six corrective disclosures, the court found that Halliburton had successfully demonstrated a lack of price impact as to five of the dates and granted class certification with respect to the December 7 alleged corrective disclosure.[85] For several dates, this conclusion was based on the district court’s determination that the event effects were statistically insignificant at the 5% significance level (equivalently, at the 95% confidence level).[86]

Following Halliburton II, several other lower courts have considered defendants’ use of event studies to demonstrate the absence of price impact. In Local 703, I.B. of T. Grocery v. Regions Financial Corp.,[87] the court of appeals concluded that the defendant had provided evidence that the stock price did not change in light of the misrepresentations and that the trial court, acting prior to Halliburton II, “did not fully consider this evidence.”[88] Accordingly, the court vacated and “remand[ed] for fuller consideration . . . of all the price-impact evidence submitted below.”[89] On remand, defendants argued that they had successfully rebutted the Basic presumption by providing evidence of no price impact on both the misrepresentation date and the date of the corrective disclosure.[90] The trial court disagreed. The court reasoned that the defendants’ own expert conceded that the 24% decline in the issuer’s stock on the date of the corrective disclosure was far greater than the New York Stock Exchange’s 6.1% decline that day and that given this discrepancy the defense had not shown the absence of price impact.[91] This decision places the burden of persuasion concerning price impact squarely on the defendants.[92]

In Aranaz v. Catalyst Pharmaceutical Partners Inc.,[93] the district court permitted the defendant an opportunity to rebut price impact at class certification.[94] The Aranaz court explained, however, that the defendant was limited to direct evidence that the alleged misrepresentations had no impact on stock price.[95] The defendants conceded that the stock price rose by 42% on the date of the allegedly misleading press release and fell by 42% on the date of the corrective disclosure[96] but argued that other statements in the two publications caused the “drastic changes in stock price.”[97] The court concluded that because the defendant had the burden of proving that “price impact is inconsistent with the results of their analysis,”[98] their evidence was not sufficient to show an absence of price impact. This determination as to the burden of persuasion tracks the approach taken by the Local 703 court discussed above. Further, following Amgen, the Aranaz court ruled that the truth-on-the-market defense would not defeat class certification because it concerns materiality and not price impact.[99]

The lower court decisions following Halliburton II demonstrate the growing importance of event studies. The most recent trial court decision as to class certification in the Halliburton litigation itself[100] demonstrates as well the challenges for the court in evaluating the event study methodology, an issue we will consider in more detail in Part III below.

Significantly, as reflected in the preceding discussion, proof of price impact is relevant to multiple elements of securities fraud. A single event study may provide evidence relating to materiality, reliance, loss causation, economic loss, and damages. Although such evidence might be insufficient on its own to prove one or more of these elements, event study evidence that negates any of the first three elements implies that plaintiffs will be unable to establish entitlement to damages. These observations explain why event studies play such a central role in securities fraud litigation.

Loss causation and price impact have taken center stage at the pleading and class certification stages. If the failure to establish price impact is fatal to the plaintiffs’ case, the defendants benefit by making that challenge at the pleading stage, before the plaintiffs can obtain discovery,[101] or by preventing plaintiffs from obtaining the leverage of class certification.[102] Accordingly, much of the Supreme Court’s jurisprudence on loss causation and price impact has been decided in the context of pretrial motions.

Basic itself was decided on a motion for class certification. A key factor in the Court’s analysis was the critical role that a presumption of reliance would play in enabling the plaintiff to address Rule 23’s commonality requirement.[103] As the Court explained, “[r]equiring proof of individualized reliance from each member of the proposed plaintiff class effectively would have prevented respondents from proceeding with a class action, since individual issues then would have overwhelmed the common ones.”[104] By facilitating class certification, Basic has been described as transforming private securities fraud litigation.[105]

Defendants have responded by attempting to increase the burden imposed on the plaintiff to obtain class certification. In Halliburton I, the lower courts accepted defendant’s argument that plaintiffs should be required to establish loss causation at class certification.[106] In Amgen, the defendants argued that the plaintiff should be required to establish materiality in order to obtain class certification.[107] Notably, in both cases, the defendants’ objective was to require the plaintiffs to prove price impact through an event study at a preliminary stage in the litigation rather than at the merits stage.

Similarly, the Court’s decision in Dura Pharmaceuticals was issued in the context of a motion to dismiss for failure to state a claim.[108] The complaint ran afoul of even the pre-Twombly[109] pleading standard by failing to allege that there had been any corrective disclosure associated with a loss.[110] The Dura Court held that the plaintiffs’ failure to plead loss causation meant that the complaint did not show entitlement to relief as required under Rule 8(a)(2).[111] In the post-Dura state of affairs, plaintiffs must identify both alleged misrepresentation and corrective disclosure dates to adequately plead loss causation. They would also be well-advised to allege that an expert-run event study establishes materiality, reliance, loss causation, economic loss, and damages. Failure to do so would not necessarily be fatal, but it would leave plaintiffs vulnerable to a Rule 12(b)(6) motion to dismiss. Given the importance of the event study in securities litigation, it is important to understand both the methodology involved and its limitations.

II. The Theory of Financial Economics and the Practice of Event Studies: An Overview

The theory of financial economics adopted by courts for purposes of securities litigation is based on the premise that publicly released information concerning a security’s value will be incorporated into its market price quickly.[112] This premise is known in financial economics as the semi-strong form of the “efficient market” hypothesis,[113] but we will refer to it simply as the efficient market hypothesis. Under the efficient market hypothesis, information that overstates a firm’s value will quickly inflate the firm’s stock price over the level that true conditions warrant. Conversely, information that corrects such inflationary misrepresentations will quickly lead the stock price to fall.

Financial economists began using event studies to measure how much stock prices respond to various types of news.[114] Typically, event studies focus not on the level of a stock’s price, but on the percentage change in stock price, which is known as the stock’s observed “return.” In its simplest form, an event study compares a stock’s return on a day when news of interest hits the market to the range of returns typically observed for that stock, taking account of what would have been expected given general changes in the overall market on that day. For example, if a stock typically moves up or down by no more than 1% in either direction but rises by 2% on a date of interest (after controlling for relevant market conditions), then the stock return moved an unusual amount on that date. What range is “typical,” and thus how large must a return be to be considered sufficiently unusual, are questions that event study authors answer using statistical significance testing.

A typical event study has five basic steps: (1) identify one or more appropriate event dates, (2) calculate the security’s return on each event date, (3) determine the security’s expected return for each event date, (4) subtract the expected return from the actual return to compute the excess return for each event date, and (5) evaluate whether the resulting excess return is statistically significant at a chosen level of statistical significance.[115] We treat these five steps in two sections.

A. Steps (1)–(4): Estimating a Security’s Excess Return

Experts typically address the first step (selecting the event date) by using the date on which the representation or disclosure was publicly made.[116] For purposes of public-market securities fraud, the information must be communicated widely enough that the market price can be expected to react to the information.[117] The second step (calculating a security’s actual return) requires only public information about daily security prices.[118]

The third step is to determine the security’s expected return on the event date, given market conditions that might be expected to affect the firm’s price even in the absence of the news at issue. Event study authors do this by using statistical methods to separate out components of a security’s return that are based on overall market conditions from the component due to firm-specific information. Market conditions typically are measured using a broad index of other stocks’ returns on each date considered in the event study or an index of returns of other firms engaged in similar business (since firms engaged in common business activities are likely to be affected by similar types of information). To determine the expected return for the security in question, an expert will estimate a regression model that controls for the returns to market or industry stock indexes.[119] The estimated coefficients from this model can then be used to measure the expected return for the firm in question, given the performance of the index variables included in the model.
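As an illustration only, the regression described above is often written in a single-index form. The specification and the symbols below are assumptions introduced for exposition, not the model used by any particular expert: R_t is the security's return on date t, R_{m,t} is the return on the chosen market or industry index, and the hatted terms are the estimated coefficients.

```latex
R_{t} = \alpha + \beta R_{m,t} + \varepsilon_{t},
\qquad
\widehat{E}[R_{t}] = \hat{\alpha} + \hat{\beta}\, R_{m,t}
```

Under this assumed specification, the expected return on any date is the fitted value obtained by plugging that date's index return into the estimated equation. A model with both a market index and an industry index would simply add a second slope term.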

The fourth step is to calculate the “excess return,”[120] which one does by subtracting the expected return from the actual return on the date in question. Thus the excess return is the component of the actual return that cannot be explained by market movements on the event date, given the regression estimates described above. So the excess return measures the stock’s reaction to whatever news occurred on the event date.
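Steps (2) through (4) can be sketched in a few lines of code. The sketch below is a minimal illustration rather than any expert's actual model: it assumes a single index instead of several, the function names `fit_market_model` and `excess_return` are hypothetical, and all of the return figures are invented for the example.

```python
from statistics import mean

def fit_market_model(index_returns, stock_returns):
    """Ordinary least squares of the stock's return on a single index return."""
    mean_x, mean_y = mean(index_returns), mean(stock_returns)
    sxx = sum((x - mean_x) ** 2 for x in index_returns)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(index_returns, stock_returns))
    beta = sxy / sxx                  # sensitivity of the stock to the index
    alpha = mean_y - beta * mean_x    # intercept
    return alpha, beta

def excess_return(alpha, beta, index_return, actual_return):
    expected = alpha + beta * index_return   # step (3): expected return
    return actual_return - expected          # step (4): actual minus expected

# Hypothetical estimation-period daily returns, in percent.
index_returns = [1.0, -0.5, 0.3, -1.2, 0.8, 0.0]
stock_returns = [1.1, -0.2, 0.1, -1.5, 0.9, 0.2]

alpha, beta = fit_market_model(index_returns, stock_returns)
# Hypothetical event date: the index fell 1% and the stock fell 4%.
print(excess_return(alpha, beta, index_return=-1.0, actual_return=-4.0))
```

A model with several indexes, like the ones the experts in litigation typically use, would replace the single slope with one coefficient per index, but the subtraction in step (4) is unchanged.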

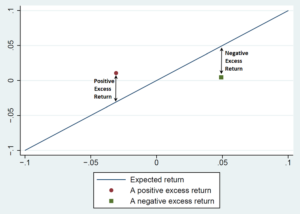

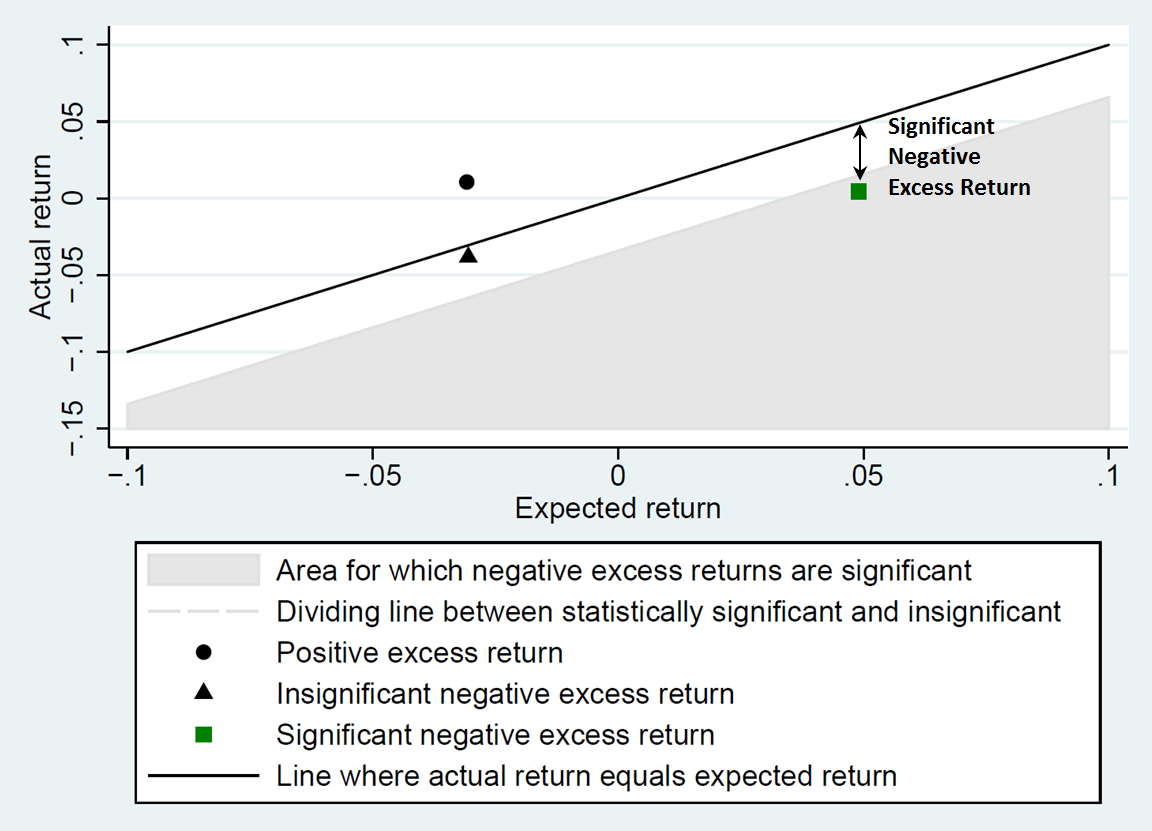

A positive excess return indicates that the firm’s stock increased more than would be expected based on the statistical model. A negative excess return indicates that the stock fell more than the model predicts it should have. Figure 1 illustrates the calculation of excess returns from actual returns and expected returns. The figure plots the stock’s actual daily return on the vertical axis and its expected daily return on the horizontal axis. The upwardly sloped straight line represents the collection of points where the actual and expected returns are equal. The magnitude of the excess return at a given point is the height between that point and the upwardly sloped straight line. The point plotted with a circle lies above the line where actual and expected returns are equal, so this point indicates a positive excess return. By contrast, at the point plotted with a square, the actual return is below the line where the actual and expected returns are equal, so the excess return is negative.

Figure 1: Illustrating the Calculation of Excess Returns from Actual and Expected Returns

B. Step (5): Statistical Significance Testing in an Event Study

Our fifth and final step is to determine whether the estimated excess return is statistically significant at the chosen level of significance, which is frequently the 5% level. The use of statistical significance testing is designed to distinguish stock-price changes that are just the result of typical volatility from those that are sufficiently unusual that they are likely a response to the alleged corrective disclosure.

Tests of statistical significance all boil down to asking whether some statistic’s observed value is far enough away from some baseline level one would expect that statistic to take. For example, if one flips a fair coin 100 times, one should expect to see heads come up on roughly 50% of the flips, so the baseline level of the heads share is 50%. The hypothesis that the coin is fair, so that the chance of a heads is 50%, is an example of what statisticians call a null hypothesis: a maintained assumption about the object of statistical study that will be dropped only if the statistical evidence is sufficiently inconsistent with the assumption.

Since one can expect random variation to affect the share of heads in 100 coin flips, most scholars would find it unreasonable to reject the null hypothesis that the coin is fair simply because one observes a heads share of, say, 49% or 51%. Even though these results do not equal exactly the baseline level, they are close enough that most applied statisticians would consider this evidence too weak to reject the null hypothesis that the coin is fair.[121] On the other hand, common sense and statistical methodology suggest that if eighty-nine of 100 tosses yielded heads, it would be strong evidence that the coin was biased toward heads. A finding of eighty-nine heads would cause most scholars to reject the null hypothesis that the coin is fair.
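The coin example can be made concrete with an exact calculation. The sketch below computes, under the null hypothesis of a fair coin, the probability of a heads count at least as far from 50 as the one observed. The function name `two_sided_p_value` is our own, and the choice of a two-sided measure is an assumption made for illustration.

```python
from math import comb

def two_sided_p_value(heads, flips=100):
    """Probability, for a fair coin, of a heads count at least as far
    from flips / 2 as the observed count."""
    distance = abs(heads - flips / 2)
    extreme = sum(comb(flips, k) for k in range(flips + 1)
                  if abs(k - flips / 2) >= distance)
    return extreme / 2 ** flips

for heads in (49, 51, 89):
    print(heads, two_sided_p_value(heads))
```

A count of 49 or 51 heads yields a probability above 90%, so such results are entirely ordinary for a fair coin, while the probability for 89 heads is vanishingly small.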

Event study tests of whether a stock price moved in response to information are similar to the coin toss example. They seek to determine whether the stock’s excess return was highly unusual on the event date. The null hypothesis in an event study is that the news at issue did not have any price impact. Under this null hypothesis, the stock’s return should reflect only the usual relationship between the stock and market conditions on the event date. In other words, the stock’s return should be the expected return, together with normal variation. Our baseline expectation for the stock’s excess return is that it should be zero. Normal variation, however, will cause the stock’s actual return to differ somewhat from the expected return. Statistical significance testing focuses on whether this deviation—the actual excess return on the event date—is highly unusual.

What counts as highly unusual in securities litigation? Typically courts and experts have treated an event-date effect as statistically significant if the event-date’s excess return is among the 5% most extreme values one would expect to observe in the absence of any fraudulent activity.[122] In this situation, experts equivalently say that there is statistically significant evidence at the 5% level, or “at level 0.05,” or “with 95% confidence.”[123]

Implicit in this discussion of statistical significance is the scholarly norm of declaring that evidence that disfavors a null hypothesis is not strong enough to reject that hypothesis unless it clears a demanding threshold. Thus, applied statisticians often say that a statistically insignificant estimate is not necessarily proof that the null hypothesis is true—just that the evidence is not strong enough to declare it false. Such statisticians really have three categories of conclusion: that the evidence is strong enough to reject the null hypothesis, that the evidence is basically consistent with the null hypothesis, and that the evidence is inconsistent with the null hypothesis but not so much as to warrant rejection of the null hypothesis. One might think of such statisticians who use demanding significance levels such as the 5% level as starting with a strong presumption in favor of the null hypothesis so that only strong evidence against it will be deemed sufficient to reject the null hypothesis.

Whether an approach of adopting a strong presumption in favor of the defendant is consistent with legal standards in securities litigation is beyond the scope of this Article but it is a topic that warrants future discussion.[124] For purposes of this Article, though, we take the choice of the 5% significance level as given and seek to provide courts with the methodological knowledge necessary to apply that significance level properly.[125]

Experts typically assume that in the absence of any fraud-related event, a stock’s excess returns—that is, the typical variability not driven by the news at issue in litigation—will follow a normal distribution,[126] an issue we discuss in more detail in Part IV. For a random variable that follows a normal distribution with mean zero, 95% of realizations of that variable will take on a value that is within 1.96 standard deviations of zero.[127] Experts assuming normality of excess returns and using the 95% confidence level often determine that the excess return is highly unusual if its magnitude is greater than 1.96 standard deviations. For example, if the standard deviation of a stock’s excess returns is 1.5%, an expert might declare an event date’s excess return statistically significant only if it is more than 2.94 percentage points from zero.[128] In this example, the expert has determined that the “critical value” is 2.94 percentage points: any value of the event date excess return greater in magnitude than this value will lead the expert to determine that the excess return is statistically significant at the 5% level. An excess return of smaller magnitude would lead to a finding of statistical insignificance.
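The arithmetic behind the 1.96 multiplier and the 2.94 critical value can be checked directly. The following is a minimal sketch using the normal distribution from Python's standard library; the function name `critical_value` is hypothetical, and the 1.5% standard deviation is the illustrative figure used in the text.

```python
from statistics import NormalDist

def critical_value(std_dev, level=0.05):
    """Two-sided critical value under normality, in the units of std_dev."""
    # Split the significance level evenly between the two tails.
    multiplier = NormalDist().inv_cdf(1 - level / 2)   # about 1.96 when level is 0.05
    return multiplier * std_dev

cv = critical_value(1.5)   # standard deviation of 1.5%, as in the example
print(round(cv, 2))        # 2.94

# An excess return is flagged only if its magnitude exceeds the critical value.
for excess in (-3.5, -2.0, 3.1):
    print(excess, abs(excess) > cv)
```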

When an event date excess return is statistically significant at the chosen significance level, courts will treat the size of the excess return as a measure of the price effect associated with the news at issue.[129] One consequence is that the excess return may then be used as a basis for determining damages. On the other hand, if the excess return is statistically insignificant at the chosen level, then courts find the statistical evidence too weak to meet the plaintiff’s burden of persuasion that the information affected the stock price.

Note that a statistically insignificant finding may occur even when the excess return is directionally consistent with the plaintiff’s allegations. In such a case, the evidence is consistent with the plaintiff’s theory of the case, but the size of the effect is too small to be statistically significant at the level used by the court. Such an outcome may sometimes occur even when the null hypothesis was really false, i.e., there really was a price impact due to the news on the event date.

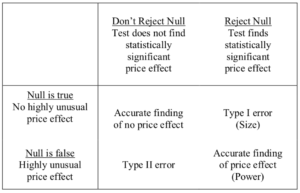

This last point hints at an inherent trade-off reflected in statistical significance testing. When one conducts a statistical significance test, there are four possible outcomes. These four categories of statistical inference are summarized in Table 1. Two of these are correct inferences: the test may fail to reject a null hypothesis that is really true, or the test may reject a null hypothesis that is really false. The first of these cases correctly determines that there was no price impact (the upper left box in Table 1). The second case correctly determines that there was a price impact (the lower right box in Table 1). Given that there really was a price impact, the probability of correctly making this determination is known as the test’s power.[130]

The other two outcomes are incorrect inferences. The first mistaken inference involves rejecting a null hypothesis that is actually true. This is known as a Type I error (top right box in Table 1). The probability of this result, given that the null hypothesis is true, is known as a test’s size.[131] The second incorrect inference is failing to reject a null hypothesis that is actually false (lower left box in Table 1); this is known as a Type II error.[132]

Table 1: Four Categories of Statistical Inference
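The error rates defined above can be computed for a stylized case. The sketch below assumes normally distributed excess returns and a hypothetical true price impact equal to a drop of one standard deviation. Both assumptions, and the function name `error_rates`, are ours and are chosen only for illustration.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def error_rates(critical_sd, true_effect_sd):
    """Return (Type I rate, Type II rate) for a two-sided test.

    critical_sd: critical value in standard deviation units.
    true_effect_sd: the true price impact in standard deviation units,
    used only to compute the Type II rate.
    """
    type_1 = 2 * Z.cdf(-critical_sd)
    # Power: probability that the excess return lands in either rejection region.
    power = (Z.cdf(-critical_sd - true_effect_sd)
             + 1 - Z.cdf(critical_sd - true_effect_sd))
    return type_1, 1 - power

for critical in (1.645, 1.96, 2.576):
    type_1, type_2 = error_rates(critical, true_effect_sd=-1.0)
    print(f"critical={critical}: Type I={type_1:.3f}, Type II={type_2:.3f}")
```

Under these assumptions, a critical value of 1.96 gives a Type I error rate of about 5% but a Type II error rate above 80%, and raising the critical value lowers the first rate while raising the second.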

The trade-off that arises in statistical significance testing is simple: reducing a test’s Type I error rate means increasing its Type II error rate, and vice versa.[133] As noted above, event study authors usually use a confidence level of 95%, which is the same as a Type I error rate of 5%.[134] The Type II error rate associated with this Type I error rate will depend on the typical range of variability of excess returns, but it has recently been pointed out that insisting on a Type I error rate of 5% when using event studies in securities fraud litigation can be expected to cause very high Type II error rates.[135] Another way to put this is that event studies used in securities litigation are likely to have very low power—very low probability of rejecting an actually false null hypothesis—when we insist on keeping the Type I error rate as low as 5%.[136] We discuss this very important issue further in subpart V(C).

A final issue related to statistical significance concerns who bears the burden of persuasion if the defendant seeks to use event study evidence to show that there was no price impact related to an alleged misrepresentation. Halliburton II states that “defendants must be afforded an opportunity before class certification to defeat the presumption through evidence that an alleged misrepresentation did not actually affect the market price of the stock.”[137] But the case does not announce what statistical standard will apply to defendants’ evidence. As Merritt Fox discusses, one view is that the defendant must present statistically significant evidence that the price changed in the direction opposite to the plaintiff’s allegations.[138] Alternatively, the defendant might have to present evidence that is sufficient only to persuade the court that its own evidence of the absence of price impact is more persuasive than the plaintiff’s affirmative evidence of price impact.[139]

As Fox has noted in other work, the applicable legal standard will have considerable impact on the volume of cases that are able to survive beyond a preliminary stage.[140] Further, Fox points out, a variety of factors affect the choice of approach, including social policy considerations about the appropriate volume of securities fraud litigation.[141] The question of Rule 301’s applicability was appealed to the Fifth Circuit by the Halliburton parties, but the parties reached a proposed settlement before that court could issue its ruling.[142] A full discussion of these issues is beyond the scope of the present Article. For concreteness, we will simply follow the approach taken by the district court in the ongoing Halliburton litigation. While that court found “that both the burden of production and the burden of persuasion are properly placed on Halliburton,”[143] the court did not understand that burden allocation to require Halliburton to affirmatively disprove the plaintiff’s allegations statistically. Rather, Halliburton needed only to “persuade the Court that its expert’s event studies [were] more probative of price impact than the Fund’s expert’s event studies.”[144] The rest of the court’s opinion makes clear that this means treating both sides’ event studies as if they are testing whether the statistical evidence is sufficient to establish that there is statistically significant evidence of a price impact at the 5% level, as discussed above. We will therefore continue to concentrate on that approach throughout this Article.

The foregoing discussion summarizes the basic methodology of event studies as they are commonly used in securities litigation. In the next Part, we present our own stylized event study of dates involved in the ongoing Halliburton litigation both to illustrate the principles described above and to facilitate our Part IV discussion of important refinements that experts and courts should make to achieve consistency with announced standards. We raise the question of whether those standards are appropriate in Part V.

III. The Event Study as Applied to the Halliburton Litigation

This Part uses data and methods from the opinions and expert reports in the Halliburton case to illustrate and critically analyze the use of an event study to measure price impact. Our objective is, initially, to provide a basic application of the theory described in the preceding Part for those readers having limited familiarity with the operational details. Then, in Part IV, we identify several problems with the typical execution of the basic approach and demonstrate the implications of making the necessary adjustments to respond to these problems.

A. Dates and Events at Issue in the Halliburton Litigation

Plaintiffs in the Halliburton litigation alleged that between the middle of 1999 and the latter part of 2001,[145] Halliburton and several of the company’s officers—collectively referred to here as simply “Halliburton”—made false and misleading statements about various aspects of the company’s business.[146] The operative complaint, together with the report filed by plaintiffs’ experts, named a total of thirty-five dates on which either misrepresenting statements or corrective disclosures (or both) allegedly occurred.[147] For purposes of illustration, consider two of the allegedly fraudulent statements:

- Plaintiffs alleged that in a 1998 10-K report filed on March 23, 1999, Halliburton failed to disclose that it faced the risk of having to “shoulder the responsibility” for certain asbestos claims filed against other companies; further, plaintiffs alleged that Halliburton failed to correctly account for this risk.[148]

- On November 8, 2001, Halliburton stated in its Form 10-Q filing for the third quarter of 2001 that the company had an accrued liability of $125 million related to asbestos claims and that “[W]e believe that open asbestos claims will be resolved without a material adverse effect on our financial position or the results of operations.”[149] Plaintiffs also alleged that this representation was false and misleading.[150]

Both the alleged misrepresentations described above were confirmatory in the sense that the plaintiffs alleged that Halliburton, rather than accurately informing the market of negative news, falsely confirmed prior good news that was no longer accurate.[151] The alleged result was that Halliburton’s stock price was inflated because it remained at a higher level than it would have had Halliburton disclosed accurately. Since false confirmatory misrepresentations do not constitute “new” information—even under the plaintiffs’ theory—neither of the two statements above would have been expected to cause an increase in Halliburton’s market price. As a result, in considering the price impact of the alleged misrepresentations, the district court allowed the plaintiffs to focus on whether subsequent alleged corrective disclosures were associated with reductions in Halliburton’s stock price.[152]

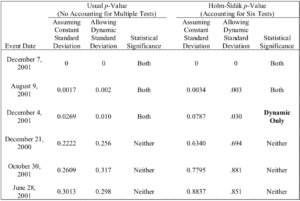

On July 25, 2015, the district court issued its most recent order and memorandum opinion concerning class certification.[153] By this point of the litigation, which had been ongoing for more than thirteen years, the event studies submitted by the parties’ experts[154] focused on six dates on which Halliburton had issued alleged corrective disclosures: December 21, 2000;[155] June 28, 2001;[156] August 9, 2001;[157] October 30, 2001;[158] December 4, 2001;[159] and December 7, 2001.[160]

The trial court concluded in its July 2015 decision, after weighing two competing expert reports, that five of these alleged corrective disclosures did not have a price impact that was statistically significant at the 5% level. For that reason, the district court denied class certification with respect to these five dates.[161] However, the district court found that the alleged corrective disclosure on December 7 was associated with a statistically significant price impact at the 5% level, in the direction necessary for plaintiffs to benefit from the Basic presumption. The court therefore certified a class action with respect to the alleged misrepresentations associated with December 7, 2001.[162]

B. An Illustrative Event Study of the Six Dates at Issue in the Halliburton Litigation

Following the approach outlined in Part II, we apply the event study to the six dates listed in subpart III(A). For our first step (selection of an appropriate event), we follow the parties and analyze the dates of the alleged corrective disclosures.[163]

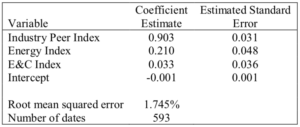

Next, we use the market model to construct Halliburton’s estimated return.[164] To account for factors outside the litigation likely associated with Halliburton’s stock performance, we followed the parties’ experts and estimated a market model with multiple reference indexes. The first such index, introduced by the defendants’ expert, is intended to track the performance of the S&P 500 Energy Index during the class period.[165] The plaintiffs’ expert pointed out that this index is dominated by “petroleum refining companies, not energy services companies like Halliburton.”[166] In his own market model, he therefore added a second index intended to reflect the performance of Halliburton’s industry peers.[167] We also included such an index.[168] Third, we included an index constructed to mimic the one the defendants’ expert constructed to reflect the engineering and construction aspects of Halliburton’s business.[169] Because we found that the return on the S&P 500 overall index added no meaningful explanatory power to the model, we did not include it.

The resulting market model estimates[170] are set forth in Table 2.[171] These estimates indicate that Halliburton’s daily stock return moves nearly one-for-one with the industry peer index constructed from analyst reports—a one percentage point increase in the industry peer index return is associated with roughly a 0.9-point increase in Halliburton’s return. This makes the industry peer index a good tool for estimating Halliburton’s expected return in the absence of fraud. The energy index return is much less correlated with Halliburton’s stock return, with a coefficient of only about 0.2. Both the energy and industry peer index coefficients are highly statistically significant, with each being many multiples of its estimated standard error. By contrast, the return on the engineering and construction index has essentially no association with Halliburton’s stock return and is statistically insignificant.

Table 2: Market Model Regression Estimates

We then use these market model coefficient estimates to calculate daily estimated excess returns for the six event dates excluded from estimation of the model. We calculated the contribution of each index to each date’s expected return by multiplying the index’s Table 2 coefficient estimate by the observed value of the index on the date in question. Then we summed up the three index-specific products just created and added the intercept (which is so low as to be effectively zero). The result is the event date expected return based on the market model, i.e., the variable plotted on the horizontal axis of Figure 1 and Figure 3. The excess return for each event date is then found by subtracting each date’s estimated expected return from its actual return. Table 3 reports the actual, estimated expected, and estimated excess returns for each of the six alleged corrective disclosure dates in the Halliburton litigation, sorted from most negative to least negative. The actual returns are all negative, indicating that Halliburton’s stock price dropped on each of the alleged corrective disclosure dates. On three of the dates, the estimated expected return was also negative, indicating that typical market factors would be expected to cause Halliburton’s stock price to fall, even in the absence of any unusual event. For the other three dates, market developments would have been expected to cause an increase in Halliburton’s stock price. This means the estimated excess returns on those dates will imply larger price drops than are reflected in the actual returns. Finally, the estimated excess return column in Table 3 shows that the estimated excess returns were negative on all six dates. Even on dates when Halliburton’s stock price would have been expected to fall based on market developments, it fell more than it would have been expected to.
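The calculation just described amounts to a weighted sum. The sketch below is illustrative only: the coefficients are the rounded magnitudes mentioned in the text (roughly 0.9, about 0.2, and essentially zero) rather than the exact Table 2 estimates, the intercept is set to zero, and the index returns and actual return for the event date are hypothetical.

```python
def expected_return(intercept, coefficients, index_returns):
    """Intercept plus the sum of each coefficient times that index's return."""
    return intercept + sum(coefficients[name] * index_returns[name]
                           for name in coefficients)

# Rounded coefficients taken from the prose, not the exact Table 2 values.
coefficients = {"industry_peers": 0.9, "energy": 0.2,
                "engineering_construction": 0.0}

# Hypothetical index returns on an event date, in percent.
index_returns = {"industry_peers": -2.0, "energy": -1.0,
                 "engineering_construction": 0.5}

expected = expected_return(0.0, coefficients, index_returns)
actual = -5.0                    # hypothetical actual return, in percent
excess = actual - expected       # excess return: actual minus expected
print(round(expected, 2), round(excess, 2))
```

In this hypothetical, market conditions alone would predict a 2% drop, so only the remaining 3 percentage points of the 5% decline count as excess return.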

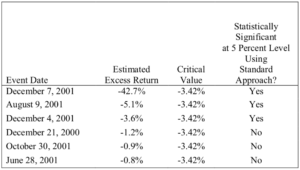

Table 3: Actual, Expected, and Excess Returns for Event Dates

The next step is to test whether these estimated excess returns are unusual enough to meet the court’s standard for statistical significance. For the moment, we adopt the standard assumption that Halliburton stock’s excess returns follow a normal distribution. Our Table 2 above reports that the root-mean-squared error for our Halliburton market model—which is an estimate of the standard deviation of excess returns—was 1.745%. Multiplying 1.96 and 1.745, we obtain a critical value of 3.42%.[172] In other words, in the absence of unusual events affecting Halliburton’s stock price and assuming normality, we can expect that 95% of Halliburton’s excess returns will take on values between ‒3.42% and 3.42%. For an alleged corrective disclosure date, excess returns must be negative to support the plaintiff’s theory, so a typical expert would determine that an event-date excess return drop of 3.42% or more is statistically significant.
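The decision rule in this paragraph can be written out as a short sketch. The root-mean-squared error of 1.745 and the 1.96 multiplier come from the text; the function name `significant_drop` and the excess returns passed to it are hypothetical and are not the Table 3 values.

```python
RMSE = 1.745                  # estimated standard deviation of excess returns, in percent
CRITICAL = 1.96 * RMSE        # about 3.42 percentage points

def significant_drop(excess_return_pct):
    """Standard approach for a corrective disclosure date: the excess
    return must be negative and at least CRITICAL in magnitude."""
    return excess_return_pct <= -CRITICAL

print(round(CRITICAL, 2))     # 3.42

# Hypothetical excess returns, in percent.
for excess in (-4.0, -2.0, 4.0):
    print(excess, significant_drop(excess))
```

Note that a 4% excess return in the positive direction is not flagged, even though its magnitude exceeds the critical value, because it has the wrong sign for an alleged corrective disclosure.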

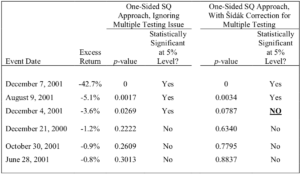

In the first column of Table 4, we again present the estimated excess returns from Table 3. The second column reports whether the estimated excess return is statistically significant at the 5% level based on the standard approach to testing described above. The event date estimated excess returns are statistically significant at the 5% level for December 7, 2001; August 9, 2001; and December 4, 2001; they are statistically insignificant at the 5% level for the other three dates.

Table 4: Standard Significance Testing for Event Dates (sorted by magnitude of estimated excess return)

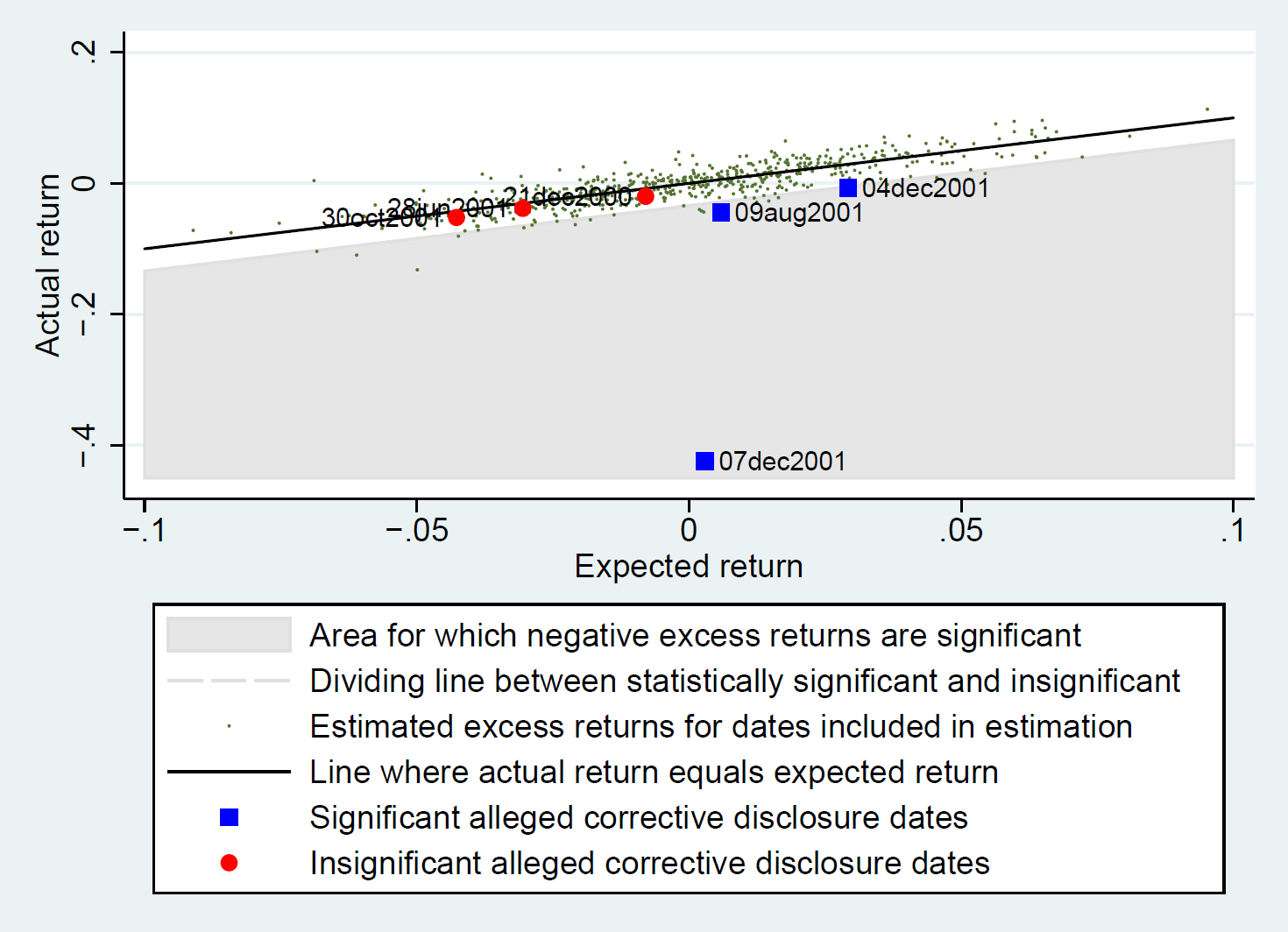

We can illustrate the standard approach by again using a graph that relates actual and expected returns. As in earlier figures, Figure 2 again plots the actual return on the vertical axis and the expected return on the horizontal axis (with the set of points where these variables are equal indicated using an upwardly sloped straight line). This figure also includes dots indicating the expected and actual return for each day in the estimation period—these are the dots that cluster around the upwardly sloped line.

Figure 2: Scatter Plot of Actual and Expected Returns for Alleged Corrective Disclosure Dates and for Observations in Estimation Period

In addition, the figure includes three larger circles and three larger squares. The circles indicate the alleged corrective disclosure dates for December 21, 2000; October 30, 2001; and June 28, 2001—the alleged corrective disclosure dates on which Table 4 tells us estimated excess returns were negative (below the upwardly sloped line) but not statistically significant according to the standard approach. The squares indicate the alleged corrective disclosure dates for which estimated excess returns were both negative and statistically significant at the 5% level. These are the three dates in the top three rows of Table 4—December 7, 2001; August 9, 2001; and December 4, 2001. We can tell that the price drops on these dates were statistically significant at the 5% level because they appear in the shaded region of the graph; as discussed in relation to Figure 3, infra, points in this region have statistically significant price drops at the 5% level according to the standard approach. In sum, our implementation of a standard event study shows price impact for three dates, and it fails to show such impact at the 5% level for the other three.

IV. Special Features of Securities Fraud Litigation and Their Implications for the Use of Event Studies

The validity of the standard approach to testing for statistical significance, at whatever significance level is chosen, relies importantly on four assumptions:

- Halliburton’s excess returns actually follow a normal distribution—that assumption is the source of the 1.96 multiplier for the standard deviation of Halliburton’s estimated excess returns in estimating the critical value.

- It is appropriate to use a multiplier that is derived by considering what would constitute an unusual excess return in either the positive or negative direction—i.e., an unusually large unexpected movement of the stock in either the direction of increase or the direction of decrease.

- It is appropriate to analyze each event date test in isolation without taking into account the fact that multiple tests (six in our Halliburton example) are being conducted.

- Under the null hypothesis, Halliburton’s excess returns have the same distribution on each date; under the first assumption (normality), this is equivalent to assuming that the standard deviation of Halliburton’s excess returns is the same on every date.

As it happens, each of these assumptions is false in the context of the Halliburton litigation. The court did take appropriate account of the falsity of the third assumption (involving multiple comparisons),[173] but it failed even to address the other three.
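The concern behind the third assumption can be illustrated with simple arithmetic. The sketch below computes the chance of at least one false finding of significance when several tests are run at the same Type I error rate. It assumes the tests are statistically independent, which is a simplification of ours, and the function name is hypothetical.

```python
def chance_of_any_false_positive(type_1_rate, n_tests):
    """Probability that at least one of n_tests rejects a true null
    hypothesis, assuming the tests are independent."""
    return 1 - (1 - type_1_rate) ** n_tests

# Six event dates, each tested at a 5% Type I error rate.
print(round(chance_of_any_false_positive(0.05, 6), 3))
```

Under the independence assumption, six tests at the 5% level carry roughly a 26% chance of at least one spurious finding, far above the 5% rate that applies to any single test.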

Violations of any of these assumptions will render the standard approach to testing for statistical significance unreliable. That is true even if these violations do not always cause the standard approach to yield incorrect conclusions—i.e., conclusions that differ from what reliable methods would yield—concerning statistical significance at the chosen significance level. Just as a stopped clock is right twice a day, an unreliable statistical method will yield the right answer sometimes.[174] But the law demands more—it demands a method that yields the right answer as often as asserted by those using the method.

In the remaining sections of this Part, we explain these four assumptions in more detail, and we show that they are unsustainable in the context of the Halliburton event study conducted in Part III.

A. The Inappropriateness of Two-Sided Tests

In a purely academic study, economic theory may not predict whether an event date excess return can be expected to be positive or negative. For example, an announced merger might be either good or bad for a firm’s market valuation. In such cases, statistical significance is appropriately tested by checking whether the estimated excess return is large in magnitude regardless of its sign. In other words, either a very large drop or a very large increase in the firm’s stock price constitutes evidence against the null hypothesis that the news had no impact on stock price. Such tests are known as “two-sided” tests of statistical significance since a large value of the excess return on either side of zero provides evidence against the null hypothesis.[175]

In event studies used in securities fraud litigation, by contrast, price must move in a specific direction to support the plaintiff’s case. For example, an unexpected corrective disclosure should cause the stock price to fall. Thus, tests of statistical significance based on event study results should be conducted in a “one-sided” way so that an estimated excess return is considered statistically significant only if it moves in the direction consistent with the allegations of the party using the study. The one-sided–two-sided distinction is one that courts and expert witnesses regularly miss, and it is an important one.

Figure 3 illustrates this point. As in Figure 1, the upwardly sloped line indicates the set of points where the actual and expected returns are equal. The shaded area in Figure 3 depicts the set of points where the actual return is far enough below the expected return—i.e., where the excess return is sufficiently negative—so that the excess return indicates a statistically significant price drop on the date in question.

Figure 3: Illustrating Statistical Significance of Excess Returns

Consider the points indicated by a circle and a square in Figure 3, which are equally far from the actual-equals-expected line but in opposite directions. The circle depicts a point that has a positive excess return. Even though the circle is sufficiently far away from the line, the point has the wrong sign for an alleged corrective disclosure date, and no court would consider such evidence a basis on which to find for the plaintiff. The square, in contrast, depicts an excess return that is both negative and sufficiently far below the expected return such that we conclude there was a statistically significant price drop at the chosen significance level—as would be necessary for a plaintiff alleging a corrective disclosure. Finally, consider the point indicated by a triangle. This point is in the direction consistent with the plaintiff’s allegations—a negative excess return for an alleged corrective disclosure—but at this point the actual and expected returns are too close for the excess return to be statistically significant at the chosen level. For an alleged corrective disclosure date, only the square would provide statistically significant evidence.

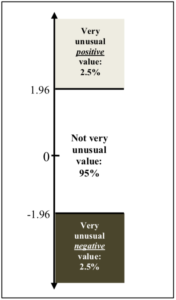

If no litigant would present evidence of a statistically significant price movement in the wrong direction, why does the two-sided approach matter? The reason is that the practical effect of this approach is to reduce the Type I error rate for the tests used in event studies from the stated level of 5% to half that size, i.e., to 2.5%. To see why, consider Figure 4. Higher points in the figure correspond to larger and more positive estimated excess returns. The shaded regions correspond to the sets of excess returns that are further from zero than the critical value of 1.96 standard deviations used by experts who deploy the two-sided approach. For each shaded region, the probability that a randomly chosen excess return will wind up in that region is 2.5%. Thus the probability an excess return will be in either region—and thus that the null hypothesis would be rejected if event study experts followed usual two-sided practice—is 5% in total, which is the desired Type I error rate.

Figure 4: The Standard Approach to Testing on an Alleged Corrective Disclosure Date with a Type I Error Rate of 5% (Measured in Standard Deviation Units)

However, on an alleged corrective disclosure date, the plaintiff’s allegation is that the price fell due to the revelation of earlier fraud. As noted, a finding that the date had an unusually large and positive excess return would certainly not be credited to the plaintiff by the court. That is why only estimated excess returns that are large and negative are treated as statistically significant for proving price impact on an alleged corrective disclosure date. In other words, only estimated excess returns that are in the bottom shaded region in Figure 4 would meet the plaintiff’s burden. As we have seen, this region contains 2.5% of the probability when there is no actual effect of the news in question.[176] This means that a finding of statistical significance would occur only 2.5% of the time when the null hypothesis is true, or half as frequently as the 5% rate that courts and experts say they are attempting to apply.[177]
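The halving of the Type I error rate can be checked numerically. The following is a minimal sketch, assuming (as the standard approach does) that excess returns measured in standard deviation units follow a standard normal distribution; the variable names are ours and purely illustrative.

```python
from statistics import NormalDist

# Excess returns measured in standard deviation units, normal under the null.
std_normal = NormalDist(mu=0.0, sigma=1.0)

TWO_SIDED_CRITICAL = 1.96  # critical value used under the two-sided approach

# Probability of landing in each shaded region of Figure 4 when the news has no effect.
lower_tail = std_normal.cdf(-TWO_SIDED_CRITICAL)
upper_tail = 1.0 - std_normal.cdf(TWO_SIDED_CRITICAL)

# Nominal Type I error rate: a return in either tail counts as a rejection.
two_sided_rate = lower_tail + upper_tail

# Effective rate on an alleged corrective disclosure date: only the lower tail counts.
effective_rate = lower_tail

print(f"lower tail:     {lower_tail:.4f}")
print(f"upper tail:     {upper_tail:.4f}")
print(f"two-sided rate: {two_sided_rate:.4f}")
print(f"effective rate: {effective_rate:.4f}")
```

The two tails together account for roughly 5%, but the lower tail alone, which is the only one that can help a plaintiff on a corrective disclosure date, accounts for roughly 2.5%.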

Although a reduction in Type I errors is desirable with all else held equal, as we discussed in subpart II(B), supra, there is a trade-off between Type I and Type II error rates. As a result of this trade-off, the Type II error rate of a test rises—possibly dramatically—as the Type I error rate is reduced. This means that using a Type I error rate of 2.5% in an event study induces many more false negatives than using a Type I error rate of 5%.[178]
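To give a rough sense of the trade-off, the sketch below compares Type II error rates at the two significance levels. It assumes normally distributed excess returns and a hypothetical true price impact of two standard deviations; that effect size is our illustrative assumption and is not drawn from the Halliburton data.

```python
from statistics import NormalDist

std_normal = NormalDist()

def type_ii_rate(critical_value: float, true_effect: float) -> float:
    """Probability of failing to find a significant drop when the excess return
    is Normal(true_effect, 1) and we reject only below critical_value."""
    power = NormalDist(mu=true_effect, sigma=1.0).cdf(critical_value)
    return 1.0 - power

# Hypothetical true price impact, in standard deviation units (an assumption).
HYPOTHETICAL_EFFECT = -2.0

cv_at_2_5_percent = std_normal.inv_cdf(0.025)  # about -1.96
cv_at_5_percent = std_normal.inv_cdf(0.05)     # about -1.645

miss_rate_2_5 = type_ii_rate(cv_at_2_5_percent, HYPOTHETICAL_EFFECT)
miss_rate_5 = type_ii_rate(cv_at_5_percent, HYPOTHETICAL_EFFECT)

print(f"Type II rate at 2.5% level: {miss_rate_2_5:.3f}")
print(f"Type II rate at 5% level:   {miss_rate_5:.3f}")
```

Under these assumptions the stricter cutoff misses a real two-standard-deviation drop noticeably more often than the 5% cutoff does; the size of the gap depends on the assumed effect.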

This mistake is easily corrected. Rather than base the critical value on the two-sided testing approach, one simply uses a one-sided critical value. In terms of Figure 4, that means choosing the critical value so that a randomly chosen excess return would turn up in the bottom shaded region 5% of the time, given that the news of interest actually had no impact. Still maintaining the assumption that excess returns are normally distributed, the relevant critical value is –1.645 times the standard deviation of the stock’s excess returns.[179] In our application, this yields a critical value for an event date excess return of –2.87%; any excess return more negative than this value will yield a finding of statistical significance.[180] This is a considerably less demanding critical value than the –3.42% based on the two-sided approach. Consequently, switching to the one-sided test will correct an erroneous finding of no statistical significance at the 5% level whenever the estimated excess return is between –3.42% and –2.87%.
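The arithmetic behind the two critical values can be sketched as follows. The standard deviation of excess returns is not restated in this passage, so the sketch backs it out from the reported two-sided critical value of –3.42%; that inference, along with the helper name `changes_outcome`, is our assumption for illustration.

```python
from statistics import NormalDist

std_normal = NormalDist()

# Two-sided critical value reported in the text, in percent.
REPORTED_TWO_SIDED_CV = -3.42

# Assumption: infer the standard deviation of excess returns (in percent) from
# the reported two-sided critical value, which sits 1.96 standard deviations below zero.
z_two_sided = std_normal.inv_cdf(0.975)  # about 1.96
implied_sd = abs(REPORTED_TWO_SIDED_CV) / z_two_sided

# One-sided critical value: the 5th percentile of the normal distribution.
z_one_sided = std_normal.inv_cdf(0.05)   # about -1.645
one_sided_cv = z_one_sided * implied_sd
two_sided_cv = -z_two_sided * implied_sd

def changes_outcome(excess_return_pct: float) -> bool:
    """True when a drop is significant under the one-sided 5% test
    but not under the two-sided cutoff."""
    return two_sided_cv <= excess_return_pct < one_sided_cv

print(f"implied standard deviation: {implied_sd:.3f}%")
print(f"one-sided critical value:   {one_sided_cv:.2f}%")
print(f"two-sided critical value:   {two_sided_cv:.2f}%")
print(changes_outcome(-3.0))  # a hypothetical excess return between the two cutoffs
```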

As it happens, none of the estimated excess returns in Table 4 has a value in this range, so correcting this error does not affect any of the statistical significance determinations we made in Part III for Halliburton. But that is just happenstance; had any of the estimated excess returns fallen in this range, our statistical significance conclusion would have changed. Further, Halliburton’s median daily market value was $17.6 billion over the estimation period, so the range of estimated excess returns that would have led to a switch (i.e., –3.42% to –2.87%) corresponds to a range of Halliburton market value of nearly $100 million. In other words, using the erroneous approach would, in the case of Halliburton, require a market value drop of almost $100 million more than should be required to characterize the drop as highly unusual.
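The dollar figure follows from simple arithmetic on the numbers reported above, which the short sketch below reproduces; only the variable names are ours.

```python
# Figures reported in the text.
MEDIAN_MARKET_VALUE = 17.6e9  # dollars, median daily market value
TWO_SIDED_CV_PCT = -3.42      # two-sided critical value, in percent
ONE_SIDED_CV_PCT = -2.87      # one-sided critical value, in percent

# Width of the range in which the two approaches disagree, in percentage points.
gap_pct_points = ONE_SIDED_CV_PCT - TWO_SIDED_CV_PCT

# Convert the percentage-point gap into dollars of market value.
dollar_gap = MEDIAN_MARKET_VALUE * gap_pct_points / 100.0

print(f"gap: {gap_pct_points:.2f} percentage points")
print(f"market value equivalent: ${dollar_gap / 1e6:.1f} million")
```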

B. Non-Normality in Excess Returns

Recall that, as discussed above, we characterize an excess return as highly unusual by looking at the distribution of excess returns on days when there is no news. The standard event study assumes that this distribution is normal.[181] There is no good reason, however, to assume that excess stock returns are actually normally distributed, and there is considerable evidence against that assumption.[182] Stocks’ excess returns often exhibit empirical evidence of skewness, “fat tails,” or both; and neither of these features would occur if excess returns were actually normal.[183]

In the case of Halliburton, we found strong evidence that the excess returns distribution was non-normal over the class period. Summary statistics indicate that Halliburton’s excess returns exhibit negative skew: they are more likely to have positive values than negative ones. Further, the distribution has fat tails, with more values far from the distribution’s center than would be the case if excess returns were normally distributed. Formal statistical tests reinforce this story: Halliburton’s estimated excess returns systematically fail to follow a normal distribution over the estimation period.[184]

We illustrate the role of the normality assumption in Figure 5, which plots various probability density functions for excess returns. Roughly speaking, a probability density function tells us the frequency with which a given value of the excess return is observed. The probability of observing an excess return value less than, say, x is the area between the horizontal axis and the probability density function for all values less than x. The curve plotted with a solid line in the top part of Figure 5 is the familiar density function for a normal distribution (also known colloquially as a bell curve) with standard deviation equal to one. To the left of the point where the excess return is –1.645, the shaded area equals 0.05; this reflects the fact that a normal random variable will take on a value less than –1.645 standard deviations 5% of the time. To put it differently, the 5th percentile of the standard normal distribution is –1.645; that is why we use this figure for the critical value to test for a price drop at a significance level of 5% when excess returns are normally distributed.

The curve plotted with a dashed line in the top part of Figure 5 is the probability density function for a different distribution. Compared to the standard normal distribution, the left-tail percentiles of this second distribution are compressed toward its center. That means fewer than 5% of this distribution’s excess returns will take on a value less than –1.645; the 5th percentile of this distribution is closer to zero, equal to roughly –1.36. Thus, when the distribution of excess returns is compressed toward zero relative to the normal distribution, we must use a more forgiving critical value—one closer to zero—to test for a significant price drop.

The bottom graph in Figure 5 again plots the standard normal distribution’s probability density function with a solid line. In contrast to the top graph, the curve plotted with a dashed line now depicts a distribution of excess returns for which left-tail percentiles are splayed out compared to the normal distribution. The 5th percentile is now –2.35, so that we must use a more demanding critical value—one further from zero—to test for significance.

As this discussion illustrates, the assumption that excess returns are normally distributed is not innocuous: if the assumption is wrong, an event study analyst might use a very different critical value from the correct one.
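The sensitivity of the 5th percentile to the shape of the distribution can be seen in a small simulation. The sketch below is illustrative only: it draws from a Student t distribution with three degrees of freedom, which is our assumption and is not claimed to be the distribution plotted in Figure 5, and it uses a simple order-statistic convention for the percentile.

```python
import math
import random

def empirical_percentile(values, level):
    """Return the order statistic at the given lower-tail level."""
    ordered = sorted(values)
    index = max(0, math.ceil(level * len(ordered)) - 1)
    return ordered[index]

def draw_student_t(rng, df):
    """One Student t draw built from normals: Z / sqrt(chi-square / df)."""
    z = rng.gauss(0.0, 1.0)
    chi_square = sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(df))
    return z / math.sqrt(chi_square / df)

rng = random.Random(12345)  # fixed seed so the simulation is reproducible
N_DRAWS = 100_000
DF = 3                      # assumed degrees of freedom (illustrative)

normal_draws = [rng.gauss(0.0, 1.0) for _ in range(N_DRAWS)]
fat_tailed_draws = [draw_student_t(rng, DF) for _ in range(N_DRAWS)]

# Rescale the fat-tailed draws to unit variance: Var(t) = df / (df - 2) for df > 2.
scale = math.sqrt(DF / (DF - 2))
unit_variance_draws = [x / scale for x in fat_tailed_draws]

p5_normal = empirical_percentile(normal_draws, 0.05)
p5_fat_tailed = empirical_percentile(fat_tailed_draws, 0.05)
p5_unit_variance = empirical_percentile(unit_variance_draws, 0.05)

print(f"normal:                     {p5_normal:.2f}")
print(f"fat-tailed (unscaled):      {p5_fat_tailed:.2f}")
print(f"fat-tailed (unit variance): {p5_unit_variance:.2f}")
```

In this simulation the same 5% significance level maps to noticeably different critical values depending on the assumed distribution, some closer to zero than –1.645 and some further from it.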

It might seem a daunting task to determine the true distribution of the excess return. However, Gelbach, Helland, and Klick (GHK) show that under the null hypothesis that nothing unusual happened on the event date, the estimated excess return for a single event date will have the same statistical properties as the actual excess return for that date.[185] This result provides a simple correction to the normality assumption: instead of using the features of the normal distribution to determine the critical value for statistical significance testing, we use the 5th percentile of the distribution of excess returns estimated using our market model.[186] GHK describe this percentile approach as the “SQ test” since the approach relies for its theoretical justification on the branch of theoretical statistics that concerns the behavior of sample quantiles, which, for our purposes, are simply observed percentiles.[187]

Figure 5: Illustrating Non-Normality

For a statistical significance test with a significance level of 5%, the SQ test entails using a critical value equal to the 5th percentile of the estimated excess returns distribution among non-event dates. Among the 593 non-event dates in our class period estimation sample, the 5th percentile is –3.08%.[188] According to GHK’s SQ test, then, this is the value we should use as the critical value for testing whether event date excess returns are statistically significant. Thus, when we drop the normality assumption and instead allow the distribution of estimated excess returns to drive our choice of critical values directly, we conclude that an alleged corrective disclosure date’s estimated excess return is statistically significant if it is less than –3.08%.
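The mechanics of the percentile approach can be sketched in a few lines. The excess returns below are simulated placeholders, not Halliburton’s estimated excess returns, and the function names and the particular order-statistic convention for the 5th percentile are our assumptions; other percentile conventions would give slightly different cutoffs in small samples.

```python
import math
import random

def sq_critical_value(non_event_excess_returns, level=0.05):
    """Critical value for a price-drop test: the lower-tail sample quantile
    of estimated excess returns on non-event dates."""
    ordered = sorted(non_event_excess_returns)
    index = max(0, math.ceil(level * len(ordered)) - 1)
    return ordered[index]

def is_significant_drop(event_excess_return, non_event_excess_returns, level=0.05):
    """True when the event date excess return falls below the sample-quantile cutoff."""
    return event_excess_return < sq_critical_value(non_event_excess_returns, level)

# Simulated placeholder data: 593 non-event excess returns, in percent.
rng = random.Random(2024)
simulated_returns = [rng.gauss(0.0, 1.75) for _ in range(593)]

critical_value = sq_critical_value(simulated_returns)
HYPOTHETICAL_EVENT_RETURN = -3.5  # percent, an illustrative value

print(f"critical value from simulated data: {critical_value:.2f}%")
print(is_significant_drop(HYPOTHETICAL_EVENT_RETURN, simulated_returns))
```

No distributional assumption enters the calculation: the cutoff is read directly off the sorted non-event excess returns.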

Note that this critical value is more negative than the value of –2.87% found in subpart IV(A), supra, where we maintained the assumption of normality. Thus, relaxing the normality assumption has the effect of making the standard for a finding of statistical significance about 0.21 percentage points more demanding.[189] Although this correction does not affect our determination as to any of the six event dates in our Halliburton event study, it is nonetheless potentially quite important because 0.21 percentage points corresponds to a range of Halliburton’s market value of nearly $40 million.

As we discuss in our online Appendix A, the SQ test has both statistical and operational characteristics that make it very desirable. First, it involves estimating the exact same market model as the standard approach does. It requires only the trivial additional step of sorting the estimated excess return values for the class period in order to find the critical value, something that statistical software packages can do in one easy step in any case. The operational demands of using the SQ test are thus minor, and we think experts and courts should adopt it. Second, the SQ test is not only appropriate in many instances where the normality assumption fails but also always appropriate when the normality assumption is valid. Thus there is no cost to using the SQ test, by comparison to the standard approach of assuming normality.

C. Multiple Event Dates of Interest

The approaches to statistical significance testing discussed above were all designed for situations involving the analysis of a single event date. As we have seen, however, there are six alleged corrective disclosure dates at issue in the Halliburton litigation. The distinction is important.

The more tests one does while using the same critical value, the more likely it is that at least one test will yield a finding of statistical significance at the stated significance level even when there truly was no price impact. More event dates means more bites at the same apple, and the odds the apple will be eaten up increase with the number of bites. At the same time, however, securities litigation differs from the example in that multiple events do not always relate to the same fraud. Corrective disclosures relating to different misstatements are different pieces of fruit. We discuss the multiple comparison adjustment first, in section 1, and then, in section 2, we explain an approach for determining when such an adjustment is warranted. In section 3, we address the very different statistical problem raised by a situation in which a plaintiff must prove both the existence of price inflation on the date of an alleged misrepresentation and the existence of a price drop on the date of an alleged corrective disclosure.[190]

1. When the question of interest is whether any disclosure had an unusual effect.—In our event study analysis so far, we have tested for statistical significance as if each of the six event dates’ estimated excess returns constituted the only one being tested. As mentioned above, this means the probability of finding at least one event date’s estimated excess return significant will be considerably greater than the desired Type I error rate of 5%. The defendants raised the multiple comparison issue in the Halliburton litigation, and it played a substantial role in the court’s analysis.[191]
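The size of the problem can be illustrated with a short calculation. The sketch below assumes that the tests on the different event dates are statistically independent; that simplification is ours and is adopted only to make the arithmetic transparent.

```python
def familywise_error_rate(per_test_level: float, n_tests: int) -> float:
    """Probability of at least one false finding of significance across
    n_tests independent tests, each run at per_test_level."""
    return 1.0 - (1.0 - per_test_level) ** n_tests

PER_TEST_LEVEL = 0.05  # significance level applied to each event date
N_EVENT_DATES = 6      # alleged corrective disclosure dates in the Halliburton litigation

for n in range(1, N_EVENT_DATES + 1):
    rate = familywise_error_rate(PER_TEST_LEVEL, n)
    print(f"{n} date(s): {rate:.1%}")
```

Under the independence assumption, testing six dates at the 5% level produces at least one spurious finding of significance roughly a quarter of the time.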