Does Lawyering Matter? Predicting Judicial Decisions from Legal Briefs, and What That Means for Access to Justice

This study uses linguistic analysis and machine-learning techniques to predict summary judgment outcomes from the text of the briefs filed by parties in a matter. We test the predictive power of textual characteristics, stylistic features, and citation usage, and we find that citations to precedent—their frequency, their patterns, and their popularity in other briefs—are the most predictive of a summary judgment win. This finding suggests that good lawyering may boil down to good legal research. However, good legal research is expensive, and the primacy of citations in our models raises concerns about access to justice. Here, our citation-based models also suggest promising solutions. We propose a freely available, computationally enabled citation identification and brief bank tool, which would extend to all litigants the benefits of good lawyering and open up access to justice.

Introduction

Lawyers matter. Repeated studies have shown that represented parties achieve better civil litigation outcomes than their pro se counterparts, leading some to conclude that there can be no “meaningful access to justice” without access to lawyers.[1] In criminal cases, the Founders deemed a defendant’s right to counsel so important that they enshrined it in the Sixth Amendment, recognizing the need “to save innocent defendants from erroneous convictions.”[2] And in a decidedly more contemporary example, the development of artificially intelligent systems that can fight traffic tickets,[3] write and review contracts,[4] and provide legal advice,[5] has triggered a wave of dire predictions about the costs of a lawyerless justice system.[6]

But specifically, how and why lawyers matter is less clear.[7] Some scholars suggest that a lawyer’s mere presence in a case—regardless of his or her attributes, experience, or skill—signals to adversaries and the court that the case is meritorious.[8] Others argue that lawyers’ attributes, particularly their race, ethnicity, gender, and class, send even louder signals about their clients’ power and entitlement to a win.[9]

A different strand of the literature isolates and studies various aspects of lawyering, as distinct from the mere fact of a lawyer’s appearance in a case. A lawyer’s knowledge and use of various evidentiary procedures,[10] for example, as well as his or her previous experience[11] and “relational expertise” in a particular court,[12] have been shown to be positively correlated with successful client outcomes.

We add to this body of research by studying another aspect of lawyering: lawyers’ written advocacy to the courts in the form of legal briefs. The assumption that lawyers’ research and writing matters is legal education bedrock. Law students learn research and writing basics in their first year of law school and refine those skills throughout their legal education. Lawyers—and their clients—spend thousands of dollars on legal treatises and access to legal research databases such as LexisNexis and Westlaw. Continuing legal education courses serve to keep members of the bar up to date on recent case law developments. Collectively, these activities train lawyers in a set of norms and practices that are assumed to be the most effective for client advocacy. Yet, the effect of such training can be difficult to quantify, and the efficacy of conventional wisdom difficult to test.

In the present study, we use linguistic analysis and machine-learning techniques to mine the text of plaintiffs’ and defendants’ summary judgment briefs filed in 444 employment law cases in federal court. Through this analysis, we attempt to quantify the role that lawyers’ research and writing plays in influencing litigation outcomes. With respect to writing, we use textual characteristics, such as sentence length and brief length, along with stylistic features, such as the use of intensifiers (e.g., “obviously,” “clearly”), to predict the outcome of a motion for summary judgment. To measure the role of legal research, we test whether the number of citations, number of string citations, presence of specific important citations, and networks of citations across briefs can predict a judge’s decision. To establish a baseline for these experiments, we also review the body of legal research and writing (LRW) literature and form a set of hypotheses about which legal research and writing characteristics we would expect to be most and least effective.

Our results confirm some, though not all, of the conventional wisdom. The results generally support the advice from LRW scholars to convey the weight of the legal authority through comprehensive citations but to avoid lengthy string cites. Consistent with received wisdom, the results suggest that “positive intensifiers”—words like “precisely” or “fatal”—generally helped a brief. But contrary to received wisdom, so did “negative intensifiers” often associated with poor writing, such as “clearly” or “obviously”—although the effect was much weaker. And while LRW experts generally caution against excessively long sentences, our results found that average sentence length was not predictive of judicial outcomes.

However, the strongest results involved the citations themselves, suggesting that legal research plays a central role in brief writing. The presence of citations to particular cases in a brief boosted the performance of our predictive models. We also found that citing to cases commonly cited by other briefs in the sample tended to predict success, while citing idiosyncratic cases was a losing strategy. The most predictive model we tested involved grouping briefs according to common citations using network or graph analysis. We identified “neighborhoods” of winning briefs and could estimate a brief’s success based on the success of its case-citation neighbors. Collectively, these results suggest that legal research is one of the most important tasks a lawyer performs in motion practice: finding the right citations, in the right numbers, and presenting them effectively (rather than merely stringing them together) to the court.

While this result may seem unremarkable at first glance, citations’ importance in predicting summary judgment outcomes suggests a worrisome, yet depressingly familiar, story about access to justice. Resources matter, or more specifically, well-resourced lawyering matters. Choosing to include many citations, and choosing the right citations to include, requires access to Westlaw, LexisNexis, or other costly research databases. Even the Public Access to Court Electronic Records (PACER) system, the federal courts’ document access portal, offers only limited search capability and charges per page to download legal documents.[13] Other sources, like Google Scholar, offer free access to court decisions, but coverage is not comprehensive.[14] Further, research is time-intensive, and only well-resourced clients may be able to afford hours upon hours of lawyer time researching a brief. Lawyers at large firms also have access to extensive internal brief banks unavailable to self-represented parties and practitioners in legal aid organizations, solo practice, or small firms.

Nevertheless, our study also suggests a path to level the playing field. If access to court documents—both the parties’ briefs and the judges’ decisions—were universal and free, then methods like ours could be used to create an open access, computationally enabled brief bank or citation recommendation tool. Using network-analysis methods like the ones employed in this Essay, the bank could enable practitioners to “locate” their briefs in relation to sets of winning briefs in the same legal subfield and discover clusters of winning citations.

Such a tool would be particularly valuable for resource-strapped lawyers filing or responding to motions for summary judgment in areas with complex jurisprudence, bringing down the cost of legal representation and opening up access to justice. It could also serve as a decision-support tool for judges and their clerks, who could check whether parties omitted relevant case law commonly cited in other briefs. Lastly, tools of this sort could substantially reduce the transaction costs of motion practice, increasing the efficiency of the legal system.[15]

This Essay proceeds as follows: Part I provides a review of the extant text-analytics literature and an overview of the theoretical model underlying our approach. Part II presents an overview of our methodology, followed by the results in Part III. Finally, Part IV contextualizes the results, addresses access-to-justice implications, and describes our proposed computationally enabled brief bank solution.

I. Literature Review

This project falls within an emerging field of study known as computational legal studies, or legal analytics. Drawing from a variety of fields, including computational linguistics, computational social sciences, natural-language processing, computer science, and data science, computational legal scholars use advances in computing power and methods to analyze large, unstructured bodies of text to detect patterns and derive insight.

Text-analytic techniques have been applied to a wide variety of texts,[16] including legal documents. For example, law professor Nina Varsava examined judges’ writing style in a large set of opinions and found—contrary to conventional writing wisdom—that lengthy opinions written in a formal style were more likely to be cited by other judges than shorter, more readable opinions.[17]

Recent research has shown that decisions set forth in published opinions can often be predicted by machine-learning models trained on the texts of the statements of facts within those opinions.[18] However, critics of this work have observed that fact statements in published opinions are typically highly selective summaries of the original case record, written by the decision-makers themselves and tailored for consistency with the decision. Indeed, similar studies using documents drafted by self-represented litigants revealed that such documents are poor predictors of judicial outcomes.[19]

With respect to citations in particular, scholars have studied patterns in courts’ citations to precedent, tracing the ways in which citations “travel” across jurisdictions and years and identifying the most influential citation sets. The main focus of this work has been the U.S. Supreme Court’s and the U.S. Circuit Courts of Appeals’ use of and citation to Supreme Court decisions.[20] For example, Ian Carmichael and his coauthors used network analysis to discover that the Supreme Court tends to favor recent over older citations, and—perhaps contrary to intuition—shows no clear preference for citing unanimous opinions over those with concurrences and dissents.[21]

Text analytics can also serve as a window into lawyering practices as well as the underlying strategies of legal counsel. Political scientist Jessica Schoenherr constructed dictionaries of positive, neutral, and negative words that lawyers use in citing cases to analyze how lawyers use these cases in Supreme Court briefs.[22] Another study analyzed 318 closing arguments in tobacco litigation and found that tobacco companies made frequent reference to the plaintiff’s “decision” not to “quit smoking” despite “warnings” and “risks.”[23] Still another study examined terms of service contracts for “gig economy” companies and found that companies at higher risk of being sued for contract misclassification were more likely to include provisions intended to mitigate misclassification risk.[24] In other words, the presence of risk-mitigating language revealed the drafting lawyer’s underlying—and undisclosed—concern about litigation.

Even the length of text can be revealing.[25] In an analysis of internal corporate email at Enron, Eric Gilbert found that certain phrases and words tended to be associated with writing to subordinates, such as “have you been” or “I hope you.”[26] Other phrases were associated with writing to superiors, such as “attach,” and “thought you would.”[27] Psychology researcher James Pennebaker, who has written many influential articles and books on language use and social behavior, likewise found that those with high status in a group are less likely to use the words “I,” “me,” and “my” and more likely to use “we” or “you.”[28]

While legal scholars have devoted substantial attention to analyzing judicial opinions using text analytics—and to some extent oral argumentation—little attention has been paid to the text of legal briefs, where much of civil legal argumentation occurs. This is likely because of briefs’ relative inaccessibility, particularly in bulk. Major commercial legal research services like Westlaw, LexisNexis, and Bloomberg Law, to which most law faculty and students have free access, require each brief to be downloaded individually; the federal courts’ PACER system also requires individual, piecemeal downloads and charges ten cents per page.

Due to these barriers to accessibility, our study chose only one area of law—employment—and used law students to search for and download a random sample of all summary judgment decisions issued by U.S. district courts and the associated parties’ briefs in a ten-year period, 2007–2018. We then applied a variety of text analytics techniques to the brief-opinion sets for the purpose of predicting the outcome of the summary judgment motions.

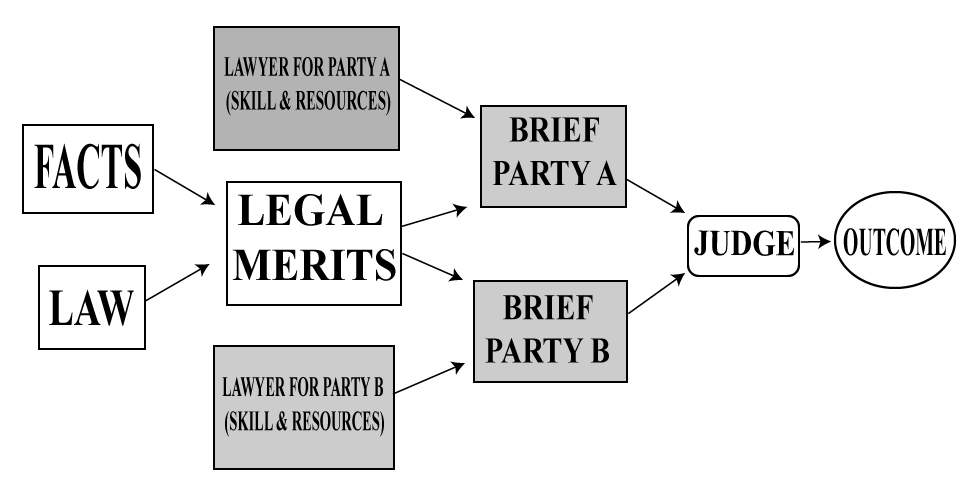

Our approach draws upon the theoretical model shown in Figure 1. The model illustrates the range of factors that may influence outcomes in motion practice. A judge’s decision might be a function of the underlying merits of the case, as presented by the lawyers in their briefing (and to a lesser extent, in oral arguments). Our model also assumes that the outcome depends on the skill and resources of the lawyers who prepare the briefs. A skilled lawyer with time and access to legal databases, treatises, or brief banks containing relevant precedent (depicted in the model as “resources”), might enhance a client’s prospects through skillful argumentation, sufficient citations to legal precedent, useful portrayal and contextualizing of the legal precedent, or characterization of the facts in a manner that favors their client. Conversely, a lawyer who is inexperienced, hurried, a bad writer, or one with very limited access to legal databases and other resources, might impair their client’s chances through the brief they file with the court.

Figure 1: Theoretical Model Illustrating the Role of Lawyers, Judges,

and Underlying Merits in Legal Outcomes

The theoretical model further reveals that it is difficult to disentangle legal merits from lawyering skills and resources through the analysis of legal texts. The language contained in a brief typically reflects both the underlying merits of the client’s case and whatever the lawyer adds or detracts from those merits (if anything at all). For example, the presence of many citations could signal strong legal merits, but it also could be attributable to the resources available to that lawyer, such as the luxury of time to conduct legal research or the availability of a large bank of similar briefs drafted by other lawyers at the firm.

The theoretical model also reveals the limits of our textual approach. The outcome—contained in an opinion written by the judge—reflects a combination of the judge’s judicial approach,[29] the underlying merits (revealed through the parties’ briefs), and whatever the lawyers add or detract through their briefs. However, our dataset does not include variables to account for a judge’s particular approach. Our model also does not account for structural factors that can affect the outcome, such as implicit or overt discrimination, access to legal counsel, or laws that systemically disfavor certain litigants or claims.

Our textual approach is further limited with respect to evaluating the efficacy of received wisdom with respect to legal research and writing. Most advice from legal research and writing scholars does not neatly translate into variables that can be measured through text analytics. For example, LRW scholars provide guidance about structuring the document as a whole, as well as the sequence of argumentation even within paragraphs (the familiar “IRAC” or “CREAC” construction of introduction, rule, application, and conclusion).[30] These dimensions of legal writing were too subtle to analyze using our methodology.

We were, however, able to test several lessons about effective research and writing mined from the LRW literature, which are summarized alongside the results section in Part III.

II. Methodology

A. Corpus

The Summary Judgment Corpus (SJC) that is the subject of our study consists of a random subset of cases involving summary judgment motions in 864 federal employment cases for the years 2007–2018.[31] Our team gathered the briefs and opinions via Bloomberg Law, which provides access to court documents via the federal courts’ PACER system.[32] Due to the resource-intensive nature of the document-gathering process, we selected only employment law cases (Tippett and Alexander’s substantive area of expertise). We identified these cases by using the following PACER “Nature of Suit” codes: “Civil Rights—employment,” “Labor—Fair Labor Standards Act” (FLSA), and “Labor—Family and Medical Leave” (FMLA).[33]

We chose to study summary judgment briefs and opinions because of their rich factual and legal content, and because the parties at this stage of litigation are incentivized to present their most effective, most thorough, and most skillfully argued positions. Summary judgment is a pivotal stage in a lawsuit, where a judge can dispose of a case in its entirety without trial.[34] Thus, summary judgment motions and their supporting briefs carry high stakes for litigants. One would, therefore, expect summary judgment research and writing—on both the moving and opposing sides—to be the best that the parties’ lawyers have to offer.

To assemble and prepare the SJC, a team of law students downloaded the target briefs and opinions, reviewed each opinion, and coded the outcome of the motion as granted in whole (win), denied in whole (loss), or granted in part and denied in part (partial). The “win” was characterized relative to the moving party, rather than to the plaintiff or defendant.[35]

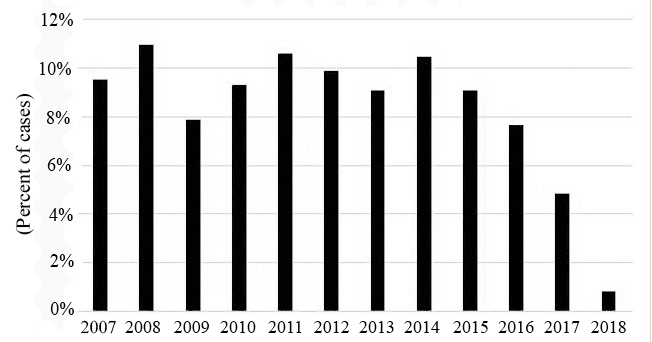

The charts and tables below show the distribution of the 864 cases by year, “Nature of Suit” code, and judicial district, of which there are 94. Eighty-five districts are represented in the data set.

Figure 2: Distribution of Cases by Year

Table 1: Cases by Nature of Suit

| Nature of Suit | Percent of Cases in Data Set |

| Civil Rights – employment | 84% |

| FLSA | 12% |

| FMLA | 4% |

The experiments described here were limited to the 444 cases that included at least an initial brief and an opposition brief (including reply and surreply briefs, if any),[36] and in which the motion for summary judgment was either granted in full or denied in full. Motions that were granted in part and denied in part were excluded, as were cases in which the court ruled on cross-motions within a single opinion. While we could have incorporated these more complex decisions[37] into the machine-learning model, the output would have been more difficult to interpret. We, therefore, organized outcomes according to a binary “win/loss” assumption.

The exclusion of partial wins from the model alters the overall success rate of plaintiffs in summary judgment filings. Plaintiffs rarely file summary judgment motions, and when they do, they tend to target specific causes of action rather than the whole case. This is because the procedural posture of summary judgment strongly favors defendants.

Consider, for example, a religious harassment case under Title VII of the Civil Rights Act of 1964. For a plaintiff to prevail at trial, they would need to persuade a jury to believe their account of the facts as to each element of the case—that they suffered harassment based on their religion that was so severe or pervasive as to alter the terms and conditions of their employment.[38] However, for the plaintiff to prevail on summary judgment, they would need to show that the facts are so overwhelming as to be undisputed as to each element—in other words, that the employer has no facts to dispute the severity or pervasiveness of their harassment, nor the religious motivation for the harassment. If the plaintiff is asserting multiple causes of action, such as a separate claim for retaliatory firing in addition to harassment, they would need to show that there is no factual dispute as to each element of each cause of action in order win the entire case on summary judgment. Consequently, plaintiffs tend not to affirmatively move for summary judgment as to their entire case and may instead seek summary judgment on a single issue or cause of action.

By contrast, the defendant has a much easier burden when moving for summary judgment—the defendant must merely show that the facts are undisputed in the defendant’s favor as to a single element of the plaintiff’s claim. Because the plaintiff ultimately bears the burden of proof as to each element, a fatal defect in any one element of a cause of action justifies a summary judgment ruling in the defendant’s favor on that entire claim. For example, if the harassing conduct that the plaintiff alleges is insufficiently severe or pervasive to meet the legal standard for harassment based on similar cases, the court would grant summary judgment in the defendant’s favor on the harassment claim. Where the defendant is able to successfully challenge the sufficiency of the evidence as to at least one element for each of the plaintiff’s cause(s) of action, the defendant will prevail on summary judgment as to the entire case.

A corpus that excludes partial wins also tends to underestimate plaintiffs’ success at the summary judgment stage because a partial denial of a summary judgment motion brought by the defendant is a partial victory for the plaintiff. This means that the plaintiff will be able to proceed to trial, even with fewer causes of action. Likewise, a plaintiff who only wins in part on their own motion for summary judgment will still have a later opportunity to prove the remaining claims at trial.

Likewise, the exclusion of cross-motions that were decided in a single judicial opinion has a similar effect on results. The presence of cross-motions—where both the plaintiff and the defendant file motions for summary judgment—could signal that both sides believe they have a strong case. The outcome of such disputes may be different from those where only a single party files, which may indicate more lopsided merits, whether in the defendant’s or the plaintiff’s favor.

In summary, the absence of these partial results likely means that the corpus is skewed in favor of defendant-favorable legal cases and outcomes. This skew is visible in the summary statistics for the corpus. In 98% of the cases in this study, the defendant/employer filed the motion for summary judgment,[39] and 76% of those motions were granted.[40] This skew ultimately influenced the methodological approach we used in analyzing the data.

As described further below, many of our analyses used binary machine-learning classifiers. In simple terms, this means that we wrote computer code that “learned” from the textual, stylistic, and citation characteristics of a “training set” of winning and losing briefs and then applied that learning to classify a new “test set” of briefs as winners or losers.[41] How well the classifier did in guessing the correct outcome for the test cases supplies our measure of performance and gives us a window into which features of the briefs were most and least predictive of a summary judgment win.

Researchers have multiple options for measuring classification performance.[42] For example, researchers focused on identifying all positive instances (e.g., positive COVID diagnoses), even at the expense of generating some false positives, may choose to maximize a performance measure called sensitivity.[43] If the costs of a false positive are very high, however, then researchers might focus on another performance measure called specificity.[44] The changing medical advice about routine mammograms for all women at age 40 reflects a concern with false positives, as an abnormal mammogram may lead to costly, painful, stressful, and invasive follow-up medical procedures.[45] Other composite performance measures such as accuracy and the F1 score, or F-statistic, strike different balances between true and false positives and negatives.[46]

In our experimental results in the present study, we evaluate accuracy using yet another measure, the Matthews Correlation Coefficient (MCC).[47] This decision is driven by the substantial imbalance in our data set between granted summary judgment motions (positive instances) and denied summary judgment motions (negative instances). Widely used in machine learning applications in biostatistics, MCC is particularly useful where, as here, the underlying data is highly skewed. We also include the F1 score in the results presented below, as that measure may be more familiar to a computational legal studies readership. An MCC score of 1 is a perfect prediction of a positive relationship; a score of –1 is a perfect prediction of a negative, or inverse, relationship; 0 is a coin flip.[48] Our models, therefore, seek to maximize MCC score. As a starting point, we note that, due to the skew in our data, the MCC score for a model that predicts a brief’s chances of success based solely on whether the brief was filed by the movant or respondent is 0.481 and the frequency-weighted F-measure is 0.740.

B. Analytical Methods

After gathering our relevant brief-opinion sets, we performed several initial text processing tasks, including converting all text from its original .pdf or .docx format into machine-readable .txt format, parsing all text at the sentence level, and tagging all citations to case law within the text.[49] We then performed a series of analyses of citation usage, textual characteristics, and stylistic features of the text, assessing the briefs’ predictive power and testing the conventional wisdom of legal research and writing instruction.

We identified case law citations and string citations within briefs by building “finder” tools that searched for all combinations of numbers, punctuation, and characters that follow Bluebook citation formatting, aided by the list of citation and reporter formats provided by CourtListener.[50] Our analysis did not include citations to statutes, regulations, or the factual record, as such citations did not follow a standardized format, and varied considerably from brief to brief.[51] Parsing string citations to multiple cases, seriatum, proved particularly complex from a computational standpoint, due to the proliferation of periods, quotation marks, parentheses and other punctuation both within and between the citation itself, the parenthetical text, and the preceding sentences.

Separately, our analysis also classified individual citations according to the frequency with which they appeared in the corpus as a whole. This enabled us to differentiate between popular citations and those that appeared infrequently.

We also generated stylistic measures relating to legal writing, such as the use of negative intensifiers and positive intensifiers.[52] Stylistic measures were generated through the construction of custom style dictionaries through a close, qualitative review of a few dozen briefs in the original sample. Noteworthy word choices were grouped into categories and refined through further human review, with reference to the LRW literature. The dictionaries appear in the Appendix.

Our models also tested variables relating to the briefs’ textual characteristics—in particular, the number of documents filed (i.e., whether the parties filed a reply or surreply in addition to an opening brief); number of sentences in the brief; and average sentence length. Lastly, we included a set of control variables: court, nature of suit, and the parties’ pro se status.

III. Results

We begin with a discussion of the role of citations in summary judgment outcomes to better understand the role of legal research in good lawyering. We used multiple approaches to identify the use and efficacy of citations. First, we examined the role of the citation count per brief in predicting outcomes. Second, we tested the relationship between outcomes and the specific patterns of citations present in each brief. Finally, we used network analysis, also known as graph analysis, to identify case citations that were common among briefs in the corpus. This methodology allowed us to test whether outcomes for a brief could be predicted based on the success of briefs in the same “neighborhood,” or cluster, that cited to the same cases. We also used graph analysis to evaluate how well a brief connected to the larger body of case law within the corpus—was a brief citing many cases that were commonly cited by other briefs or was it citing to cases rarely cited by others?

We then attempted to understand the role of legal writing by testing the predictive power of various textual characteristics and stylistic features using machine-learning methodologies.

A. Citation Count

First, we modeled summary judgment wins[53] as a function of a variety of textual, stylistic, and citation-related features of the briefs. We discuss the textual and style-related results further below.[54] Our simplest approach to citation analysis was merely to count them: How many citations appeared per brief? These frequencies may serve as a rough proxy for the intensity of lawyering effort or the availability of lawyering resources. In a related analysis, we also counted the total number of documents filed in connection with each brief.

Summary judgment motion practice proceeds in multiple rounds, with an opening brief by the moving party, an opposition by the respondent, a reply by the movant, and an optional surreply (allowed at the judge’s discretion) by the respondent. Like citation frequency, the number of documents filed in connection with each brief might be an indication of the intensity of lawyering effort and/or available resources.

We found in our experiments that citation and document count, depending on the particular classification model and methodology employed, were consistently among the top predictive features of summary judgment outcome. We return to the implications of this finding in subsequent parts.

B. Citation Patterns

Beyond raw citation counts, we also performed a series of analyses based on which cases were cited and patterns of citations within the corpus. We first created citation frequency vectors that captured the number of times all citations appeared per brief.[55] For example, imagine that Brief B1 cited Case C1 twice, Case C2 once, and Case C3 twice. Brief B2 cited Case C1 zero times, Case C2 zero times, Case C3 three times, and Case C4 one time. The two briefs’ citation frequency vectors would look like the rows in Table 2:

Table 2: Illustration of Citation Frequency Vectors

| Brief | Citation Counts | |||

| Case C1 | Case C2 | Case C3 | Case C4 | |

| B1 | 2 | 1 | 2 | 0 |

| B2 | 0 | 0 | 3 | 1 |

Vectorizing citation frequency thus captures multiple dimensions of citation usage: the number of unique cases cited per brief, their frequency, and the overlap between briefs’ citation patterns.[56]

As Table 3 below shows, any given brief’s particular citation frequency vector was only modestly predictive of a summary judgment win. In other words, knowing the particular combination of cites present in a brief allows one to predict the brief’s success better than a coin flip, but only slightly. Citation frequency vectors were better at predicting whether a brief was filed by the plaintiff or defendant (MCC = 0.420, F1 = 0.690) and whether the brief was written by the movant or the respondent (MCC = 0.450, F1 = 0.694).[57] In other words, the cases parties cite depend on where they sit: plaintiffs tend to cite to a common set of cases, while defendants cite to their own common set of cases.

However, we also identified a subset of citations that, when present in a brief’s citation frequency vector, increased the probability of summary judgment win. We include the presence of the top 100 of these “information gain” citations in Table 3, associated with a near-tripling of the MCC score. Information gain here refers to the contribution of those particular features, or variables, to the predictive performance of the model.[58]

Table 3: Performance in Win/Loss Prediction Based on

Citation Frequency Vectors[59]

| Feature | MCC | F1 Score |

| Citation frequency vectors | 0.152 | 0.563 |

| The 100 highest information gain citations | 0.401 | 0.611 |

Interestingly, the top information gain cases were not exclusively, or even predominantly, U.S. Supreme Court cases. Instead, they appear to consist of circuit court cases that stand in for a particular type of fact pattern. This squares with intuition. Many, if not most, briefs that we reviewed contained boilerplate recitations of the summary judgment standard, citing civil procedure hornbook Supreme Court cases like Celotex Corp. v. Catrett.[60] Additionally, many briefs cited Supreme Court cases that establish the process for judicial analysis of the particular case type at hand, e.g., the burden shifting framework applicable to employment discrimination claims set out in McDonnell Douglas Corp. v. Green.[61] The popularity of these staple Supreme Court citations throughout the corpus means that their presence in any given brief would be unlikely to predict outcome.[62] Instead, the citations that were the most information-rich were cases from the country’s appellate courts.

By way of illustration, the top fifteen information gain cases are listed in Table 4 below.

Table 4: Most Predictive Cases in Corpus[63]

| Case Name |

| Elrod v. Sears, Roebuck & Co., 939 F.2d 1466 (11th Cir. 1991) |

| Hawkins v. Pepsico, 203 F.3d 274 (4th Cir. 2000) |

| Waldridge v. American Hoechst Corp., 24 F.3d 918 (7th Cir. 1994) |

| Causey v. Balog, 162 F.3d 795 (4th Cir. 1998) |

| Mendoza v. Borden, Inc., 195 F.3d 1238 (11th Cir. 1999) |

| Wascura v. City of South Miami, 257 F.3d 1238 (11th Cir. 2001) |

| Baldwin County Welcome Center v. Brown, 466 U.S. 147 (1984) |

| Knight v. Baptist Hospital of Miami, Inc., 330 F.3d 1313 (11th Cir. 2003) |

| Smith v. Lockheed–Martin Corp., 644 F.3d 1321 (11th Cir. 2011) |

| Bodenheimer v. PPG Industries, 5 F.3d 955 (5th Cir. 1993) |

| Lynn v. Deaconess Medical Center–West Campus, 160 F.3d 484 (8th Cir. 1998) |

| Rice–Lamar v. City of Ft. Lauderdale, 232 F.3d 836 (11th Cir. 2000) |

| Charbonnages de France v. Smith, 597 F.2d 406 (4th Cir. 1979) |

| Clemons v. Dougherty County, 684 F.2d 1365 (11th Cir. 1982) |

| Steger v. General Electric Co., 318 F.3d 1066 (11th Cir. 2003) |

| United Mine Workers v. Gibbs, 86 S. Ct. 1130 (1966) |

Notably, these cases are predominantly from the more conservative Eleventh and Fourth Circuits, and many were decided twenty or thirty years ago. The liberal Ninth Circuit does not appear on the list. Since our corpus was drawn from cases all over the country, this suggests that precedent from conservative circuits may be especially influential in summary judgment cases. This result may be partly attributable to the skewed nature of the corpus, which did not include the plaintiff-favorable partial rulings or cross motions decided in a single opinion.

Our review of these cases also suggests that almost all tended to stand for a very narrow set of facts and law that would justify disposing of a case on summary judgment.[64] For example, the case at the top of the list—Elrod v. Sears, Roebuck & Co.[65]—holds that an employer’s truly held belief that an employee engaged in harassment or retaliation can be a legitimate non-discriminatory basis for firing that individual, regardless of whether the harassment occurred.[66] The second case on the list—Hawkins v. PepsiCo[67]—holds that a personality conflict between a supervisor and a subordinate can be a legitimate non-discriminatory reason for a termination.[68] The third case—Waldridge v. American Hoechst[69]—involved a plaintiff who had submitted a bare-bones Statement of Genuine Issues that did not “identify with specificity what factual issues were disputed, let alone supply the requisite citations to the evidentiary record.”[70] This failure was a sufficient basis for granting summary judgment.

Nearly all of the top fifteen cases would have been helpful for an employer seeking summary judgment, which is unsurprising given the skewed nature of the corpus, in which employer-favorable rulings predominate. These cases tend to tip the balance in the employer’s favor, provided the employer can show that the facts of its own case are comparable to those in the cited case. Indeed, a case that cites Hawkins v. PepsiCo could suggest that the employer has a strong defense because the fact pattern resembles that in Hawkins. At the same time, a case that cites Hawkins may also be a mark of strong legal research, that the lawyer had sufficient command of the jurisprudence or resources and time to locate these narrow cases with factual parallels that favor their client’s case.

Only two Supreme Court cases appear on the list of the top fifteen most influential cases—Baldwin County Welcome Center v. Brown[71] and United Mine Workers v. Gibbs.[72] Both cases are somewhat obscure and are not commonly covered in employment law courses, nor do they contain boilerplate language on the summary judgment or burden shifting standards. This does not suggest, however, that lawyers should give up on citing Supreme Court jurisprudence. In a related finding in subpart III(C) below, briefs fared better when they cited to other cases commonly cited by others. This suggests that, while briefs should not only cite information-poor, commonly cited Supreme Court cases, neither should they cite only idiosyncratic, rarely used precedent. These results are discussed further below.

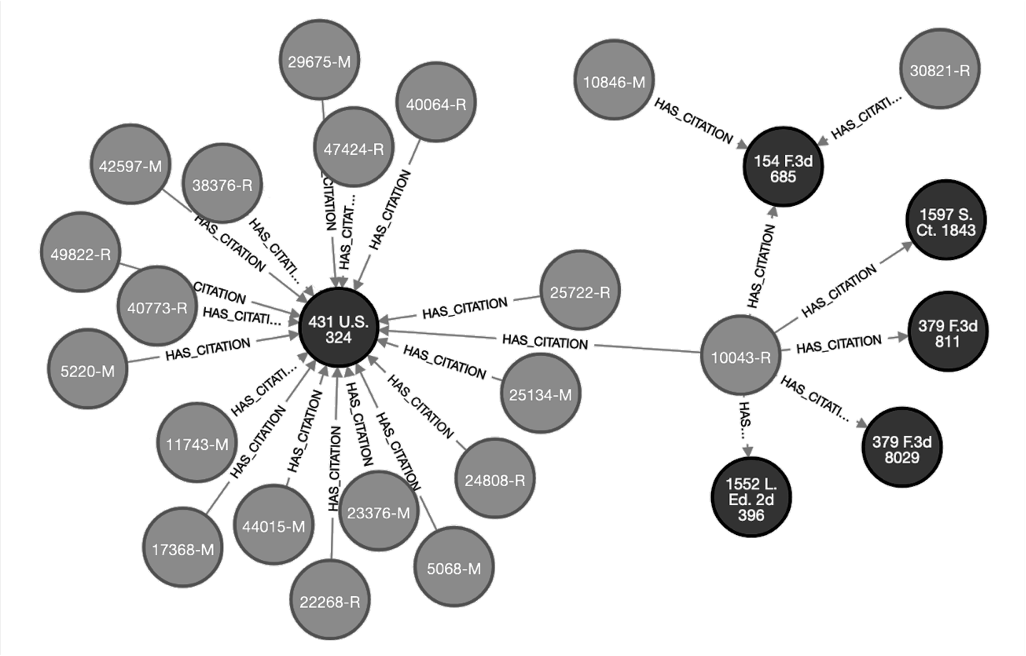

C. Graph Analysis

Our final exploration of citations’ predictive power employed graph analysis, also known as network analysis,[73] to illustrate and analyze shared citations among the network of briefs. This approach is similar to techniques used to map the citations among Supreme Court decisions over time,[74] and within statutes and regulations.[75]

Figure 3 below represents each brief as a gray circle and each cited case as a black circle. An “M” or “R” in a gray circle indicates whether the brief was filed by a movant or respondent on summary judgment.

Figure 3: Citation Graph Excerpt, Brief-Citation Network

The citation graph provides a visual representation of commonly and less commonly cited precedent. In Figure 2, for example, many briefs cite to International Brotherhood of Teamsters v. United States[76] (the black circle in the middle), while only a few briefs cite to Hendricks-Robinson v. Excel Corporation.[77]

This network-based model enables us to make predictions about the probability of success of a particular brief, based on the frequency with which other briefs cite to the same precedent, and based on the success of those other citing briefs. A network-based model allows us to test two hypotheses. First, do briefs succeed by citing the same cases as other briefs, or by citing unusual cases not commonly cited by others? Second, this model allows us to test a predictive model that clusters cases together according to common citations. To the extent such clusters reflect common legal issues and common fact patterns, it might be possible to predict the success of a particular brief based on the success rates of other briefs within that cluster and nearby clusters.

For each brief in our graph, we derived the following predictive measures based on the characteristics of the citations within the brief and of other briefs that cite to the same cases:

Cluster Win Probability (M or R). The success rate of similar briefs, according to shared citations. Clusters include all briefs of the same type (movant or respondent) connected through a common citation. As illustrated in Figure 3, the value for this variable for brief 25722-R would average the win rate for all other “R” briefs within the Teamsters cluster.

Brief Cite Count. The number of citations in the brief.

Brief Cite Popularity. The popularity of each citation in a brief. In other words, the number of other briefs that cite to each citation in a brief.[78]

Opposing Party Shared Cites. The number of citations shared with the brief of the opposing party.

Brief Cite Win Score. The win rate of other briefs that cite to a particular case. This variable calculates the average “win score” for all citations in the brief.

Table 5 below shows the information gain—or relative contribution to the model’s predictive performance—from each of the graph features in win prediction. Entries of zero represent zero information gain. As Table 5 shows, the most predictive feature of the graph was Brief Cite Win Score, which captures the extent to which all cases cited in any given brief were also cited in other winning briefs. The next-most important graph features captured other aspects of shared-winningness: Cluster Win Probability (M or R). These variables measured the winningness of each brief’s network “neighborhood,” or cluster of briefs defined by the presence of at least one citation in common. Here again, the graph suggests that winning briefs share common citations, and that good lawyering, to some extent, may boil down to the ability to identify winning citations to precedent.

Table 5: Win Prediction Information Gain of Figure 3 Graph Features

| Graph Feature | MCC |

| Brief Cite Win Score | 0.207 |

| Cluster Win Probability (M or R) | 0.179 |

| Brief Cite Count | 0.035 |

| Opposing Party Shared Cites | 0.022 |

| Brief Cite Popularity | 0 |

Table 6, in turn, shows win prediction performance on the highest information gain features for combinations of the above listed variables. Here, we experimented with various combinations of graph features, and present the most predictive combinations for all briefs and for movants and respondents separately.

Table 6: Win Prediction Information Gain of Figure 3 Graph Features, Variable Combinations[79]

| Brief Type | Graph Features | MCC | F1 Score |

| All | Brief Cite Win Score, Opposing Party Shared Cites,

Cluster Win Probability (M or R), Brief Cite Counts |

0.477 | 0.742 |

| Respondent | Brief Cite Win Score, Opposing Party Shared Cites | 0.189 | 0.664 |

| Movant | Brief Cite Win Score, Opposing Party Shared Cites | 0.177 | 0.715 |

As Table 6 shows, the cumulative prediction rate for the most predictive group of network variables (MCC = 0.477), computed for all briefs (M and R), is comparable to the baseline prediction value based on party alone (MCC = 0.481). In other words, an algorithm that is blind to movant/respondent and plaintiff/defendant identifiers would be able to predict summary judgment outcomes with approximately the same success based on the various shared citation features generated by our graph analysis. This finding reinforces our earlier suggestion about the importance of research and citation selection among lawyering skills.

The “Respondent” and “Movant” rows in Table 6 offer more interesting insight. Here, the MCC values record the model’s success in predicting summary judgment outcomes above the baseline predictive power of party identity alone. The two variables that were most predictive, for both movants and respondents, were Brief Cite Win Score and Opposing Party Shared Cites.

This is particularly informative for respondents who, as noted above, tend to lose on summary judgment.[80] The graph analysis suggests that respondents whose citations mirror their opponents’ (movants’) citations, and whose citations also appear frequently in granted summary judgment briefs (i.e., the outcomes that are bad for respondents), tend to fare better on summary judgment. This highlights the value of defensive lawyering: respondents should not just make their own arguments, supported by their own sets of citations to respondent-favorable precedent. Instead, the analysis suggests that they should engage directly and substantially with the body of case law upon which their opponents rely.

Yet again, this strategy privileges lawyers and clients with sufficient time, staffing, and access to legal research resources to engage with their opponents’ citations and construct arguments in response. As discussed in greater detail in Part V below, these results suggest that network-based features could serve as useful predictors for the relative merits of a particular brief and could serve as the basis for an open-access computationally enabled brief bank or citation identifier to increase access to justice.

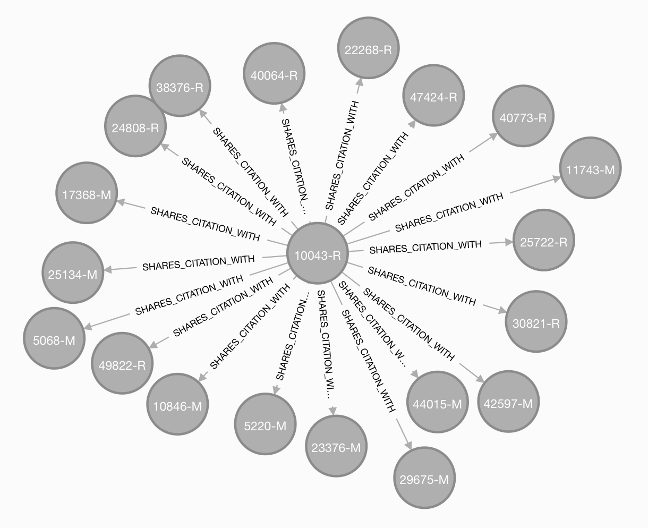

Our final set of analyses examined the degree of connectedness between citations in a given brief and the citations in other briefs. This method is similar to our approach illustrated in Figure 3 and Tables 5 and 6. However, rather than presenting briefs as “spokes” with common citations as “hubs,” Figure 4 below directly connects briefs to each other through shared citations, focusing on the many briefs that share citations with a single brief, 10043-R. For example, the moving brief “10846-M” (in the bottom left corner of the graph) shares one or more citations with 10043-R. Although the excerpt in Figure 4 is too small to reveal the extent of overlap in citations between 10846-M and other briefs, a brief with few spokes connecting it to other briefs would be more isolated within the network. The most isolated briefs had zero shared citations with other briefs (singletons). Only eleven briefs in the corpus were singletons.

Figure 4: Citation Graph Excerpt, Brief-Brief Network

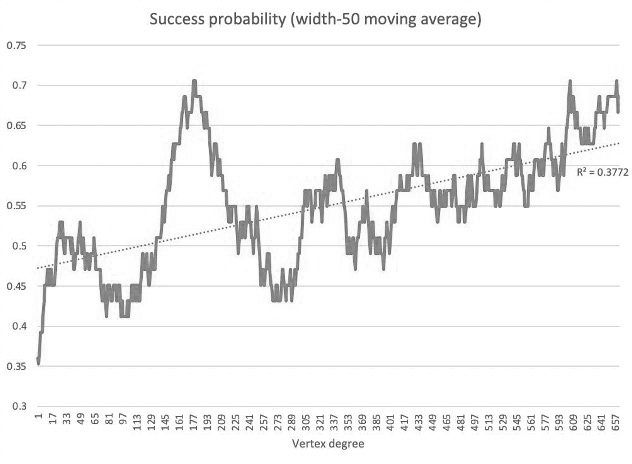

We then used this degree of shared citation measure as a predictor of summary judgment success. Notably, all of the singleton briefs with no shared cites were losing briefs. We visualize the probability of success based on shared citations in Figure 5, where the vertical axis captures a brief’s likelihood of success and the horizontal axis captures the number of other briefs having at least one citation in common with that brief. The upward slope of the line from left to right indicates a positive relationship between the two, such that the greater the number of shared citations, the greater that brief’s likelihood of success.

Figure 5: Mean Likelihood of Success as a Function of Shared Citations

The relationship between success and vertex degree is particularly strong near the top of the scale—that is, the briefs citing many authorities that are cited by others. These results suggest, at the very least, that it is a poor strategy to exclusively cite unusual precedent in a brief, while those that cite to a common body of law tend to fare better. We return to the implications of these findings, and their connections to access to justice, further in Part V below.

D. Prediction from Textual Characteristics and Stylistic Features

We now move from legal research to legal writing. In addition to our analyses of citations as predictors of summary judgment outcomes, we tested the predictive effect of multiple textual characteristics, such as sentence count and length, and stylistic features, measured via the set of dictionaries available in the Appendix. Table 7 below lists the information gain of nine textual and stylistic variables. Note that the previously discussed party identity, document count, and citation count variables were more predictive than the features discussed here; the list shown in Table 7 picks up that set of features. Table 7 also omits the controls that we included in the model for court, case, and party characteristics, none of which improved predictive performance.

Table 7: Win Prediction Information Gain of Textual Characteristics

and Stylistic Features

| Feature | MCC |

| Hedging | 0.061 |

| Positive intensifiers | 0.056 |

| Sentence count | 0.056 |

| Negative intensifiers | 0.055 |

| Repetition | 0.046 |

| Total string cites | 0.044 |

| Mean string cite length | 0.032 |

| Mean sentence length | 0 |

| Negative emotional state | 0 |

In interpreting these results, we draw from the legal research and writing literature—that is, scholarship by law faculty who specialize in legal research and writing. These scholars serve as the source of received wisdom about effective legal writing. Throughout, however, we note the relatively greater importance of citations in predicting summary judgment outcomes—suggesting that regardless of a lawyer’s skill as a wordsmith, their skill as a researcher, or the research resources they have available, citations appear to be more important in securing a victory on summary judgment.

1. Hedging (e.g., “however,” “albeit,” “regardless,” “nevertheless,” “while”).—

Hedging was the most informative textual or stylistic feature in our analysis. Legal writing scholars devote substantial attention to the question of how best to handle problematic facts and case law. In general, they advise against ignoring bad law—indeed, lawyers have an ethical obligation to cite unfavorable controlling law.[81] Instead, they offer various strategies for handling bad facts, such as “address[ing] unfavorable facts more quickly and in less detail”[82] or “pairing that unfavorable fact with a more positive fact.”[83] Rocklin and her coauthors argue that unfavorable case law presents an opportunity for lawyers to “explain why, despite anything opposing counsel might say, you still win.”[84]

In our qualitative review, we observed that hedging words tended to be used when a lawyer was attempting to address a problematic aspect of the case by acknowledging the issue but noting the presence of a contrasting fact or legal authority. Thus, the presence of hedging words could signal skillful lawyering. This result also aligns with the graph analysis above, where respondents’ briefs that shared citations with movants’ briefs helped improve those briefs’ prospects. Taken together, these findings support the LRW advice to address bad or opposing legal propositions directly rather than merely stating one’s own affirmative case.

2. Positive Intensifiers (e.g., “conclusory,” “erratic,” “hastily,” “unmistakable,” “misplaced”).—

The presence of positive intensifiers in our corpus was also aligned with greater summary judgment success. We borrow the term “positive intensifier” from Beazley, who counsels using positive intensifiers instead of negative ones.[85] Beazley argues that positive intensifiers are distinct for their precision—that they are followed by more “concrete information” about what is wrong with the other side’s argument.[86]

Our dictionary of positive intensifiers does not exactly match Beazley’s, although there is some overlap. In our qualitative review, the use of positive intensifiers tended to be associated with high quality writing in a brief. While we were hard pressed to draw a clear distinction in meaning between negative and positive intensifiers identified in the LRW literature, we noted that positive intensifiers tended to be somewhat more muted. Thus, we classified the word “unmistakable” as a positive intensifier, though its meaning is not so different from the dreaded “clearly,” which, along with “obviously,” LRW texts advise strongly against. The positive intensifiers in our dictionary also tended to assign fewer negative attributes to the opposing lawyer or party (“misplaced” vs. “disingenuous,” “erroneous” vs. “illogical,” “obfuscate” vs. “cover up”).

It may be that the selective use of positive intensifiers, though hardly different in meaning from negative intensifiers, reflects a style of writing preferred by judges. It is also possible that positive intensifiers signal underlying merit. Lawyers who are confident of their case, for example, may feel no need to overstate and instead may use more muted language when the facts and law speak for themselves. Likewise, a lawyer who is truly confident about her case may feel no need to cast aspersions on the other side’s motives and may instead characterize the other side’s position as merely mistaken. Our modeling results confirm, though modestly, these propositions.

3. Sentence Count and Mean Length.—

Legal writing scholars provide nuanced advice with respect to length.[87] In the context of writing a statement of facts, for example, Rocklin and her coauthors note that the length should be driven by the complexity of the fact pattern.[88] Calleros, in his guidebook to legal writing, advises that writers “must strike an effective balance between light and in-depth analyses to achieve the dual goals of clarity and concision.”[89] Scholars encourage authors to strike a balance between low-level and complex analysis. While light analysis is often insufficient, “in-depth analysis . . . may cause the reader to grow weary and to lose sight of the general legal theory.”[90]

With respect to sentence length, Beazley suggests variety: “One short sentence can be effective. More than two short sentences are not.”[91] Rocklin advises editing with an eye toward “unwieldy” and “overly long” sentences” with “too many embedded ideas” or “too many empty words.”[92] McAlpin advises against sentences that are more than 25 words long.[93] Oates, Enquist, and Kunsch argue that sentence length depends on the reader and the context, but generally caution against “overly long sentence[s].”[94]

Our predictive models include two variables relating to length. We include a variable for the number of sentences within a brief—which was a proxy for the length of the brief as a whole. We also include a variable for the average length of sentences. While this variable would pick up briefs where lengthy sentences proliferate, it would not be able to detect the difference between a brief with average-length sentences and one with a mix of very short and very long sentences.

As Table 7 shows, the number of sentences per brief was approximately as predictive of a summary judgment win as the presence of positive intensifiers—that is to say, moderately so. Mean sentence length, on the other hand, produced no information gain, neither confirming nor disproving the LRW literature’s advice.

4. Negative Intensifiers (e.g., “frivolous,” “obviously,” “clearly,” “unfounded,” “whatsoever,” “woefully”).—

The term “negative intensifier” comes from LRW scholar Mary Beth Beazley[95] and refers to words that tend to overstate one’s case or denigrate the other side. Other legal writing scholars likewise advise against hyperbole—Rocklin advocates for “striv[ing] for a tone that suggests objectivity even while presenting the facts from your client’s perspective.”[96]

In our qualitative review of briefs, we observed a particular prevalence of negative intensifiers in briefs that were poorly written. We also observed that negative intensifiers tended to be used in personal attacks against the opposing side, a tactic that legal writing scholars discourage.[97] We, therefore, use negative intensifiers as a proxy for poor writing. At the same time, it is possible that the use of negative intensifiers could signal the underlying merits of a case in either direction. Lawyers might use extreme language when the facts are extreme. Alternatively, they might use extreme language to cover up factual gaps and weaknesses—a signal that these lawyers “doth protest too much.”[98]

Negative intensifiers may also affect outcomes independent of the merits. Such language may be particularly irksome to judges, who in one survey singled out the word “clearly” as a particular dislike in briefs.[99] Judicial irritation may thus have an independent effect on case outcomes.

Here, we observe that the presence of positive intensifiers is more heavily associated with summary judgment success than the presence of negative intensifiers, lending support to the weight of LRW advice. However, the difference between the information gain associated with the two intensifier types is small, suggesting that, on the whole, intensifiers are helpful, and positive intensifiers only slightly more so than negative.

5. Repetition (e.g., “again,” “also,” “many,” “repeatedly,” “both,” “neither”).—

Legal writing scholars take a nuanced position with respect to repetition in legal briefs. Oates, Enquist, and Kunsch, for example, note that “[i]n the United States legal culture, conciseness is even more highly prized as lawyers fight to keep their heads above the paper flood.”[100] However, they also note the usefulness of introductions, conclusions, and mini-conclusions—which serve as a form of repetition.[101] They also note the persuasive power of subtle repetition, using slightly different wording to reinforce a theme or theory of the case.[102]

We constructed the repetition dictionary to contain words that tended to preface a repeated reference to a particular fact or that served to highlight a pattern of conduct or related facts. We hypothesized that repetition words might signal a somewhat stronger case through related facts and legal arguments that support a trend. However, like intensifiers, the converse may be true—that the use of repetition words signals excess verbiage and mindless emphasis that could have been removed through more aggressive editing.

Our results place repetition words near the bottom with respect to information gain, suggesting that this particular lawyering strategy may only be marginally effective.

6. String Citations (total and mean length).—

String citations consist of multiple citations in a row, often with a parenthetical describing the relevant facts or legal proposition. Rocklin and her coauthors argue that string citations are useful for proving the presence of a legal trend.[103] However, they caution that string cites are “difficult to read” because they “create a long block of text” that “readers tend to skip over.”[104] They also note that string cites can be a waste of space if the cites relate to a generally accepted proposition of law.[105]

String cites do not have universal approval within the legal writing literature. Mary Beth Beazley cautions against string citations, arguing “[j]udges are almost uniformly against the use of string citations.”[106] Beazley also suggests that the parenthetical text separating string cites are fraught with peril, noting that “ineffective parentheticals tend to give the reader a snippet of information, but not enough to make the case useful to the reader.”[107] By contrast, Garner argues that string cites are “relatively harmless” once they have been moved to the footnotes.[108]

We include two variables relating to string cites in our predictive models. One variable counts the number of string cites, where each set of string cites counts as one cite. Another variable counts the average number of cited cases within a string cite to test the proposition that long string cites are generally unhelpful.

Our results suggest a saturation point: string cites themselves were helpful in predicting summary judgment wins, but only to a point. Confirming LRW advice, lengthy string cites containing five or more citations tended to detract from a brief’s prospects.

7. Negative Emotional State (e.g., “upset,” “scared,” “threatened,” “cried”).—

Rocklin and her coauthors advise lawyers to use “emotional facts” judiciously, characterizing them as “a kind of background that cause a reader to feel positively toward one party or negatively toward another.”[109]

The use of words describing an individual’s negative emotional state were relatively rare within the subsample of briefs used to generate the dictionaries. However, this negative-emotional-state language tended to appear in briefs describing particularly aggravated fact patterns. Although some of this usage could reflect rhetorical flourishes or exaggeration by lawyers, it may also signal the underlying merits of the dispute.

Here, our results showed no information gain from the inclusion of negative-emotional-state language, though our analysis could not distinguish between language that a reader might deem excessive or hyperbolic and language that accurately describes underlying fact patterns that themselves are quite negative or extreme.

8. Controls.—

Finally, we note that none of the control variables had a meaningful effect in our analyses. This finding was somewhat surprising, particularly with respect to the pro se variable, which we expected to be highly predictive of summary judgment losses. However, it is likely that the effect of pro se status—and in particular the resource disadvantages associated with pro se status—was captured through other variables. Pro se litigants, for example, may not cite to case law; know about filing reply briefs; or be exposed to the kind of legal-writing training that urges the use of positive identifiers.

In fact, all our style and citation variables were negatively correlated with pro se status, and the largest differences between pro se and represented parties were in the variables that capture aspects of citation usage. These findings align with our more general conclusion about the primacy of legal research in effective lawyering and highlight the need for open-access solutions like the one we propose in the Part that follows.

IV. Discussion

Broadly speaking, our results suggest that lawyering matters, and more intensive lawyering pays dividends. Filing additional briefs improves a client’s prospects, and longer briefs fare slightly better. More citations help, particularly where lawyers rely on a common set of cases widely cited by winning briefs. The results further suggest that merely dumping those citations into lengthy string cites does not help. Instead, careful framing of the law and facts through emphasizing the strong points (i.e., the use of positive intensifiers) and addressing weak points (i.e., hedging) makes a difference. In addition, thorough research that leads a lawyer to uncover a compelling case with similar facts (such as one of the highest “information gain” cases) could tip the scales toward a favorable outcome.

Notably, many of our findings parallel the results of a 2013 study by Professor Scott Moss of plaintiffs’ briefs filed in opposition to summary judgment in employment discrimination cases in which defendants asserted a particular defense—the “same actor” defense. There, Professor Moss reviewed 102 plaintiffs’ briefs and found that more than ten percent displayed “no research tailored to the case. . . [or] only one or very few on-point citations.”[110] On the basis of this and other observations, Professor Moss concluded that brief quality, including the quality of citations, was a good predictor of plaintiff loss on summary judgment.[111]

To be sure, some of the results of both our study and Professor Moss’s study could be traced to the underlying merits of the dispute.[112] As previously noted, lawyers may find it easier to use positive intensifiers when they have a strong case. A lawyer defending a company in an employment case may only be in the position to cite one of the top fifteen “information gain” cases if she has facts that line up with that case, which may furnish a strong defense on their own. And if an underlying case is strong, there may be more favorable case law to add to the brief.

In addition, the results are somewhat reflective of the imbalanced corpus, particularly the exclusion of motions that were only partially granted (an outcome that is generally favorable to the plaintiff). Most cases in the corpus were rulings in favor of the employer, meaning that the stylistic- and citation-based predictors of success were characteristic of the general approach that defense counsel tend to take in employment litigation.

Nevertheless, our results are in line with broader literature regarding access to justice. Our study suggests that clients benefit from well-resourced lawyers who can draw their citations from an array of treatises, legal databases, and even internal brief banks to copy and paste legal arguments from similar factual settings. By contrast, a pro se party or a harried plaintiffs’ lawyer, solo practitioner, or legal aid attorney with a large caseload may be limited in the results she can achieve if she lacks the time and resources to submit a well-researched surreply or to draft a comprehensive opposition brief that takes on the movant’s authority citation by citation. Limited access to legal databases or treatises means she might miss a helpful case or include fewer total citations.[113]

At the same time, our results suggest promising avenues for improving access to justice. In particular, the graph-analysis results suggest that it would be possible to create an open-access, computationally enabled brief bank or citation recommendation tool. Recall that the graph analysis enabled us to predict a brief’s prospects based on the success of other briefs that cited the same cases. Using similar methodology, it would be possible to radically reduce the amount of legal research needed to identify relevant citations in drafting a summary judgment brief at any stage of motion practice.

A plaintiff’s lawyer could, for example, scan a defendant’s opening summary judgment brief into the recommendation tool. The tool would extract the citations and analyze where they sit with respect to other briefs in the corpus. Through the use of graph analysis, the tool would identify the cases most commonly cited in opposition to those cited in the defendant’s brief, i.e., those cases that would be candidates for the plaintiff lawyer’s own opposition brief. The tool would then rank those opposition cites according to their “win rate”—that is, how often other briefs citing that case prevailed in opposing summary judgment. The tool could then provide a ranked list of cases to include in an opposition brief and a set of winning sample briefs.

More sophisticated versions of such a tool could recommend accompanying text based on arguments mined from other briefs, similar to the “how cited” function in Google Scholar.[114] Citations could also be grouped by their proximity in briefs or their proximity in networks, which would likely correspond to related legal topics. These groupings could then serve as a basis for automated brief building.

Beyond identifying strategically useful cases, such a tool could also identify the “bedrock” cases that are most frequently cited in a particular factual setting or for a particular legal proposition. Although these popular cases on their own would not provide much “information gain,” citation to a common foundation of case law favors a party’s prospects in the aggregate. Thus, a brief builder tool that notes the generic cases cited for similar cases of that sort, presented alongside the boilerplate language that typically accompanies those citations, could also be useful in leveling the playing field between well-resourced and less-resourced lawyers.

Citation recommendation tools already exist to some degree—for example, through the legal tech startup, Casetext. Although Casetext uses artificial intelligence to make citation recommendations, the algorithm appears to combine case information extracted from briefs with search terms from the lawyer.[115] It does not appear to make use of network or graph analysis, which also leverages win and loss information. Other legal startups have also attempted to use machine learning to enhance and simplify legal tasks; however, most efforts appear directed at automating the contracting process rather than brief writing.[116] Likewise, writing software such as Grammarly and Briefcatch tend to be focused on grammatical errors and readability.[117] Other software seeks to automate tasks that would normally be performed by a paralegal or legal assistant such as formatting citations, keyciting authority, or generating tables.[118] Harnessing the power of these sorts of solutions, but focusing that power on the resource-intensive task of citation identification, holds real promise for access to justice efforts.

However, and this is a major “however,” a free or low-cost access to justice tool of this sort would only be as robust as the corpus of text from which it draws its conclusions. While large private legal research companies such as Westlaw, LexisNexis, and Bloomberg Law, sitting on mountains of briefs and court opinions, could readily construct such a tool for their paying clients, no similar bulk corpus of court documents is freely available. As previously noted, the federal court document repository, PACER, is expensive to access, does not enable easy identification of the briefs that go with each judge’s opinion, and is not set up for bulk downloads of brief-opinion sets. State courts have no single equivalent system to PACER, and the public availability of trial-level state court documents in electronic form varies wildly by jurisdiction.[119] Likewise, although semi-public resources, such as Google Scholar, are useful for conducting searches, their terms of service do not permit data scraping. In other words, as is increasingly true across domains, access to data serves as the primary differentiator between established industry players and researchers or advocates attempting to create tools for public purposes.

We note that Congress periodically considers making PACER free, which would be a substantial step in the right direction, as a complete corpus of brief-opinion sets would then be available as the raw material for graph and other predictive analyses like those described in this Essay.[120] The federal judiciary has opposed these moves, largely on the ground that their budget would suffer from the lack of PACER fee revenues.[121] Yet underfunded courts should not build paywalls around court documents as a way to generate revenue, as those paywalls—as our research suggest—merely reinforce existing resource disparities and block low-resource litigants from accessing justice.

Finally, we acknowledge that, like any algorithmic approach to decision-making, such a tool could have the effect of further reinforcing the influence of old case law while creating a lag in adapting to new fact patterns, legal theories, and judicial interpretations.[122] Presumably, older—and in our case, employer-favorable—case law would continue to be recommended by the algorithm as “winning” citations, while newer case law would take additional time to become established within the dataset. The algorithm might further cement and reinforce the influence of problematic but “winning” case law, similar to the winner-take-all tendency of other recommendation algorithms in other contexts, such as Spotify.[123] Lawyers who rely exclusively on such an algorithm without performing additional research of their own would risk missing new case law entirely and further delay the introduction of new case law into the algorithm. Such a pattern might result in the “ossification” of areas of law that would otherwise grow and develop in our common law system, as well as less space for cause-driven test cases that are designed to push past the boundaries of existing precedent.[124] Nevertheless, the algorithm could be tweaked, for example, to highlight and accelerate the introduction of new case law by tagging such cases for lawyers as “new” or “trending.”

In sum, to answer the question posed by our title, lawyering appears to matter a great deal. The sort of lawyering that matters the most—skilled legal research and effective use of citations—is costly and may be out of reach to most litigants. Open access solutions like the one we propose here could go a long way to level the playing field, but only if we as a society recognize access to court documents and data, like access to a good lawyer, as a key component of access to justice.

Conclusion

Lawyering—and more to the point, intensive lawyering—can drive the results in summary judgment rulings. Both legal research and stylistic decisions play a measurable role in legal outcomes. Our methodology suggests that a brief’s citations can be used to forecast its prospects based on the success of other briefs sharing those citations. The same methodology could also be used to recommend citations, which could save substantial research time and serve to level the playing field between clients who can afford intensive lawyering and those who cannot. Such a tool, however, could

most easily be constructed by large private legal research companies that already have access to giant corpora of briefs and court rulings, and would likely charge a substantial premium for access. Leveling the playing field would require making open access to briefs and court decisions available throughout our justice system.

Appendix—Stylistic Dictionaries[125]

Hedging—albeit, although, assuming arguendo, belie, even after, even assuming, even if, even though, even without, for the sake of argument, hardly, however, in any event, in response to, in spite of, nevertheless, nonetheless, notwithstanding, of no moment, rather, regardless, undermine, while, with respect to, yet

Negative Intensifiers—abandon, absolute, absurd, artificial, axiomatic, baseless, blatant, boldly, bootstrap, clear, complete absence, completely, conclusively, cover up, cover-up, critically, defective, disingenuous, egregious, epitome, fabricated, false, flimsy, frivolous, futile, futility, illogical, impossible, improper, impugn, inflate, invalid, lack merit, let alone, manifest, mere, mystery, no effort, obvious, patently, plainly, salvage, sandbag, simply, speculative, stark, totally, transparent, unfounded, unquestionably, vain, whatsoever, without question, woefully

Positive Intensifiers—conclusory, critical, erratic, erroneous, even, excuse, fatal flaw, faulty, hastily, inadequate, inconsistencies, indisputably, irrelevant, littered, misplaced, misrepresentation, never, obfuscate, only, overwhelming, remotely, shred, unacceptably, uncorroborated, unmistakable, unsubstantiated, unsupported

Repetition—additional, again, all, already, also, always, both, commonly, consistently, continue, daily, each, ever, expectation, expected, finally, frequent, generally, kept, maintain, many, monthly, most of the time, neither, never, nor, normally, not only, numerous, on more than one occasion, ongoing, permanently, practice, predominantly, previous, prior, repeated, routine, same, second, several, sometimes, standard, still, third, times, twice, unrelenting, weekly, yet again, uniform

Negative Emotional State—upset, scared, threaten, afraid, cried, upsetting, ill, horrify, severe, unrelenting, fear/ed, frighten, distress, tired of, unhappy, concerned, uncomfortable, agitated

- .See, e.g., Victor D. Quintanilla, Rachel A. Allen & Edward R. Hirt, The Signaling Effect of Pro se Status, 42 L. & Soc. Inquiry 1091, 1091 (2017) (“By and large, pro se claimants fail to receive materially meaningful access to justice.”); see also Emily S. Taylor Poppe & Jeffrey J. Rachlinski, Do Lawyers Matter? The Effect of Legal Representation in Civil Disputes, 43 Pepp. L. Rev. 881, 885 (2016) (concluding that “lawyers benefit their clients” while summarizing “the findings of empirical research on the effect of legal representation in nine areas: juvenile cases, housing cases, administrative hearings, family law disputes, employment law litigation and arbitration, small claims cases, tax cases, bankruptcy filings, and tort claims”). ↑

- .US. Const. amend. VI; Akhil Reed Amar, Forward: The Document and the Doctrine, 114 Harv. L. Rev. 26, 68 (2000). Discussing the right to counsel granted by the Sixth Amendment, Amar observes:

The specific Sixth Amendment right to counsel, and the overall architecture of the Sixth Amendment more broadly, aimed to save innocent defendants from erroneous convictions and to promote a parity of courtroom rights between the defendant and the government. At the Founding, an indigent defendant was entitled to government-paid counsel—namely, the judge—but as the adversary system sharpened and criminal law and procedure became more intricate, separate counsel became necessary to redeem the Amendment’s promise and purpose.

Id. (internal citations omitted). ↑

- .See Khari Johnson, The DoNotPay Bot Has Beaten 160,000 Traffic Tickets—and Counting, Venture Beat (June 27, 2016, 2:51 PM), https://venturebeat.com/2016/06/27/donotpay-traffic-lawyer-bot/ [https://perma.cc/TR3M-KEL5] (describing a bot “made to challenge traffic tickets”). ↑

- .See Beverly Rich, How AI Is Changing Contracts, Harv. Bus. Rev. (Feb. 12, 2018), https://hbr.org/2018/02/how-ai-is-changing-contracts [https://perma.cc/Q4DP-6TUE] (“The use of AI contracting software has the potential to improve how all firms contract.”). ↑

- .Asa Fitch, Would You Trust a Lawyer Bot with Your Legal Needs?, Wall St. J.

(Aug. 20, 2020), https://www.wsj.com/articles/would-you-trust-a-lawyer-bot-with-your-legal-needs-11597068042 [https://perma.cc/C9UB-KUSK] (describing a “wave of tech startups” built for “allowing [users] to draft documents or pursue smaller-value disputes without shouldering the high costs of hiring a lawyer”). ↑ - .Robert Weber, Will the “Legal Singularity” Hollow out Law’s Normative Core?, 27 Mich. Tech. L. Rev. 97, 99–101 (2020); Thomas Hedger, Should We Turn the Law over to Robots?, The Atl., https://www.theatlantic.com/sponsored/vmware-2017/robolawyer/1539/ [https://perma.cc/

6VHW-342B]. ↑ - .Emily Ryo, Representing Immigrants: The Role of Lawyers in Immigration Bond Hearings, 52 L. & Soc’y Rev. 503, 509 (2018) (“The research on legal representation in civil proceedings has long acknowledged the challenges associated with identifying the causal effects of legal representation.”). Pinpointing how and why lawyers matter is key to figuring out the extent to which artificial intelligence can replace lawyers. ↑

- .Quintanilla, supra note 1, at 1116. ↑