Artificially Intelligent Class Actions

Class actions are supposed to allow plaintiffs to recover for their high-merit, low-dollar claims. But current law leaves many such plaintiffs out in the cold. To be certified, classes seeking damages must show that, at trial, “common” questions (those for which a single answer will help resolve all class members’ claims) will predominate over “individual” ones (those that must be answered separately as to each member). Currently, many putative classes with important claims—mass torts, consumer fraud, employment discrimination, and more—are regarded as uncertifiable for lack of predominance. As a result, even plaintiffs with valid claims in these areas have little or no access to justice. This state of affairs is exacerbated by a line of Supreme Court cases beginning with Wal-Mart Stores, Inc. v. Dukes. There, the Court disapproved of certain statistical methods for answering individual questions and achieving the predominance of common ones.

This Article proposes a first-of-its-kind solution: AI class actions. Advanced machine learning algorithms would be trained to mimic the decisions of a jury in a particular case. Then, those algorithms would expeditiously resolve the case’s individual questions. As a result, common questions would predominate at trial, facilitating certification for innumerable currently uncertifiable classes. This Article lays out the AI class action proposal in detail. It argues that the proposal is feasible today; the necessary elements are precedented in both complex litigation and computer science. The Article also argues that AI class actions would survive scrutiny under Wal-Mart, though other statistical methods have not. To demonstrate this, the Article develops a new, comprehensive explanation of the higher-order values animating Wal-Mart and its progeny. It shows that these cases are best understood as approving statistical proof only if it can deliver accurate answers at the level of individual plaintiffs. Machine learning can deliver such accuracy in spades.

Introduction

By many accounts, Wal-Mart Stores, Inc. v. Dukes[1] killed “trials by statistics”—a family of adjudicatory techniques designed to facilitate class certification.[2] For a class to be certified, Rule 23 of the Federal Rules of Civil Procedure generally requires that “common” questions of law and fact must “predominate” over individual ones.[3] Yet for whole categories of putative classes in a range of important doctrinal areas—mass torts, consumer fraud, employment discrimination, and more—individual questions invariably predominate.[4] In a mass tort, for example, determining that the defendant’s asbestos proximately caused one class member’s cancer says little about the other members’ illnesses.[5] Instead, resolving the crucial question of causation for the whole class would require innumerable mini-trials. As a result, individual questions would dominate the merits litigation, so certification must be denied.[6] For reasons like this, untold numbers of meritorious claims in hard-to-certify legal areas simply evaporate. Class certification is unavailable, and without it, the claims cannot be economically litigated.[7] Potential defendants are thus allowed to violate the law with impunity, and victims are left without a remedy.

The plaintiffs in Wal-Mart, a Title VII sex discrimination case, proposed a trial plan—based on statistical adjudication—designed to efficiently dispatch that case’s individual questions.[8] Issues like whether each individual class member would have been promoted, but for unlawful discrimination, would be resolved using statistical sampling.[9] A random representative sample of the class would be chosen, their individual questions resolved, and their individual backpay awards determined.[10] Then, everyone in the class would be awarded the sample-average recovery, discounted by the sample-derived probability of a non-meritorious claim.[11] With this high-efficiency plan in place, common questions—like whether Wal-Mart maintained a companywide “policy” enabling discrimination—would again predominate in the litigation.[12] Rule 23 would be satisfied, and the class could be certified.

The Supreme Court disapproved.[13] It pejoratively dubbed the plan “Trial by Formula,” concluding that it violated Rule 23, the Rules Enabling Act, and Due Process.[14] By many accounts, this was the last word on new, creative, and certification-facilitating methods of statistical proof.[15] Such designs were forbidden, and any hope of revitalizing class litigation in hard-to-certify doctrinal areas was lost.[16]

This Article proposes a first-of-its-kind method of statistical proof designed to overcome Wal-Mart’s critique. In short, cutting-edge machine learning algorithms would efficiently resolve individual questions in class actions, thereby allowing common ones to predominate. Such artificially intelligent (AI) class actions would revolutionize aggregate litigation. By enabling class certification across a broad range of doctrinal areas, they would facilitate the vindication of countless meritorious—but currently unvindicable—claims. At the same time, they would help avoid recovery by plaintiffs who were not owed it. In doing so, AI class actions would serve the interests of plaintiffs, defendants, and society at large.

Why should AI class actions survive scrutiny under Wal-Mart when other methods of statistical proof have failed? To answer that question, this Article develops a new, comprehensive account of the higher-order principles animating Wal-Mart and its progeny. The cases’ stated logic is confusing—and perhaps confused. But careful analysis reveals that the Court did not flatly forbid ambitious, new statistical strategies for efficiently resolving individual questions. Instead, the case law is driven by a demand for accuracy in the resolution of claims—not only in the aggregate, but as to individual class members. After Wal-Mart, the Supreme Court continued to endorse a small handful of long-established statistical designs that it believed could produce such individual accuracy.[17] At the same time, it rejected novel approaches that could not adequately minimize individual error.[18] Modern machine learning simply is a collection of related algorithmic techniques for producing automated individual decisions with high accuracy. Thus, well-designed algorithms can deliver accuracy to at least the degree that the post-Wal-Mart Court has found sufficient—and likely do even better.

This Article proceeds in four Parts. Part I begins by recounting the rise and fall of statistical proof as a mechanism for enabling class certification. It discusses the pre-Wal-Mart experiments with sample trials. And it examines Wal-Mart’s rejection of that method of statistical proof, along with another method—a regression analysis—advocated by the plaintiffs in that case. In Wal-Mart and subsequent cases, the Court explained its acceptances and rejections of statistical proof by reference to preexisting substantive law. If the underlying substantive doctrine—Title VII, securities fraud, antitrust, etc.—permitted some form of statistical proof in an ordinary, individual lawsuit, then it could likewise be used in a class action. But if not, then it could not.

Part I shows why this substantive-law rationale cannot adequately explain the Court’s statistical-proof jurisprudence. In short, whenever courts encounter a truly novel method for proving a claim—statistical or otherwise—they must, and do, decide whether it ought to be treated as sufficient. Preexisting sources of substantive law rarely provide a definitive answer. Instead, higher-order values must inform the court’s decision to make new law—by either accepting or rejecting the novel method.

Finally, Part I develops a new account of those higher-order values animating Wal-Mart and the cases interpreting it. The Part argues that of the plausible candidate values, those associated with adjudicatory accuracy—fairness, efficient deterrence, corrective justice, and more—best explain the case law.

Part II introduces machine learning as a tool for resolving individual questions and facilitating class certification. First, it briefly summarizes how the newest generation of machine learning algorithms work. These advanced algorithms perform extraordinarily complex categorization and quantification tasks in essentially every sector of commercial and private life. They recognize individual people’s faces in photos,[19] power Siri and other digital assistants,[20] detect credit card fraud,[21] diagnose diseases,[22] determine creditworthiness,[23] and more. They do all of this by learning from “training data”—sets of sample inputs drawn from known cases and pre-labeled with correct determinations that the algorithm should mimic. The algorithms uncover complex relations between the sample inputs and pre-labeled outputs. And they then apply what they have learned to deliver high-accuracy determinations in new cases—those that neither the algorithm nor its human trainers have seen before.

Next, Part II describes how such algorithms would be used in class litigation. As in Wal-Mart, a representative sample of the class would be selected and their individual questions tried to the jury.[24] But instead of applying the sample’s average answers to every individual class member, the jury’s determinations would serve as labels for training data. Those labels, paired with the evidence that produced them, would be used to train an algorithm to mimic the jury’s decision function. Once trained, the algorithm would analyze the evidence relevant to the remaining, unsampled class members’ claims. Simulating the jury’s decision procedure, it would automatically answer the case’s individual questions as to all of the remaining class members—with high accuracy at the individual level. With individual questions minimized, the litigation could focus primarily on common questions, thus satisfying Rule 23’s predominance requirement.

Would such AI class actions survive scrutiny under Wal-Mart? Part III argues that they would. Their algorithmic decisions would be at least as accurate—and likely much more so—than the handful of statistical approaches that the Supreme Court continues to endorse. Part III also explores a weakened version of the AI class action proposal. Under it, algorithmic answers to individual questions would be merely presumptive—challengeable by either party. This design increases accuracy by allowing for error correction. And it simultaneously advances certain non-accuracy-related values championed in the literature on procedural justice—like legitimacy, dignity, and autonomy.

Part IV takes up normative questions. First, it argues that, unlike other legal applications of machine learning, AI class actions are relatively unlikely to generate or entrench invidious discrimination. The procedural and substantive safeguards of ordinary litigation would help to ensure that the training data—the evidence and the jury’s sample determinations—remained unbiased. An algorithm trained on such data would remain, to the same extent, bias-free. Second, the Part argues that, unlike with certain commercial uses of machine learning, we should not worry here about the “black box” nature of algorithmic “reasoning.” Juries’ reasons are similarly unknowable. We therefore employ procedural mechanisms to hold juries accountable and prevent them from relying on forbidden reasons. The algorithmic decisions in AI class actions would be subject to at least those same—and arguably stronger—protections.

I. Wal-Mart’s Critique of Statistical Proof

As noted above, Wal-Mart is commonly thought to have been the last stand for data-minded wonks set on finding new ways to satisfy Rule 23’s predominance requirement.[25] Forbidding “Trial[s] by Formula,”[26] the Wal-Mart Court abruptly ended years of attempts to open the courts to plaintiffs with high-merit, but low-dollar, claims.

This is not the only way to read Wal-Mart. The long-run implications of almost any Supreme Court decision are debatable, and Wal-Mart is an especially cryptic decision. The majority insisted that its holding turned exclusively on Rule 23(a)(2), which requires only that a putative class share “even a single common question.”[27] Finding that the case lacked any common questions, the court putatively did not reach the predominance issue at which statistical proof is usually aimed.[28] Under this reading, the Court’s objection to statistical proof was merely that it cannot transform an archetypal individual question—like who, in particular, was injured—into a common one.

This Article rejects that narrow, commonality-centered reading of Wal-Mart for two reasons. First, it is hard to square with basic Rule 23 jurisprudence. As Justice Ginsburg’s opinion pointed out, the plaintiffs in Wal-Mart quite obviously did present at least one important common question: Did Wal-Mart have a company-wide policy or practice governing pay and promotion that could serve as the foundation of a disparate impact claim?[29] The majority wrote that this was not a common question because the plaintiffs “provide[d] no convincing proof” of such a policy.[30]

This response, however, confuses the issue of whether plaintiffs present a common question with the issue of whether they can win on that question.[31] That distinction is commonplace in class actions. To prove securities fraud, for example, a plaintiff—or class of plaintiffs—must prove that a company’s statement was material to stock buyers.[32] And if plaintiffs fail to prove materiality, that is a reason to dismiss the case as meritless.[33] But it is not a reason to deny class certification for lack of commonality.[34] Indeed, in such instances, defendants may affirmatively insist on certification so that the whole class is bound by a dismissal with prejudice. Likewise, International Brotherhood of Teamsters v. United States,[35] on which the Wal-Mart majority relied,[36] was about the evidence necessary to prove the existence of a company-wide practice, and thus carry a Title VII claim.[37] Teamsters therefore shows why the question is common. Proving a company-wide policy—or failing to prove it—“will resolve an issue that is central to the validity of each one of the claims in one stroke.”[38]

A second reason to reject the narrow reading of Wal-Mart is that it risks making things too easy. If Wal-Mart held only that statistical proof cannot transform individual questions into common ones, that would be uncontroversial. Such a holding thus does not disfavor statistical proof, as such proof is normally used in class actions. As discussed above, the promise of statistical proof is that it could be used to achieve predominance in cases that do present common questions.[39] And it does so by efficiently answering individual questions, not transmuting them into common ones.[40] Thus, reading Wal-Mart narrowly weakens its critique of statistical proof substantially, making the most important uses of statistical proof trivially distinguishable.

Better then, for purposes of this Article, to treat the Wal-Mart majority as Justice Ginsburg did. On her view, the Court jumped ahead and resolved predominance under Rule 23(b)(3), rather than remanding the issue to the district court.[41] This reading places the majority’s denial of certification on firmer doctrinal footing: Even if the plaintiffs did present common questions about company policies, their proffered statistical proof was not an acceptable way—as discussed below[42]—to render those questions predominant. Moreover, this reading takes Wal-Mart seriously as a critique of statistical proof’s core use in class actions—as a facilitator of predominance. If this Article’s proposal for AI class actions can withstand scrutiny under that version of Wal-Mart, it can withstand scrutiny under any version.

A. The Rise and Fall of Statistical Proof to Facilitate Class Certification

The years leading up to Wal-Mart saw numerous experiments with statistical proof designed to achieve predominance and thus facilitate class certification. In particular, a handful of courts approved trial plans calling for the kind of “sampling” design that Wal-Mart would eventually quash.[43] In each case, a jury resolved the individual questions of a representative sample of the class, and those results were extrapolated to everyone else.[44] Academics have long favored this methodology because it imposes the correct aggregate damages award, despite inaccuracies at the level of individual plaintiffs.[45]

In Hilao v. Estate of Marcos,[46] for example, the parties conducted full trials for the torture, summary execution, and “disappearance” claims of 137 sample class members.[47] The jury found liability as to 135 of the sampled members and awarded damages to them.[48] The district court then awarded a lump sum of damages to the remaining class members, multiplying the total number of members by the proportion of the sample with valid claims, and then by the average sample award.[49] Other trial courts approved similar schemes, with the number of sample adjudications ranging between 13 and 2,000.[50] Some of these designs were upheld on appeal,[51] but others were reversed on grounds presaging Wal-Mart.[52] And in Wal-Mart itself, the Ninth Circuit had approved sampling as a viable method for resolving class members’ individual questions.[53] Ultimately, the Supreme Court disagreed, outlawing sampling as a method of class-wide adjudication for individual questions.[54]

B. Wal-Mart’s Under-Theorized Rule

Sampling was not the only method of statistical proof that Wal-Mart forbade. There, the plaintiffs also presented a regression model designed to show that the discretionary promotion and pay decisions made by thousands of individual managers caused discriminatory harm.[55] The plaintiffs’ expert conducted a “region by region” analysis, concluding that there were “statistically significant disparities between men and women at Wal-Mart . . . [and] these disparities . . . can be explained only by gender discrimination.”[56] But the Court rejected that method of proof, too.[57]

Wal-Mart’s explanation for rejecting these methods of proof was not rooted in Rule 23 per se, but rather in the Court’s understanding of substantive law.[58] As Justice Ginsburg noted, Title VII cases—including class actions—can succeed with a showing of disparate impact,[59] which regularly does involve regression analysis.[60] But in the majority’s view, such evidence was not permitted in a case like Wal-Mart. That was because, as the Court held, a disparate impact claim must challenge some “specific employment practice.”[61] The plaintiffs’ collection of millions of discretionary decisions did not qualify as specific enough.[62] Similarly, as to sampling, the Court found that Wal-Mart had a substantive right to insist on “individualized determinations of each employee’s eligibility for backpay.”[63] Sampling designs—although they impose accurate total damages awards—contain no mechanism for determining which individual plaintiffs’ claims are valid.

Ostensibly, then, the core principle of Wal-Mart is that procedural exigency cannot trump substantive law. As the Court wrote, “[T]he Rules Enabling Act forbids interpreting Rule 23 to ‘abridge, enlarge or modify any substantive right . . . .’”[64] Endorsing statistical proof for the sake of class certification, when it would not be allowed in an ordinary individual suit, would constitute such a modification. The Court also opined that using Rule 23 to deny a defendant’s substantive right to demand individualized liability determinations violated the Due Process Clause.[65] In sum, if some substantive doctrine forbids a method of proof in an individual suit, it is also forbidden in a class action.

Despite ending the recent experiments with sampling, Wal-Mart was not the last Supreme Court case to evaluate statistical proof in class actions. In a series of follow-on cases, the Court both upheld and reversed class certification decisions predicated on various—more traditional—statistical methods of proof.[66] In each case, the Court purported to rely on Wal-Mart’s “substantive law” principle.[67]

In Tyson Foods, Inc. v. Bouaphakeo,[68] the Court upheld the certification of a class of plaintiffs asserting claims under the Fair Labor Standards Act.[69] To certify the class, the district court had endorsed the plaintiffs’ statistical plan for proving the amount of time each class member spent donning and doffing protective equipment.[70] The plaintiffs commissioned a study to determine the average time required for such dressing and undressing.[71] They argued that this average speed should be imputed to every individual class member.[72] The Supreme Court approved. Citing Anderson v. Mt. Clemens Pottery Co.,[73] it held that substantive employment law allowed such statistical proof, at least under the right circumstances.[74] When employers are derelict in their legal duty to keep individual records of employees’ work, they cannot then object to plaintiffs’ reliance on aggregate figures.[75] Instead, in such cases, “representative evidence . . . [is] a permissible means of” proving hours worked.[76]

Likewise, in both Amgen Inc. v. Connecticut Retirement Plans & Trust Funds[77] and Halliburton Co. v. Erica P. John Fund, Inc.,[78] the Supreme Court reaffirmed the “fraud-on-the-market” method of proving individual reliance in securities fraud cases.[79] Under it, if one can show general market reliance—proved using sophisticated regression models of price movements[80]—one may presume that every individual class member likewise relied.[81] The Court again endorsed this method of aggregate proof not because Rule 23 itself authorizes it but because substantive securities law does.[82]

In Comcast Corp. v. Behrend,[83] the Court rejected the plaintiffs’ regression model designed to show class members’ pecuniary losses from Comcast’s monopolization.[84] The problem was not that regression analysis is generally forbidden to prove antitrust injury. Such analyses are regularly used in antitrust cases, both collective and individual.[85] But the Comcast Court rejected the regression model because it failed to match the plaintiffs’ substantive theory of monopolization.[86] The model presupposed four theories of injury-causing monopolization, but three of the four were ruled invalid.[87]

In the end, though, the “substantive law” explanation of Wal-Mart and its progeny cannot tell the whole story. Certainly, if a preexisting and directly-on-point substantive authority blesses or condemns a method of proof for vindicating a variety of claim, procedural considerations cannot override. But when litigants offer new methods of proof—or old methods applied in new ways—there will be ample room to disagree—as the Justices have—about how precedent applies.[88] And sometimes, the question presented will be whether the precedents were right in the first place.[89] In all of these situations, some court must bless or condemn, in the first instance, the proffered proof.

This is, of course, how the traditional methods of statistical proof that have survived Wal-Mart scrutiny first arose. All of them—disparate impact,[90] “fraud-on-the-market,”[91] the Mt. Clemens presumption,[92] and antitrust market reconstruction[93]—are judicial creations. The creation of such methods is the classic province of the judiciary. Legislators define the elements of a claim; courts determine what constitutes acceptable proof of them.[94]

Thus, the question for a court applying Wal-Mart to a new proposed method of statistical proof is not whether preexisting substantive law already permits it. The question must instead be whether substantive law ought to be interpreted to permit it.[95]

To resolve that kind of question, courts must appeal to higher-order principles. What values are served by understanding the defendant in Wal-Mart as lacking a “specific employment practice”—Title VII’s prerequisite for regression-based proof of discrimination?[96] What harm would arise from understanding a policy of managerial discretion as constituting such a specific practice?[97] More broadly, what values are served by endorsing the abbreviated procedures of statistical proof—and thus facilitating class certification—rather than insisting on an individual mini-trial for every individual question?

Wal-Mart and the Supreme Court cases interpreting it supply few answers. Or perhaps it is better to say that their answers are implicit, surfacing only occasionally from beneath the cases’ stated reasoning. But implicit hints are not enough. In order to evaluate new methods of statistical proof, one needs a strong, explicit understanding of the principles underpinning the Wal-Mart line of cases.

C. A Theory of Wal-Mart

This subpart supplies the missing theory of the values driving Wal-Mart and its progeny.

1. Three Sets of Candidate Values.—

Observe that every proposed method of statistical proof is also a proposal for abbreviated adjudicatory process. In class actions, the whole point is to avoid individualized processes—like mini-trials—that would cause individual questions to predominate over common ones. And defendants, to defeat certification, insist that such arduous, member-by-member processes are indispensable.[98] Wal-Mart and its progeny, then, are intrinsically about what kind of judicial process—and how much—is necessary in a given set of circumstances.

A substantial scholarly literature on procedural justice is devoted to investigating the values that are served—or undermined—when adjudicatory process is increased or decreased.[99] Lawrence B. Solum has helpfully typologized those multifarious values into three rough categories: accuracy values, process values, and cost values.[100] As is often the case in law, not all of these families of values can be maximized simultaneously.[101] Thus, legal rules often embody judgments about which values matter most in a given set of circumstances. And as will be demonstrated, accuracy values drive Wal-Mart and subsequent rulings on statistical proof.

Accuracy values are vindicated, unsurprisingly, as adjudications become more accurate.[102] Fulsome judicial process allows more evidence to be entered, the evidence to be scrutinized more closely, and—at least potentially—decision-makers to more often decide correctly.[103] Accuracy values include, among others: efficient deterrence, fairness, equality of outcome, desert, and corrective justice.[104] When legal decisions are accurate at the level of individual plaintiffs and defendants, both parties are efficiently deterred from engaging in socially costly behavior.[105] It is also more fair, just, and equitable when only those plaintiffs who deserve judgments receive them.[106] Like cases ought to be resolved alike.[107] Finally, principles of corrective justice dictate that individuals who were wrongfully injured should be made whole.[108] But injured parties are not fully restored if they are forced to split their damages with uninjured parties or if they go uncompensated by the hand of rough procedural justice.

Process values, by contrast, treat participation in the judicial process as an inherent good, regardless of its relation to accuracy.[109] Some scholars contend that all apparent process values are actually reduceable to accuracy values.[110] But as Solum has argued, truly independent process values do exist.[111] Chief among these is legitimacy.[112] A legal system must allow some quantum of procedural participation in order to be considered legitimate by the polity.[113] This is, in part, because providing access to process demonstrates that the legal system is committed to additional democratic values—like human dignity, equal treatment, and autonomy.[114] Of course, all the process in the world will not legitimize a system if that process does nothing to facilitate legally correct outcomes. Thus, while process values may not be reducible to accuracy, they do in some sense depend on having a relationship to accuracy.[115]

Cost values are just what they sound like. Adjudicatory resources are not infinite. No government in history has had the resources to stage a full-dress trial before deciding whether to issue each and every driver’s license or before approving each and every claim for benefits.[116] Thus, governments must allocate resources in a way that “ensure[s] that the systemic costs of adjudication are not excessive in relation to the interests at stake in the proceeding or type of proceeding.”[117]

2. Accuracy Values Dominate the Wal-Mart Analysis.—

So, which set of values animates the Supreme Court’s statistical-proof rulings in Wal-Mart and subsequent cases? The answer must be accuracy values. The Court’s pattern of rulings is readily explained by reference to accuracy values. But analysis of this constellation of decisions using only pure process or cost heuristics renders the pattern incomprehensible. To be sure, those values matter generally to the structure of class actions. But they cannot differentiate between class actions where statistical proof is permitted and those where it is forbidden.

Consider the statistical methods of proof that the post-Wal-Mart Court has approved. First, the fraud-on-the-market presumption. At its root, the Court’s endorsement of that presumption rested on its assessment that “most investors—knowing that they have little hope of outperforming the market . . . —will rely on the security’s market price as an unbiased assessment of the security’s value.”[118] That is, the Court endorsed the fraud-on-the-market presumption because it believed the presumption to be quite accurate. It was accurate enough, the Court believed, that defendants might, at most, “attempt to pick off the occasional class member here or there through individualized rebuttal.”[119]

The same can be said for statistical methods of proving antitrust injury. Models designed to show antitrust injury are conceptual siblings of fraud-on-the-market. In both cases, a plaintiff class shows, through statistical evidence, that the defendant’s malfeasance adversely affected most market participants.[120] Having proved that, the plaintiffs are then entitled to assume that every market participant was likewise injured.[121] Just as with securities fraud cases, it is plausible that some antitrust plaintiffs escaped injury via idiosyncratic market engagement.[122] But the same logic—that consumers generally rely on market prices—applies to suggest that those instances are rare.

Likewise, the Court’s disapprovals of methods of statistical proof are easily explained in terms of accuracy. The Wal-Mart Court objected to the plaintiffs’ regression analysis because it showed sex-based disparities only at the regional level.[123] Thus, the Court thought, those disparities could have “be[en] attributable to only a small set of Wal–Mart stores, and [could] not by [themselves] establish . . . uniform, store-by-store disparity.”[124] Factors like individual managers’ advancement philosophies or store-by-store differences in applicant pools made it unlikely, the Court thought, that every plaintiff suffered the regional average harm.[125] Instead, the Court reasoned that many individual plaintiffs probably suffered no discriminatory harm at all.[126] In statistics, such erroneous imputation of population-level effects to individuals in the population is called “aggregation bias.”[127]

This accuracy rationale also helps to flesh out the Wal-Mart Court’s interpretation of substantive Title VII doctrine. Recall that Title VII sometimes allows statistical proof to show a disparate impact, but only if the plaintiff ties that impact to some concrete employment “policy.”[128] A unified testing procedure for determining hiring and promotion is such a “policy.”[129] Even some “common mode of exercising discretion that pervades the entire company” will do.[130] But the mere fact of discretion—with innumerable, manager-by-manager differences in its exercise—is not concrete enough. Allowing statistical proof, but only if tied to a single concrete causal theory, is an accuracy-improving move. If all employees were subjected to a single treatment, then it becomes more plausible that gender disparities were caused by that treatment and not by legally inoperative factors like labor-pool composition.[131] The application of a uniform treatment also makes it less likely that some women suffered extremely harsh injuries, while others suffered none.[132]

Accuracy values also easily explain Wal-Mart’s rejection of sampling. Sampling as a method of proof suffers from obvious aggregation bias. It cannot, by its own terms, distinguish between plaintiffs that had a claim and those who do not—nor can it assess variations in damages.[133] Instead, sampling treats every unsampled class member as identical to the sample average, granting everyone the average damages, discounted by the probability of an invalid claim.[134] Every disparity between the sample average and the true fact-of-the-matter thus constitutes an inaccuracy at the individual level.

True, sampling is accurate along one dimension—producing the correct total damages. But that is not enough to vindicate all of the values associated with accuracy. As described above, values like fairness and corrective justice require figuring out who in particular was harmed and by how much.[135] And in some contexts, efficiency might require judgments to be accurate as to both the defendant and the plaintiff. A sampling scheme that compensates plaintiffs without a valid claim may create a moral hazard by rewarding plaintiffs who fail to take cost–benefit-justified precautions.[136] These factors can explain why the Court found that Title VII guarantees a right to demand “individualized determinations of each employee’s eligibility for backpay.”[137]

A similar story emerges when one compares Comcast—which rejected a statistical market model to prove antitrust injury and damages[138]—with cases that approve such models. In Comcast, accuracy was at the core of the dispute between the majority and the dissent. The majority thought that the model was wildly inaccurate because the model presumed the truth of four theories of monopolization, even though three of these theories were previously ruled meritless.[139] But in the dissenters’ view, if any of the theories was viable, then all supercompetitive pricing could be attributed to the valid theory of monopolization.[140] In other words, the model’s viability depended on whether it accurately represented the class members’ injuries.

Tyson, too, is best explained as promoting accuracy-related values. The Court in Tyson held that if an employer fails to maintain legally mandated timekeeping records, plaintiffs may assume that each class member worked the average amount.[141] Normally, treating every employee as average risks individual inaccuracy from aggregation bias—as the sampling design in Wal-Mart showed.[142] But Tyson acts as a prophylactic against an inaccuracy-promoting moral hazard. Absent Tyson, employers would have a strong incentive to eschew the required recordkeeping, which would make class certification impossible, thereby allowing employers to avoid liability for small-dollar underpayment claims. The Court’s ruling penalizes such behavior by making class certification easier.[143] This provides an ex ante incentive for employers to keep legally mandated records, and in the long run, those records increase the accuracy of determinations about the validity and size of individual claims. And even in Tyson itself, the Court viewed the aggregation error introduced by the prophylactic scheme as modest.[144] The variance in “donning and doffing times” between the fastest and slowest workers was only about “10 minutes a day.”[145]

Contrast these straightforward, accuracy-motivated explanations of Wal-Mart and its progeny with pure process-motivated explanations. Did the proof by regression that Wal-Mart rejected threaten process values like legitimacy, autonomy, dignity, or equal treatment? If so, it could only have been by depriving the parties of the opportunity to participate in the resolution of the class members’ individual questions. But then what about the many Title VII class actions in which statistical proof of a disparate impact is allowed?[146] The only difference between those and Wal-Mart was the concreteness of the employer’s hiring and promotion policy.[147] It is highly implausible that, say, legitimacy or dignity is threatened by statistical proof in a case without a concrete hiring policy, but not in a case with one.

The same logic applies to every other post-Wal-Mart case. Process values cannot plausibly allow for abbreviated statistical proof in securities fraud cases but forbid it in non-securities fraud cases.[148] Nor can process values demand individual mini-trials on antitrust injury in cases with a mismatch between market model and theory of monopolization, but not in cases with a close match.[149] Process values like legitimacy, autonomy, dignity, and equal treatment simply have nothing to do with the differences between these cases. They cannot provide an account of why statistical proof is allowed where it is allowed and forbidden where it is forbidden.

Indeed, in many of these cases, the case law seems to get pure process values backward. Surely if anyone was treated without dignity, it was the employees in Tyson. Their employer not only underpaid them but also tried to cover its tracks and torpedo their claims by failing to keep proper records. If anyone was entitled on dignitary grounds to be heard during a day in court, it was them. Yet the rule of Tyson is the opposite. The statistical proof allowed there ensured that none of the disaffected employees would participate in the judicial process about donning and doffing time. In the real world, of course, most of the employees were perfectly happy with this arrangement. In exchange for reduced process, they got justice—damages for wages wrongfully withheld.

Such an exchange—process for access to the courts—is inherent in every class action. Cost values often demand that participation in process be reduced to promote judicial economy.[150] This explains why, for example, comparatively low-stakes, low-complexity administrative claims are often decided with very little process.[151] The reality of limited resources does not necessarily lead to a crisis of process values. That is, a legal system does not become illegitimate—nor forsake autonomy, dignity, or equal treatment—simply because it makes reasoned concessions of process to cost.[152]

Class actions themselves are the perfect example of such a reasoned trade-off. In authorizing them, Congress recognized that the ordinary rules of litigation made it economically impractical—for both individuals and the courts[153]—to resolve certain smaller claims.[154] And the reasoned solution—class-wide litigation—by its very design contemplates a drastic reduction of participation in process. In an idealized class action, the plaintiffs would present only common questions. Then, the claims of everyone in the class would be disposed of simply by adjudicating the merits of the named plaintiffs’ claims. The defendant and the named plaintiff would participate fully, but no one else would participate at all.[155]

Cost values are therefore crucial to understanding class actions generally. But as with process values, cost values are little help in differentiating which class actions may be litigated using statistical proof. It is difficult to formulate an argument, for example, that judicial resources should be economized in securities fraud cases, but not for consumer fraud.

Thus, only accuracy values adequately explain the Supreme Court’s pattern of decisions in and after Wal-Mart. The lesson is that accuracy must be the lodestar for any new proposed methods of statistical proof designed to facilitate class certification. Methods that can provide sufficient accuracy at the level of individual class members should survive scrutiny under Wal-Mart. And those that cannot will not.

II. AI Class Actions

After Wal-Mart, the problem apparently remains: Aside from the handful of contexts where the Court has endorsed high-accuracy statistical proof, class certification in a range of important doctrinal areas appears impossible.[156] Putative classes of people who were exposed to harmful products—like asbestos—cannot be certified when members’ claims present disparate questions of exposure and injury.[157] Wal-Mart itself shows the persistent difficulty of certification when a class alleges company-wide discrimination by many individual actors.[158] And unlike in securities fraud, class members in garden-variety fraud cases enjoy no presumption by which reliance can be assumed as to every individual class member.[159] Deprived of creative approaches like sampling, there is no obvious way to avoid the obstacle to certification that these individual questions pose. And without class certification, many meritorious claims will go unvindicated.

This Part proposes a new method for efficiently resolving individual questions and achieving class certification: AI class actions. In short, cutting-edge machine learning algorithms can be trained to provide high-accuracy, plaintiff-by-plaintiff answers to individual questions—like medical causation, individual discrimination, or reliance. They can accurately determine whether a particular class member—as opposed to the average one[160]—relied, for example, on a fraudulent misrepresentation. With quick, machine-assisted decision-making available, district courts can confidently rule that, come trial, common questions will predominate over individual ones. Thus, the otherwise uncertifiable classes are rendered viable.

A. Machine Learning: A Brief Overview

“Machine learning” is a broad term, encompassing a number of related algorithms that automatically improve themselves in response to data.[161] The term is capacious enough to cover, for example, classic linear regression, which is often considered one of the simplest machine learning techniques.[162] This Article, however, uses “machine learning”[163] to refer to the more complex, advanced learning algorithms that have recently begun to permeate nearly every corner of society.

Advanced machine learning algorithms power Siri and Alexa, converting sound waves into text and natural language into commands a computer can execute.[164] They detect unauthorized credit card use and identity theft.[165] They evaluate loan applications for creditworthiness.[166] They can recognize objects or even individual faces in photos, enabling services like Google’s image search and Facebook’s automatic tagging.[167] And they are even used in certain courthouses to help determine who should be released to await trial and who should remain jailed.[168] This is just a small sample of where machine learning is—and will soon be—used.

This new[169] generation of learning machines includes a number of specific and technically distinct algorithms—with names like “neural network,” “random forest,” “gradient booster,” and more.[170] But despite their differences, these cutting-edge techniques share important conceptual similarities. At their cores, they are methods for automatically uncovering fantastically complex correlations in data and then making high-accuracy determinations about what the data represent.

These algorithms optimize themselves, learning from sample data.[171] Training generally takes the following form: First, a set of training data is collected. This data consists of example decisions or relations that the user wishes the machine to emulate. It includes both the input features to be analyzed and the correct decision in each instance.[172] For example, to train an algorithm to recognize photos of dogs, the training data would comprise a set of photos pre-labelled as either featuring a dog or not. A loan-issuance algorithm might be trained using a set of known loan applications pre-labelled as to their actual creditworthiness.[173]

Using semi-random processes, the algorithms generate a set of complicated relationships between inputs and outputs, thus producing tentative decisions.[174] They then compare those tentative decisions against the provided correct ones. Using an “error function,” they measure how good, on the whole, the decisions were.[175] Then, using an “optimization function,” the machines update their correlations between inputs and outputs, and they try again.[176] Training stops when error is minimized—that is, when the machine’s outputs match the provided correct ones as closely as possible.[177] The trained algorithm is then tested against another training dataset—one that was “held out” and not used in the initial training process.[178] If the algorithm outputs the correct decisions for that set, this is evidence that its decision function is not “overfitted” to the training data.[179] That is, the algorithm is likely to make accurate determinations about new cases—those for which the correct answers are not already known.[180]

Advanced machine learning differs from, for example, classic linear regression in two important ways. First, advanced machine learning algorithms’ decision functions can be radically nonlinear.[181] That is, they do not attempt to fit the relations of inputs and outputs to any pre-defined, easy-to-interpret type of function.[182] As a result, they have the ability to reduce the errors in their results much more than is possible with older-fashioned techniques.[183] Second, these algorithms can automate feature selection. Classic linear regressions depend on designers to make good guesses about which independent variables will best predict the dependent ones.[184] Advanced algorithms can analyze many potential independent variables, determine which—individually or in combination—are the best predictors, and ignore the others.[185]

The arguments of this Article transcend the technical differences between different advanced-learning machines. Instead, the Article speaks generically of “algorithms,” referring to the whole family of methods that operate on the above-described principles. Particular cases may often raise disagreements about which of the available algorithms ought to be used and who should decide. But so long as there are reasonable methods for resolving such disagreements—and there are[186]—any algorithm described above will do. Algorithmic ecumenicism lends the arguments here some quantum of future-proofness. Technical advances in machine learning are proceeding at a blinding pace. But so long as those advances preserve most of the basic cross-algorithmic features described above, the insights of this Article remain valid.

B. How Algorithms Can Facilitate Class Certification

Now we come to the heart of the proposal. Recall that the key to achieving the predominance of common questions is resolving individual ones without having to resort to resource-intensive procedures like mini-trials.[187] Advanced machine learning algorithms could be trained to efficiently resolve such questions and thus satisfy Rule 23’s predominance requirement.

How would this be done? First and most important is the issue of the training data. Consider the individual medical-causation questions that preclude class certification in mass tort suits.[188] To train a cancer-causation algorithm, one needs a set of real claimants, each labeled with the correct answer as to whether they can show causation. Thus, the training data could comprise a sample set of determinations about causation made by the actual jury in the case.[189]

This scheme should sound familiar. Wal-Mart-style sampling designs also relied on jury determinations of a subset of class members’ individual questions.[190] AI class actions would similarly require the random selection of a statistically significant sample[191] of class members. Those class members’ claims would then be tried. Exactly what such a trial would entail would vary—as trials always do—with the theories presented and the evidence proffered. One can imagine that medical-causation claims might be largely decided on the basis of documentary evidence: work history, medical records, demographic information, and the like. Perhaps live testimony would be required from some of the sample class members. If the chemical exposure happened on the job, the jury might wish to know what exactly each job description entailed. At this stage, the judge should err on the side of admitting relevant information about the sample class members into evidence. This serves more than the normal evidentiary purpose of giving the jury a robust picture of the claimants. As discussed below, it also helps to produce a wealth of machine-digestible information for training the algorithm.[192]

At the end of the sample trial process, the litigants would possess a set of sample class members, a variety of evidence relating to their causation questions, and the jury’s actual determination of causation as to each. This constitutes the training data. Then, training commences. A subset of the sample cases is set aside for later use in validation.[193] The remainder of them are fed to the algorithm.[194]

To accurately mimic human decisions, algorithms do not need to be fed all of the information that the human jury saw. Likely, many features of the training data have little predictive power and will be weeded out in feature selection. But algorithms can also perform well without being fed certain features with significant predictive power. As Talia Gillis and Jann Spiess have recently shown, “when complex, highly nonlinear prediction functions are used, . . . one [omitted] input variable can be reconstructed jointly from the other input variables.”[195] Sometimes this is a bad thing, as when algorithms are able to reproduce humans’ racially biased decisions despite not being fed racial data.[196] The algorithms are essentially able to infer people’s race and make decisions based on race by uncovering complex racial correlates within the data the algorithms do have.[197]

But other times—as with AI class actions—such inferences can be a very good thing. Algorithms designed to issue loans or screen job candidates would add little efficiency if, to make their decisions, they depended on inputs derived from lengthy in-person interviews.[198] Luckily, they do not.[199] Instead, they consume easily collected information—resumé, job history, income, credit score, etc.—and use just that to mimic interview-informed human decisions.[200]

For these same reasons, it would make little sense to train the causation algorithm to rely on, for example, video of live questioning.[201] The whole point of AI class actions is to efficiently resolve individual questions across the entire class. The trial plan therefore cannot call for every individual class member to be deposed so that the algorithm can interpret their depositions. Instead, the algorithm should be trained using only data that can be procured with relative ease as to each of the class members. In the case of medical causation, this would likely include things like medical and employment records. But it could also include, for example, questionnaire-based testimony—made under oath—from plaintiffs, which is already used in class litigation.[202]

So, the algorithm would be trained using the jury’s sample decisions paired with the subset of easily collected and machine-digestible evidence relating to those decisions. As described above, the algorithm would generate a set of fantastically complex and semi-random relationships between the evidentiary inputs and use them to guess at some outputs.[203] It would measure errors in its first set of guesses.[204] Then, using its optimization function, it would update the complex set of relations between inputs and outputs and guess again.[205] This process continues until error is minimized, signaling that the algorithm has generated a decision that matches the jury’s as closely as possible.[206]

Finally, the trained algorithm would be tested against the hold-out set of training data. The causation evidence of those held-out members would be fed to the machine, and the machine would output determinations.[207] If the algorithm accurately resolved those causation questions—which it had never before seen—that would be good evidence that it would perform well across the entire class.[208] That is, the algorithm would be able to simulate—with high accuracy—the jury’s determination about whether each individual class member could show medical causation.

This sounds like speculative fiction, but it is not. Algorithms already exist that can accurately resolve thorny legal questions. For example, a team of professors at the University of Toronto Faculty of Law have successfully used machine learning to predict tax law decisions.[209] Their “Blue J” algorithm can determine, among other issues, whether a given individual is rightly classified as an employee or an independent contractor.[210] This is exactly the kind of commonplace but fact-intensive legal question that algorithms would be asked to answer in AI class actions.

How does Blue J perform? “Exceptionally well.”[211] Given just twenty-one facts about a given worker, the algorithm can correctly classify well over ninety percent of workers as either employees or contractors.[212] This high performance persists when Blue J is turned loose on “a variety of different questions in tax law.”[213] Other academics have applied algorithms to much harder legal problems—for example, predicting appellate and apex court decisions.[214]

Like Blue J, a trained algorithm in an AI class action would efficiently resolve individual questions as to each individual class member. Assume for now that these algorithmic decisions would, like a jury determination, be the final word on each individual issue.[215] This straightforwardly illustrates how machine learning would facilitate class certification where it is currently considered impossible. Issues—like medical causation, reliance, or intent—which presently require individual adjudications for every class member would instead be resolved with extreme efficiency. Only a small sample of class members’ individual questions would be tried to the jury. Everyone else’s questions would be permanently resolved by a computer in essentially no time at all. And this would be the end of the story. With individual questions thus minimized at trial, district courts could rule confidently that common questions predominated, satisfying Rule 23.

C. Generating Sufficient Training Data

Some readers may wonder whether conducting sample trials would really be a feasible way to produce enough data to adequately train an algorithm. Some machine learning questions are harder than others. Thus, when applying an algorithm to a totally novel problem, it is often difficult to know in advance how much training data will be needed.[216] And some problems are so difficult that no amount of training data currently suffices.[217] If the individual questions presented in AI class actions required tens of thousands or millions of instances of training data, then the proposal would be dead on arrival.

There are good reasons to think that, in AI class actions, reasonable amounts of data would suffice. First, the kinds of individual questions to be resolved in a class action are not unprecedented in the world of machine learning. As already noted, the Blue J algorithm can accurately apply settled legal tests to complex factual situations.[218] Common individual questions in class actions—medical causation, reliance, etc.—work the same way, suggesting that similar amounts of training data might be required.[219] Blue J was trained using the entire corpus of relevant Tax Court of Canada decisions—numbering 600.[220] This is well within the range of the feasible, as far as sample adjudications go.[221] Recall that pre-Wal-Mart courts approved as practicable as many as 1,150 hearing-based sample adjudications of individual questions.[222] That number jumps to 2,000 for adjudication based on documentary evidence.[223]

Even smaller datasets might often be sufficient. Blue J used all of the readily available data, but it is possible that it could have done as well with less. Among data science professionals, an oft-cited rule of thumb is that one needs roughly ten training instances for every “feature”—every category of input evidence.[224] Blue J makes accurate contractor/employee assessments based on just twenty-one features about each worker.[225] This suggests a minimum number of training cases in the low 200s. Empirical studies for other machine learning applications—classifying medical images, spotting ads—likewise show minimum datasets around the 200 range.[226]

More importantly, if AI class actions become more prevalent, there are strong reasons to expect more and more individual questions to be resolvable with less and less data. The problem of “small data”—training accurate algorithms using modest datasets—is on the bleeding edge of machine learning research. New techniques are being invented all the time. Of particular relevance here is the technique of “transfer learning.”[227] In transfer learning, an algorithm is trained to answer a question for which lots of data is available.[228] Then, using a small dataset, it is partially retrained to answer a similar question.[229] Transfer learning can reduce the amount of data required to answer the latter question by orders of magnitude.[230]

It is easy to imagine, in a world of widespread AI class actions, that a market would develop for transfer-learning-ready algorithms. Such a market already exists for third-party expert models in, for example, antitrust and securities cases.[231] Private entities—like litigation consulting firms—could similarly train general-purpose algorithms to answer questions of medical causation, reliance, or discrimination. They could rely on vast troves of existing data, both in case law and privately held—for example, by insurance companies that pay tort settlements.[232] Then, the task of adapting a general-purpose medical-causation algorithm to mimic a particular jury’s causation decisions would require very little new data.

D. Litigating Algorithmic Design

Even given sufficient training data, machine learning algorithms are not self-constructing. Talented human data scientists craft them and, in that process, make many granular choices about their design. If AI class actions are adopted, these choices will surely give rise to disputes between litigants.

David Lehr and Paul Ohm have helpfully catalogued the steps to construct a fully functioning machine learning algorithm.[233] Not all of these are likely to raise trouble in class litigation. For example, in AI class actions, there will be no disputes about the “problem definition”—the question the algorithm should be trained to answer.[234] Here, unlike in other machine learning contexts, the answer is clear: The machine will mimic the jury’s answers to individual questions in the sample set.[235]

Disputes over algorithmic design choice are likely to fall into two categories: data collection and preparation, and model training.[236] As to the first, parties may disagree about whether certain data—like work history, medical records, or survey responses, discussed above[237]—is accurate. “Accurate” here has a few distinct meanings. Data may be inaccurate if it was recorded in error;[238] think of a medical record where a doctor has accidentally checked a box denoting hypertension. Data also may be inaccurate if it is a poor proxy for the information that truly matters;[239] obesity is correlated with diabetes, but the former certainly does not imply the latter. Or data may be accurate along both of those dimensions but suffer from statistical deficiencies like lack of randomness or representativeness.[240] Moreover, when data does suffer from some accuracy problem, there are multiple ways to fix it. Inaccurate instances might be “corrected” using approximate values, dropped entirely, or supplemented with additional data.[241] As for conflicts about model training, there are many contestable options for which machine learning algorithm to use, how to partition training and testing data, and how (or how much) to fine-tune algorithms after an initial round of training.[242]

Problems like these may sound daunting, but they are not foreign to complex civil litigation. Some could be resolved by applying basic evidentiary rules. Rule 401 of the Federal Rules of Evidence requires that evidence be relevant.[243] Data that has been systematically misrecorded or that has no correlation to operative facts fails that test. And data with only a weak correlation to the facts that matter might be excluded under Rule 403 for its potential to confuse or prejudice the jury.[244]

Other disputes would likely be resolved via “battles of the experts.” In such battles, both parties submit their own expert opinions about proper algorithmic design. The competing opinions are evaluated by some combination of the court—applying the Daubert test[245]—and the factfinder—choosing the best argument that survives Daubert. These battles are not new, either. Indeed, in complex litigation—including class actions—they are regularly fought specifically about model design. Older-fashioned statistical models—like traditional regressions—are mainstays of securities, antitrust, and disparate impact litigation.[246] These models, like advanced machine learning algorithms, can only be as accurate as the underlying data. They therefore also raise opportunities for expert disagreement about whether faulty data has been adequately corrected. Likewise, as with training a machine learning algorithm, traditional models rely on their designers’ decisions about how best to make the model fit the data. Different regression techniques, for example, fit the data to different types of curves.[247] Which technique to use is a matter of expert discretion.

Some readers may worry that courts and factfinders are ill-equipped to referee expert debates over technical issues of algorithmic design. But the reality is that they already do this sort of thing all the time. The world is a technical place. And governing it increasingly involves technical thinking to be embedded in legal rules and decisions. Certainly, if AI class actions become widespread, there will be an adjustment period as courts develop doctrine about what constitutes credible scientific practice in algorithmic design. Such bumps in the road, however, are the cost of admission if generalist judges are to continue playing any major role governing our increasingly complex world.

There is also another, machine-learning-specific, mechanism by which some disputes over algorithmic design might be resolved. Call this “battle of the algorithms.” Many of the above-described disputes can be boiled down to the following conflict: Which design approach—the plaintiff’s or the defendant’s—will produce a final algorithm that most accurately mimics the jury’s decision procedure? This is a testable proposition. To find the answer, courts could borrow the approach of Kaggle—a Google subsidiary—and conduct a competition.[248] Both parties would train their preferred version of the algorithm. Then, both final versions could attempt to predict the jury’s decisions about the hold-out set of training data.[249] The party with the most accurate results[250] would win, and that algorithm be adopted for the remainder of the AI class action.[251]

E. Settlement Dynamics

For all the talk above about how machine learning could be used at trial, actual examples of its use—if AI class actions were adopted—would likely be rare. Trials, of course, are rare in civil lawsuits generally—and vanishingly so in class actions.[252] Instead, nearly all cases settle. Thus, rather than becoming a mainstay of civil adjudication, the primary effect of introducing algorithmic decision-making into class actions would be a shift in settlement dynamics.

Settlements reflect parties’ best estimates of the litigation’s value.[253] Under the prevailing post-Wal-Mart paradigm, those values are, for many categories of cases, pushed below the efficient level. Again, this is because, for many small but meritorious claims, expected recoveries exceed litigation costs only if litigable as a class action.[254] Thus, when class-wide adjudication is impossible, the value of those claims is arbitrarily pushed to zero. And even for claims with positive value in individual litigation, losing the efficiencies of the class form significantly reduces their value. Thus, the settlement value of a collection of claims depends significantly on the probability of certification.

If machine learning is introduced into class actions, the class vehicle will become newly available across a wide swath of claims in a number of doctrinal areas.[255] This eliminates the extra costs of individual litigation and pushes settlement values up. Defendants, obviously, will not be pleased with that effect.

But there are good reasons to believe that such an increase in settlement values would be good for society as a whole. Presumably, when legislators[256] create legal liability, it is in response to socially costly activity. That is, they judge the effects of some activity to be harmful, and they give those affected the right to recover for that harm. When plaintiffs recover their actual damages, this generates efficient deterrence and also serves other values like corrective justice.[257] Thus, when litigation costs artificially push down recoveries—sometimes to zero—that counts as suboptimal.

But what about claims by which plaintiffs can recover more than their actual damages?[258] Is pushing up settlement values for those claims a good thing? One reason for imposing damages above actual harm is in an attempt to achieve optimal deterrence, even in the face of otherwise-suboptimal enforcement.[259] And if the main reason legislators originally anticipated suboptimal enforcement was because of hurdles to class certification, then removing those barriers might result in over-enforcement. However, there are many other possible sources of underenforcement, most importantly the under-detection of violations.[260] Moreover, there are other reasons to increase damages above actual harm. Doing so might deal justice for particularly onerous wrongs or promote the moral repair of hard-to-value harms.[261] We therefore ought not worry very much that by pushing settlement values up, AI class actions would inadvertently work some social ill.

Increased settlement values would be a gift to plaintiffs. But AI class actions could also benefit defendants in certain settlement situations. Occasionally, defendants argue, classes are certified before the parties have determined which putative class members were injured and which were not.[262] And an order granting certification can lead to an immediate “‘in terrorem’ settlement[].”[263] These factors combine to produce the possibility that when some classes are certified, defendants overpay.

Machine learning algorithms can help parties negotiating settlement avoid both underpayment and overpayment. Just as in an AI-driven trial, where algorithms would sort valid claims from invalid ones and estimate plaintiff-by-plaintiff damages, they could do so during settlement. In fact, the process in settlement would be even more straightforward. Freed from the need to emulate a particular jury’s decision function, the parties would have no need for sample adjudications. Instead, they could directly deploy commercially produced, off-the-shelf algorithms designed to answer the individual questions at hand.[264] Such algorithms would provide quick and cheap answers to the question, “What would an average jury say about the validity and value of each class member’s claim?” Those answers could be transposed directly into settlement agreements, since settling parties have little reason to believe that their jury would be different from the average one.

The introduction of AI class actions could also help facilitate earlier settlement, helping parties avoid wasteful litigation. Under the current paradigm, certification is often uncertain, making it difficult for parties to agree at a lawsuit’s outset on a settlement value. Putative class actions are therefore often litigated to the point of a certification ruling, with settlement becoming a near-certainty immediately thereafter.[265] And because class certification decisions often involve a hard look at the merits, such litigation can be extremely costly.[266] If AI class actions render some set of questionable cases clearly certifiable, then parties in those cases will be able to settle confidently at the outset, avoiding significant waste.

III. Can AI Class Actions Overcome the Wal-Mart Problem?

Assume that, for some significant set of currently uncertifiable classes, machine learning could feasibly be employed in litigation to efficiently resolve individual questions, allowing common ones to predominate. Would such a system pass muster under Wal-Mart and its progeny? This Part argues that the answer is yes; the proposal would survive in either a strong or a slightly weakened form.

A. AI Class Actions Are More Than Accurate Enough to Satisfy Wal-Mart

If, as argued above, values relating to individual accuracy animate Wal-Mart and its progeny, then the question for AI class actions is how accurate they are. Specifically, would AI class actions be at least as accurate—at the individual level—as the methods of statistical proof the Supreme Court allows?

Assume, for the moment, that algorithmic decisions in AI class actions would be, like jury determinations, the final word about individual questions. This is the simplest case to evaluate under Wal-Mart, since it offers no opportunity to correct potential errors in algorithmic outputs. A more complex version of AI class actions—where algorithmic determinations operate as mere presumptions that are challengeable by either party—is analyzed below.[267]

Even in this simple version of the proposal, AI class actions would be very accurate. Accuracy is the whole point of advanced machine learning algorithms. One way to understand the difference between cutting-edge algorithms and older-fashioned linear regression is that the former increases accuracy—often dramatically—while sacrificing interpretability.[268]

Just how accurate would machine-learning-driven resolutions of individual questions be? There is no way to give a precise figure, in advance, as to every conceivable individual question. But we have guideposts. The designers of the Blue J algorithm reported in 2017 that “[i]n out-of-sample testing, in a variety of different questions in tax law, Blue J consistently gets more than 90% of predictions correct.”[269] Since many individual questions in class actions are similar in nature to those that Blue J predicts,[270] we might expect similar performance.[271] Similarly, classification algorithms regularly achieve accuracy approaching 100% across a range of at least moderately difficult non-legal questions.[272] It seems likely, then, that for many run-of-the-mill individual questions in class litigation, algorithms will be able to produce correct results nearly all the time.[273]

Would an algorithm that could correctly predict a jury’s decisions about individual questions over ninety percent of the time be good enough? Obviously, neither the Wal-Mart Court nor any other has articulated a clear cutoff. But examining the differences between disallowed statistical proof—like the regressions in Wal-Mart—and allowed proof—like the market analysis in Halliburton—provides substantial guidance.

The Wal-Mart Court believed that the accuracy of the plaintiffs’ regression analysis might be very low, indeed. Again, the Court understood the analysis to show only aggregate gender disparities at the level of forty-one U.S. corporate regions—each containing, on average, tens of thousands of employees.[274] This, the Court thought, left opportunity for high aggregation bias: “A regional pay disparity, for example, may be attributable to only a small set of Wal–Mart stores” or managers.[275] And absent a unified system for determining pay, “almost all of [the managers] will claim to have been applying some sex-neutral, performance-based criteria[.]”[276] Ultimately, the Court thought that such arguments, made by “almost all” of the managers would be highly plausible: “[L]eft to their own devices most managers in any corporation . . . would select sex-neutral, performance-based criteria for hiring and promotion that produce no actionable disparity at all.”[277] In sum, the Court thought that the statistical evidence left open the possibility of getting most liability determinations wrong. And the proposed causal story, the Court determined, made that possibility likely.[278]

Contrast this with the fraud-on-the-market proof in Halliburton. There, the Court credited recent economic scholarship showing that markets are not perfectly efficient.[279] It likewise acknowledged that not all investors rely on the efficiency of market prices to transmit information—including alleged misrepresentations—to them.[280] Yet, despite these potential sources of error, the Court determined that actual error rates would be quite low. This was because, the Court thought, “market professionals generally consider most publicly announced material statements about companies, thereby affecting stock market prices.”[281] Likewise, “it is reasonable to presume that most investors—knowing that they have little hope of outperforming the market . . . —will rely on the security’s market price.”[282] Thus, the Court judged the fraud-on-the-market presumption largely accurate, with defendants needing only to “pick off the occasional class member here or there through individualized rebuttal.”[283]

Compared against these two examples, AI class actions look quite good. If algorithms can resolve class members’ individual questions with accuracy rates in the high nineties, they look much more like Halliburton than Wal-Mart. Their decisions would not be “most[ly]” wrong,[284] but—by a significant margin—“most[ly]” right.[285] Indeed, they would be much better than that. Their low error rates would produce wrong results only for “the occasional class member here or there.”[286]

Taking a more theoretical view, we should think about cutting-edge algorithms as being at least as accurate as the very best traditional statistical models. Again, the whole point of them is to significantly outperform, for example, traditional regression analyses[287] at classification and quantification in individual cases.[288] And again, algorithms do this by enabling extreme nonlinearity in their decision functions.[289] That is, they can account for substantially more complicated relationships between inputs and outputs, generating individual answers that, in general, lie significantly closer to the truth.[290] Thus, if the Supreme Court endorses, for example, well-calibrated regression analysis, so too should it endorse machine-learning-based proof.

B. Weak AI Class Actions Provide Even More Bang-for-the-Buck

The preceding examination of AI class actions’ legality assumed that algorithmic answers to individual questions would be the final word on those questions. It argued that such a system would survive scrutiny under Wal-Mart. Nevertheless, some readers may have lingering doubts. And even those who accept that the above-described version of the proposal would survive Wal-Mart scrutiny might nevertheless wonder whether it could be further improved.

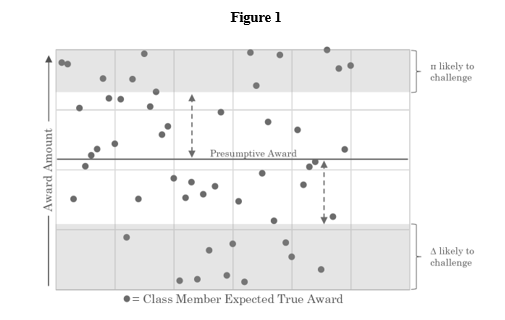

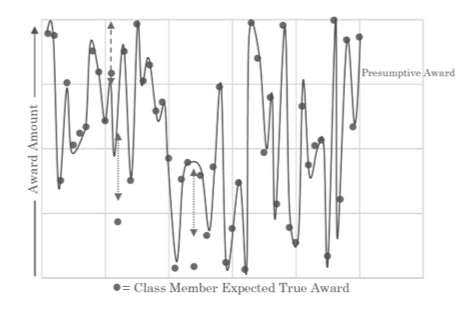

This subpart explores a modified, weaker version of the AI class action proposal designed to allay any lingering concerns. The weaker version promotes marginal improvements in individual accuracy, further shoring up the argument under Wal-Mart. And at the same time, the weakened proposal makes improvements in terms of pure process values like legitimacy and autonomy. Although those values are not what drives acceptances and rejections of statistical proof under Wal-Mart, they are nevertheless important. Thus, it makes sense to promote them when doing so requires little sacrifice elsewhere.

The weakened proposal borrows a design feature from a few of the cases in Wal-Mart’s lineage: rebuttable individual presumptions. Observe that the methods of statistical proof allowed after Wal-Mart fall into two categories. Sometimes, statistically derived answers to individual questions are the final word. Tyson authorized the use of average donning and doffing times because no evidence of individual times existed.[291] Thus, the only options were to apply the average answer to every class member or to reject it wholesale.[292] Similarly, in antitrust, most courts do not permit defendants to rebut statistical proof of antitrust injury on a plaintiff-by-plaintiff basis.[293] But other permissible methods of statistical proof create presumptions that may be individually rebutted. Fraud-on-the-market and Title VII disparate impact work this way.[294] Defendants are entitled to present evidence that any individual plaintiff did not, respectively, actually rely on efficient market prices or suffer an injury from a discriminatory policy.[295]

Similarly, the weak version of AI class actions would treat algorithmic answers to individual questions as rebuttable presumptions.[296] Either party might challenge them. Defendants might challenge an individual finding of liability or argue that damages should be lower, and plaintiffs would do the opposite. Parties would mount their challenges in precisely the same manner as they would in, say, a Title VII disparate impact case. The challenging party would bear a burden of production, requiring a showing sufficient to persuade a reasonable jury that the algorithm’s decision was wrong.[297] Such a showing might include the introduction of new evidence. Or it could amount to a particularized argument that the algorithm misinterpreted the existing evidence. Defendants could also raise affirmative defenses applicable only to individual class members. Whenever a challenger carried its burden of production, the challenge would be litigated to judgment via normal procedures, potentially terminating in a jury decision.